Once upon a time, I did a blog posting about why, when we test digital audio systems, we typically use a 997 Hz sine wave instead of a 1000 Hz tone.

The short version of this is the following:

Let’s say that I digitally create a (not-dithered) 1000 Hz sine wave at 0 dB FS in a 16-bit system running at 48 kHz. This means that every second, there are exactly 1000 cycles of the wave, and since there are 48,000 samples per second, this, in turn, means that there is one cycle every 48 samples, so sample #49 is identical to sample #1.

So, we are only testing 48 of the possible 2^16 ( = 65,536) quantisation values, right?

Wrong. It’s worse than you think.

If we zoom in a little more, we can see that Sample #1 = 0 (because it’s a sine wave). Sample #25 is also equal to 0 (because 48,000 / 1,000 is a nice number that is divisible by 2).

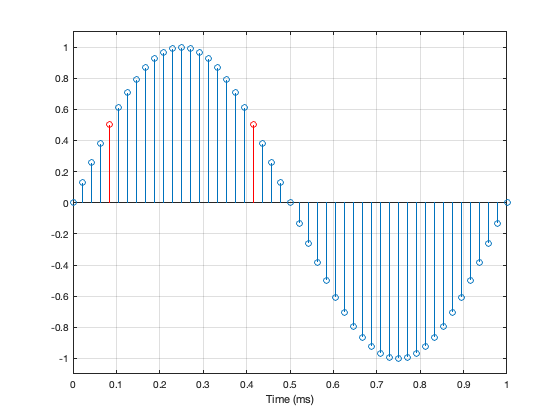

Unfortunately, 48,000 / 1,000 is a nice number that is also divisible by 4. So what? This means that when the sine wave goes up from 0 to maximum, it hits exactly the same quantisation values as it does on the way from maximum back down to 0. For example, in the figure below, the values of the two samples shown in red are identical. This is true for all symmetrical points in the positive side and the negative side of the wave.

Jumping ahead, this means that, if we make a “perfect” 1 kHz sine wave at 48 kHz (regardless of how many bits in the system) we only test a total of 25 quantisation steps. 0, 12 positive steps, and 12 negative ones.

Not much of a test – we only hit 25 out of a possible 65,536 values in a 16-bit system (or 25 out of 16,777,216 possible values in a 24-bit system).
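That count is easy to check numerically. The following is only a sketch under a few assumptions that are not in the text above: the function name `distinct_codes` is made up, the sine is scaled to the largest positive code (2^(bits-1) - 1), and quantisation is plain rounding to the nearest integer. The sine values are also snapped to 12 decimal places first, because floating-point noise would otherwise make a few mathematically identical samples round differently.

```python
import math

def distinct_codes(freq_hz, fs_hz=48_000, bits=16):
    """Count the distinct quantisation values hit by an undithered full-scale sine."""
    peak = 2 ** (bits - 1) - 1                 # largest positive code, e.g. 32767
    # The sampled sequence repeats after fs / gcd(f, fs) samples.
    period = fs_hz // math.gcd(freq_hz, fs_hz)
    codes = set()
    for n in range(period):
        s = math.sin(2 * math.pi * freq_hz * n / fs_hz)
        # Snap away floating-point noise (around 1e-16) so that mirrored
        # samples, which are mathematically equal, quantise identically.
        s = round(s, 12)
        codes.add(round(peak * s))             # round to the nearest integer code
    return len(codes)

print(distinct_codes(1000))            # 25
print(distinct_codes(1000, bits=24))   # 25
print(distinct_codes(997))             # many thousands of different values
```

Because 997 and 48,000 share no common factors, the 997 Hz sequence only repeats after a full second (48,000 samples), which is why it visits so many more values.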

What if I wanted to make a signal that tested ALL possible quantisation values in an LPCM system? One way to do this is to simply make a linear ramp that goes from the lowest possible value up to the highest possible value, step by step, sample by sample. (Of course, there are other ways, but it doesn’t matter… we’re just trying to hit every possible quantisation value…)
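As a rough illustration, a 16-bit version of that ramp could be written to a WAV file with nothing but Python’s standard library. The function name `write_ramp_wav`, the mono layout, and the 48 kHz default are assumptions made for this sketch; the 24- and 32-bit cases would need different sample packing.

```python
import array
import sys
import wave

def write_ramp_wav(path, fs_hz=48_000):
    """Write a mono 16-bit WAV that steps through every code from -32768 to 32767."""
    # One sample per quantisation value, lowest to highest ("h" = signed 16-bit).
    ramp = array.array("h", range(-32768, 32768))
    if sys.byteorder == "big":
        ramp.byteswap()            # WAV stores samples little-endian
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)        # mono
        wav.setsampwidth(2)        # 2 bytes = 16 bits per sample
        wav.setframerate(fs_hz)
        wav.writeframes(ramp.tobytes())
    return len(ramp)               # number of samples written
```

Calling `write_ramp_wav("ramp16.wav")` (the file name is just an example) returns 65,536, one sample for each 16-bit value.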

How long would it take to play that test signal?

First we convert the number of bits to the number of quantisation steps. This is done using the equation 2^bits. So, you get the following results:

| Number of Bits | Number of Quantisation Steps |
| --- | --- |
| 16 | 65,536 |
| 24 | 16,777,216 |
| 32 | 4,294,967,296 |

If each sample has a different quantisation value, and we play the file at the sampling rate, then we can calculate the time it will take by dividing the number of quantisation steps by the sampling rate. This results in the following:

| Sampling Rate (kHz) | 16 Bits | 24 Bits | 32 Bits |
| --- | --- | --- | --- |
| 44.1 | 1.5 seconds | 6.3 minutes | 27.1 hours |
| 48 | 1.4 seconds | 5.8 minutes | 24.9 hours |
| 88.2 | 0.7 seconds | 3.2 minutes | 13.5 hours |
| 96 | 0.7 seconds | 2.9 minutes | 12.4 hours |
| 176.4 | 0.4 seconds | 1.6 minutes | 6.8 hours |
| 192 | 0.3 seconds | 1.5 minutes | 6.2 hours |
| 352.8 | 0.2 seconds | 47.6 seconds | 3.4 hours |
| 384 | 0.2 seconds | 43.7 seconds | 3.1 hours |
| 705.6 | 0.1 seconds | 23.8 seconds | 1.7 hours |
| 768 | 0.1 seconds | 21.8 seconds | 1.6 hours |
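The arithmetic behind the table is just 2^bits divided by the sampling rate. Here is a small sketch of it; the helper names `play_time_seconds` and `pretty` are invented for this example, and the unit switching (seconds, minutes, hours) simply mimics the way the table is written.

```python
SAMPLE_RATES_KHZ = [44.1, 48, 88.2, 96, 176.4, 192, 352.8, 384, 705.6, 768]
BIT_DEPTHS = [16, 24, 32]

def play_time_seconds(bits, fs_khz):
    """Time to play one sample per quantisation value at the given rate."""
    return 2 ** bits / (fs_khz * 1000)

def pretty(seconds):
    """Format a duration using the largest sensible unit, to one decimal place."""
    if seconds < 60:
        return f"{seconds:.1f} seconds"
    if seconds < 3600:
        return f"{seconds / 60:.1f} minutes"
    return f"{seconds / 3600:.1f} hours"

for fs in SAMPLE_RATES_KHZ:
    row = [pretty(play_time_seconds(bits, fs)) for bits in BIT_DEPTHS]
    print(f"{fs} kHz:", " | ".join(row))
```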

So, the moral of the story is, if you’re testing the validity of a quantiser in a 32-bit fixed-point system, and you’re not able to do it off-line (meaning that you’re locked to a clock running at the correct sampling rate), you’d hope either (1) that it’s also running at a crazy-high sampling rate or (2) that you’re getting paid by the hour.

Why am I thinking about this?

I often get asked for my opinion about audio players; these days, network streamers especially, since they’re in style.

Let’s say, for example, that someone asked me to recommend a network streamer for use with their system. In order to recommend this, I need to measure it to make sure it behaves.

One of the tests I’m going to run is to ensure that every sample value on a file is accurately output from the device. Let’s also make it simple and say that the device has a digital output, and I only need to test 3 LPCM audio file formats (WAV, AIFF and FLAC – since those can be relied on to give a bit-for-bit match from file to output). (We’ll also pretend that the digital output can support a 32-bit audio word…)

So, to run this test, I’m going to

- create the test files that I described above (checking every quantisation value at all three bit depths and all 10 sampling rates)

- play them

- record them

- and then compare whether I have a bit-for-bit match from input (the original file) to the output
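The last step in that list, the bit-for-bit comparison, might look something like the sketch below. Everything here is hypothetical: the function names, the idea of locating the start of the test signal by searching for its first few samples (to skip any silence the recorder captured before playback began), and the assumption that both signals are already available as sequences of integer sample values.

```python
def find_start(original, recorded, probe=16):
    """Return the index in `recorded` where the first `probe` samples of
    `original` appear, or None if they never do."""
    head = list(original[:probe])
    for i in range(len(recorded) - len(head) + 1):
        if list(recorded[i:i + len(head)]) == head:
            return i
    return None

def first_mismatch(original, recorded, probe=16):
    """Return None for a bit-for-bit match, otherwise the index (within
    `original`) of the first sample that was not reproduced exactly."""
    start = find_start(original, recorded, probe)
    if start is None:
        return 0                      # could not even find the opening samples
    for i, expected in enumerate(original):
        j = start + i
        if j >= len(recorded):
            return i                  # the recording ended too early
        if recorded[j] != expected:
            return i
    return None

ramp = list(range(-32768, 32768))     # the 16-bit test ramp
capture = [0] * 100 + ramp + [0] * 100
print(first_mismatch(ramp, capture))  # None, meaning a bit-for-bit match
```

Note that an error inside the first `probe` samples is reported as index 0, since the start of the signal cannot be located in that case.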

If you add up all the values in the table above for the 10 sampling rates and the three bit depths, then you get to a total of 4.2 DAYS of play time (playing audio constantly 24 hours a day) per file format.

So, say I wanted to test three file formats for all of the sampling rates and bit depths, then I’m looking at playing & recording 12.6 days of audio – and then I can start the analysis.

REALLY‽

Of course this is silly… I’m not going to test a 32-bit, 44.1 kHz file… In fact, if I don’t bother with the 32-bit values at all, then my time per file format drops from 4.2 days down to 23.7 minutes of play time, which is a lot more feasible, but less interesting if I’m getting paid by the hour.
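For anyone who wants to check the totals, here is a short sketch that adds up 2^bits / sampling rate over every combination. The function name `total_seconds` is made up for the example; the sampling rates and bit depths are the ones used throughout this posting.

```python
SAMPLE_RATES_HZ = [44_100, 48_000, 88_200, 96_000, 176_400,
                   192_000, 352_800, 384_000, 705_600, 768_000]

def total_seconds(bit_depths):
    """Total play time for one full ramp per bit depth at every sampling rate."""
    return sum(2 ** bits / fs for bits in bit_depths for fs in SAMPLE_RATES_HZ)

everything = total_seconds([16, 24, 32])
print(f"{everything / 86_400:.1f} days per file format")               # 4.2
print(f"{3 * everything / 86_400:.1f} days for three file formats")    # 12.6
print(f"{total_seconds([16, 24]) / 60:.1f} minutes without 32-bit")    # 23.7
```

The 32-bit ramps account for almost all of the total: each one is 256 times longer than the 24-bit ramp at the same sampling rate.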

However, it was fun to calculate – and it just goes to show how big a number 2^32 is…