Three decades of Music Distribution

Check this link for an interesting way to visualise how CD swallowed up cassettes, and how, in 2008, music distribution reached its pinnacle when 11.1% of the market was comprised of ringtone sales… Sigh…

Bang & Olufsen BeoVision Avant Reviews

I was responsible for the final sound design (aka tonal balance) of the loudspeakers built into the BeoVision Avant. So, I’m happy to share some of the blame for some of the comments (at least on the sound quality) from the reviews.

from Home Cinema Choice magazine

“Where the Avant really gets space-age, though, is with … its ability to drive 21 audio channels”

“This effort by the integrated speakers, together with its unprecedented audio flexibility, makes the Avant the finest sounding TV I’ve ever heard.”

from TrustedReviews.com

“Even a high-end sound bar would struggle to match the gorgeous finesse the Avant combines with its raw power. The speakers reproduce soundtrack subtleties more precisely and elegantly than any other TV we’ve heard. And they do so no matter how dense the soundstage becomes, and without so much as a hint of treble harshness.”

“Then there’s that rear-mounted subwoofer. We had worried that the way this angled subwoofer fires up and out through an actually quite narrow vent could cause boominess or distortion, but not a bit of it. Instead very impressive and well-rounded amounts of bass meld immaculately into the bottom end of the wide mid-range delivered by those terrific left, right and centre speakers.”

from flatpanelshd.com

“Compared to all other TVs on the market (non-B&O) there is no competition. Sound is so much better. However, we also have to point out that the TV did not receive the best conditions for a proper audio demonstration.”

The best thing I learned today

Bang & Olufsen BeoLab 18 Reviews

I was part of the development team, and one of the two persons who decided on the final sound design (aka tonal balance) of the B&O BeoLab 18 loudspeakers. So, I’m happy to share some of the blame for some of the comments (at least on the sound quality) from the reviews.

Bernard Dickinson at Live Magazines said:

“The sound reproduction is flawless”

Lyd & Billede’s August 2014 review said

“Lydkvaliteten er rigtig god med en åben, distinkt og fyldig gengivelse, som ikke gør højopløste lydformater til skamme.” (The sound quality is very good with an open, clear and detailed reproduction, which does not put high-resolution audio formats to shame.)

and “Stemmerne er lige klare og tydelige, hvad enten vi sidder lige i smørhullet eller befinder os langt ude i siden. Det er faktisk ret usædvanligt og gør, at BeoLab 18 egner sig lige godt til både baggrundsmusik og aktiv lytning” (The voices are crisp and clear, whether we are sitting right in the sweet spot or far off to the side. It’s actually quite unusual and makes the BeoLab 18 equally suited for both background music and active listening)

B&O Tech: Combinatorics

#26 in a series of articles about the technology behind Bang & Olufsen loudspeakers

I occasionally drop in to read and comment on the fora at www.beoworld.org. This is a group of Bang & Olufsen enthusiasts who, for the most part, are a great bunch of people and are very supportive of the brand (and yet, like any good family member, are not afraid to offer constructive criticism when it’s warranted…). Anyways, during one discussion about Speaker Groups in the BeoVision Avant, BeoVision 11, BeoPlay V1 and BeoSystem 4, the following question came up:

Speaker Groups

Would be interesting to know how many different ‘Speaker Group constellations’ you actually could make with the new engine. Of course you are limited to 10, if you want to save them. But I guess that should be enough for most of us.

This got me thinking about exactly how many possible combinations of parameters there are in a single Speaker Group in our video products. As a result, I answered with the response copied-and-pasted below:

Multiply the number of audio channels you have (internal + external) by 17 (the total number of possible speaker roles not including subwoofers, but including NONE as an option in case you want to use a subset of your loudspeakers) or 22 (the total number of possible speaker roles including subwoofers) to get the total number of Loudspeaker Constellations.

If you want to include Speaker Level and Speaker Distance, then you will have to multiply the previous result by 301 (possible distances for each loudspeaker) and multiply again by 61 (the total number of Speaker Levels) to get the total number of possible Configurations.

This means:

If you have a BeoPlay V1 (with 2 internal + 6 external outputs) the answers are

- 136 constellations without a subwoofer, or

- 176 constellations with subwoofers, and

- a maximum of 3,231,536 possible Speaker Groups Configurations (including Levels and Distances)

If you have a BeoVision 11 without Wireless (2+10 channels), then the totals are

- 204 constellations without a subwoofer, or

- 264 constellations with subwoofers, and

- 4,847,304 possible Speaker Groups Configurations (including Levels and Distances)

If you have a BeoVision 11 with Wireless (2+10+8 channels), then the totals are

- 340 constellations without a subwoofer, or

- 440 constellations with subwoofers, and

- 8,078,840 possible Speaker Groups Configurations (including Levels and Distances)

If you have a BeoVision Avant (3+10+8 channels), then the totals are

- 357 constellations without a subwoofer, or

- 462 constellations with subwoofers, and

- 8,482,782 possible Speaker Groups Configurations (including Levels and Distances)

Note that these numbers are FOR EACH SPEAKER GROUP. So you can multiply each of those by 10 (for the number of Speaker Groups you have available in your TV). The reader is left to do this math on his/her own.

Note as well that I have not included the Speaker Roles MIX LEFT, MIX RIGHT – for those of you who are using your Speaker Groups to make headphone outputs – you know who you are… ;-)

Note as well that I have not included the possibilities for the Bass Management control.
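For anyone who wants to check the totals, here is a minimal sketch that simply reproduces the arithmetic exactly as it is described in the response above (channels times roles, then times distances and levels). The function and variable names are my own, chosen only for illustration.

```python
# Reproduces the arithmetic described in the forum response above.
# All names here are illustrative; they do not come from any B&O software.
ROLES_WITHOUT_SUBWOOFERS = 17   # includes NONE
ROLES_WITH_SUBWOOFERS = 22
SPEAKER_DISTANCES = 301
SPEAKER_LEVELS = 61

PRODUCT_CHANNELS = {
    "BeoPlay V1": 2 + 6,
    "BeoVision 11 without Wireless": 2 + 10,
    "BeoVision 11 with Wireless": 2 + 10 + 8,
    "BeoVision Avant": 3 + 10 + 8,
}


def speaker_group_counts(channels):
    """Return (constellations without subs, with subs, configurations)."""
    without_subs = channels * ROLES_WITHOUT_SUBWOOFERS
    with_subs = channels * ROLES_WITH_SUBWOOFERS
    configurations = with_subs * SPEAKER_DISTANCES * SPEAKER_LEVELS
    return without_subs, with_subs, configurations


for product, channels in PRODUCT_CHANNELS.items():
    without_subs, with_subs, configurations = speaker_group_counts(channels)
    print(f"{product}: {without_subs}, {with_subs}, {configurations:,}")
```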

Sound Modes

This also got me thinking about the total number of possible combinations of settings there are for the Sound Modes in the same products. In order to calculate this, you start with the list of parameters and the number of possible values for each, shown below:

- Frequency Tilt: 21

- Sound Enhance: 21

- Speech Enhance: 11

- Loudness On/Off: 2

- Bass Mgt On/Off: 2

- Balance: 21

- Fader: 21

- Dynamics (off, med, max): 3

- Listening Style: 2

- LFE Input on/off: 2

- Loudness Bass: 13

- Loudness Treble: 13

- Spatial Processing: 3

- Spatial Surround: 11

- Spatial Height: 11

- Spatial Stage Width: 11

- Spatial Envelopment: 11

- Clip Protection On/Off: 2

Multiply all those together and you get 1,524,473,211,092,832 different possible combinations for the parameters in a Sound Mode.

Note that this does not include the global Bass and Treble controls which are not part of the Sound Mode parameters.

However, it’s slightly misleading, since some parameters don’t work in some settings of other parameters. For example:

All four Spatial Controls are disabled when the Spatial Processing is set to either “1:1” or “downmix” – so that takes away 29282 combinations.

If Loudness is set to Off, then the Loudness Bass and Loudness Treble are irrelevant – so that takes away 169 combinations.

So, that reduces the total to only 1,524,473,211,063,381 total possible parameter configurations for a single Sound Mode.
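The multiplication is easy to check with a few lines of code. This is only a sketch of the calculation described above: the dictionary keys are copied from the parameter list, and the two subtractions are applied in the same way as in the text.

```python
from math import prod

# Number of possible values for each Sound Mode parameter, as listed above.
SOUND_MODE_PARAMETERS = {
    "Frequency Tilt": 21,
    "Sound Enhance": 21,
    "Speech Enhance": 11,
    "Loudness On/Off": 2,
    "Bass Mgt On/Off": 2,
    "Balance": 21,
    "Fader": 21,
    "Dynamics": 3,
    "Listening Style": 2,
    "LFE Input on/off": 2,
    "Loudness Bass": 13,
    "Loudness Treble": 13,
    "Spatial Processing": 3,
    "Spatial Surround": 11,
    "Spatial Height": 11,
    "Spatial Stage Width": 11,
    "Spatial Envelopment": 11,
    "Clip Protection On/Off": 2,
}

total_combinations = prod(SOUND_MODE_PARAMETERS.values())

# The two reductions, applied exactly as described in the text:
spatial_disabled = 2 * 11 ** 4   # four Spatial controls, two disabling modes
loudness_disabled = 13 * 13      # Loudness Bass and Loudness Treble
reduced_combinations = total_combinations - spatial_disabled - loudness_disabled

print(f"{total_combinations:,}")
print(f"{reduced_combinations:,}")
```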

Finally, this calculation assumes that you have all output Speaker Roles in use. For example, if you don’t have any height loudspeakers, then the Spatial Height control won’t do anything useful.

If you’d like more information on what these mean, please check out the Technical Sound Guide for Bang & Olufsen video products downloadable from this page.

B&O Tech: Where great sound starts

#25 in a series of articles about the technology behind Bang & Olufsen loudspeakers

You’ve bought your loudspeakers, you’ve connected your player, your listening chair is in exactly the right place. You sit down, put on a new recording, and you don’t like how it sounds. So, the first question is “who can I blame!?”

Of course, you can blame your loudspeakers (or at least, the people that made them). You could blame the acoustical behaviour of your listening room (that could be expensive). You could blame the format that you chose when you bought the recording (was it 128 kbps MP3 or a CD?). Or, if you’re one of those kinds of people, you could blame the quality of the AC mains cable that provides the last meter of electrical current supply to your amplifier from the hydroelectric dam 3000 km away. Or you could blame the people who made the recording.

In fact, if the recording quality is poor (whatever that might mean) then you can stop worrying about your loudspeakers and your room and everything else – they are not the weakest link in the chain.

So, this week, we’ll talk about who those people are that made your recording, how they did it, and what each of them was supposed to look after before someone put a CD on a shelf (or, if you’re a little more current, put a file on a website).

Recording Engineer

The recording engineer is the person you picture when you think about a recording session. You have the musicians in the studio or the concert hall, singing and playing music. That sound travels to microphones that were set up by a Recording Engineer who then sits behind a mixing console (if you’re American – a “mixing desk” if you’re British) and fiddles with knobs obsessively.

There’s a small detail here that we should not overlook. Generally speaking, a “recording engineer” has to do two things that happen at different times in the process of making a recording. The first is called “tracking” and the second is called “mixing”.

Tracking

Normally, bands don’t like playing together – sometimes because they don’t even like to be in the same room as each other. Sometimes schedules just don’t work out. Sometimes the orchestra and the soloist can’t be in the same city at the same time.

In order to circumvent this problem, the musicians are recorded separately in a process called “tracking”. During tracking, each musician plays their part, with or without other members of the band or ensemble. For example, if you’re a rock band, the bass and the drummer usually arrive first, and they play their parts. In the old days, they would have been recorded to separate tracks on a very wide (2″!) magnetic tape (hence the term “tracking”) where each instrument is recorded on a separate track. That way, the engineer has a separate recording of the kick drum and the snare drum and each tom-tom and each cymbal, and so on and so on. Nowadays, most people don’t record to magnetic tape because it’s too expensive. Instead, the tracks are recorded on a hard disc on a computer. However, the process is basically the same.

Once the bass player and the drummer are done, then the guitarist comes into the studio to record his or her parts while listening to the previously-recorded bass and drum parts over a pair of headphones. Then the singer comes in and listens to the bass, drums and guitar and sings along. Then the backup vocalists come in, and so on and so on, until everyone has recorded their part.

During the tracking, the recording engineer sets up and positions the microphones to get the optimal sound for each instrument. He or she will make sure that the gain that is applied to each of those microphones is correct – meaning that it’s recorded at a level that is high enough to mask the noise floor of the electronics and the recording medium, but not so high that it distorts. In the old days, this was difficult because the dynamic range of the recording system was quite small – so they had to stay quite close to the ceiling all the time – sometimes hitting it. Nowadays, it’s much easier, since the signal paths have much wider dynamic ranges so there’s more room for error.

In the case of a classical recording, it might be a little different for the musicians, but the technical side is essentially the same. For example, an orchestra will play (so you don’t bring in the trombone section first – everyone plays together) with a lot of microphones in the room. Each microphone will be recorded on its own individual track, just like with the rock band. The only difference is that everyone is playing at the same time.

Once all the tracking is done the musicians are finished. They’ve all been captured, each on their own track that can be played back later in isolation (for example, you can listen to just the snare drum, or just the microphone above the woodwind section). Sometimes, they will even have played or sung their part more than once – so we have different versions or “takes” to choose from later. This means that there may be hundreds of tracks that all need to be mixed together (or perhaps just left out…) in order to make something that normal people can play on their stereo.

Mixing

Now that all the individual tracks are recorded, they have to be combined into a pretty package that can be easily delivered to the customers. This means that all of those individual tracks that have been recorded have to be assembled or “mixed” together into a version that has, say, only two channels – one for the left loudspeaker and one for the right loudspeaker. This is done by feeding each individual track to its own input on a mixing console and listening to them individually to see how they best fit together. This is called the “mixing” process. During this stage, basic decisions are made like “how loud should the vocals be relative to the guitars (and everything else)”. However, it’s a little more detailed than that. Each track will need its own processing or correction (maybe changing the equalisation on the snare drum – or altering the attack and decay of the bass guitar using a dynamic range compressor – or the level of the vocal recording is changed throughout the tune to compensate for the fact that the singer couldn’t stay the same distance from the microphone whilst singing…) that helps it to better fit into the final mix.

If you walk into the control room of a recording studio during a mixing session, you’d see that it looks almost exactly like a recording session – except that there are no musicians playing in the studio. This is because what you usually see on videos like this one is a tracking session – but the recording engineer usually does a “rough mix” during tracking – just to get a preliminary idea of how the puzzle will fit together during mixing.

Once the mixing session for the tune is finished, then you have a nearly-finished product. You at least have something that the musicians can take home to have a listen to see if they’re satisfied with the overall product so far.

Editing

In classical music there is an extra step that happens here. As I said above, with classical recordings, it’s not unusual for all the musicians to play in the same room at the same time when the tracking is happening. However, it is unusual that they are able to play all the way through the piece without making any mistakes or having some small issues that they want to fix. So, usually, in a classical recording, the musicians will play the piece (or the movement) all the way through 2 or 3 times. While that happens, a Recording Producer is sitting in the control room, listening and making notes on a copy of the score. Each time there is a mistake, the producer makes a note of it – usually with a red mark indicating the Take Number in which the mistake was made. If, after 2 or 3 full takes of the piece, there are points in the piece that have not been played correctly, then they go back and fix small bits. The ensemble will be asked to play, say 5 bars leading up to the point that needs fixing – and to continue playing for another 5 bars or so.

Later, those different takes (either full recordings, or bits and pieces) will be cut and spliced together in a process called editing. In the old days, this was done using a razor blade to cut the magnetic tape and stick it back together. For example, if you listen to some of Glenn Gould’s recordings, you can hear the piano playing along, but the tape hiss changes suddenly in the background noise. This is the result of a splice between two different recordings – probably made on different days or with different brands of tape. Nowadays, the “splicing” is done on a computer where you fade out of one take and fade into another gradually over 10 ms or so.
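To make the idea of a digital “splice” a little more concrete, here is a minimal sketch of a linear crossfade between two takes. It assumes that both takes are already time-aligned lists of samples of the same length, and that the sampling rate is 48 kHz; real editing software typically offers other fade shapes as well. The function name and parameters are hypothetical.

```python
def crossfade(take_a, take_b, splice_index, fade_samples):
    """Play take_a up to splice_index, then fade linearly into take_b."""
    output = list(take_a[:splice_index])
    for i in range(fade_samples):
        # Weight of take_b rises from just above 0 to just below 1.
        weight_b = (i + 1) / (fade_samples + 1)
        n = splice_index + i
        output.append((1.0 - weight_b) * take_a[n] + weight_b * take_b[n])
    output.extend(take_b[splice_index + fade_samples:])
    return output


SAMPLE_RATE_HZ = 48000                            # assumed for this example
fade_samples = round(0.010 * SAMPLE_RATE_HZ)      # 10 ms -> 480 samples

# Two dummy "takes": a constant 1.0 and a constant 0.0, so the fade is visible.
take_1 = [1.0] * 2000
take_2 = [0.0] * 2000
edited = crossfade(take_1, take_2, splice_index=500, fade_samples=fade_samples)
```

With real recordings, the two takes would of course be different performances of the same bars, and the edit point would be chosen by ear.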

If the editing was perfect, then you’ll never hear that it happened. Sometimes, however, it’s possible to hear the splice. For example, listen to this recording and listen to the overall level and general timbre of the piano. It changes to a quieter, duller sound from about 0′ 27″ to about 0′ 31″. This is a rather obvious tape splice to a different recording than the rest of the track.

Mastering Engineer

The final stage of creating a recording is performed by a Mastering Engineer in a mastering studio. This person gets the (theoretically…) “finished” product and makes it better. He or she will sit in a room that has very little gear in it, listening to the mixed song to hear if there are any small things that need fixing. For example, perhaps the overall timbre of the tune needs a little brightening or some control of the dynamic range.

Another basic role of the mastering engineer is to make sure that all of the tracks on a single album sound about the same level – since you don’t want people sitting at home fiddling with the volume knob from tune to tune.

When the mastering engineer is done, and the various other people have approved the final product, then the recording is finished. All that is left to do is to send the master to a plant to be pressed as a CD – or uploaded to the iTunes server – or whatever.

In other words the Mastering Engineer is the last person to make decisions about how a recording should sound before you get it.

This is why, when I’m talking to visitors, I say that our goal at Bang & Olufsen is to build loudspeakers that perform so that you, in your listening room, hear what the mastering engineer heard – because the ultimate reference of how the recording should sound is what it sounded like in the mastering studio.

Appendices

What’s a producer?

The title of Recording Producer means different things for different projects. Sometimes, it’s the person with the money who hires everyone for the recording.

Sometimes (usually in a pop recording) it’s the person sitting in the control room next to the recording engineer who helps the band with the arrangement – suggesting where to put a guitar solo or where to add backup vocals. Some pop producers will even do good ol’ fashioned music arrangements.

A producer for a classical recording usually acts as an extra set of ears for the musicians through the recording process. This person will also sit with the recording engineer in the control room, following the score to ensure that all sections of the piece have been captured to the satisfaction of the performers. He or she may also make suggestions about overall musical issues like tempi, phrasing, interpretation and so on.

But what about film?

The basic procedure for film mixing is the same – however, the “mixing engineer” in a film world is called a “re-recording engineer”. The work is similar, but the name is changed.

So that’s a “Tonmeister”?

A tonmeister is a person who can act simultaneously as a Recording Engineer and a Recording Producer. It’s a person who has been trained to be equally competent in issues about music (typically, tonmeisters are also musicians), acoustics, electronics, as well as recording and studio techniques.

A good explanation of dither

A great site for beginning DSP geeks

B&O Tech: How loud are my headphones?

#24 in a series of articles about the technology behind Bang & Olufsen loudspeakers

As you may already be aware, Bang & Olufsen makes headphones: the BeoPlay H6 over-ear headphones and the BeoPlay H3 earbuds.

If you read some reviews of the H6 you’ll find some reviewers like them very much and say things like “…excellent clarity and weight, well-defined bass and a sense of openness and space unusual in closed-back headphones. The sound is rich, attractive and ever-so-easy to enjoy.” and “… by no means are these headphones designed only for those wanting a pounding bass-line and an exciting overall balance: as already mentioned the bass extension is impressive, but it’s matched with low-end definition and control that’s just as striking, while a smooth midband and airy, but sweet, treble complete the sonic picture.” (both quotes are from Gramophone Magazine’s April 2014 issue). However, some other reviewers say things like “My only objection to the H6s is their volume level is not quite as loud as I normally would expect.” (A review from an otherwise-satisfied customer on Amazon.com). And, of course, there are the people whose tastes have been influenced by the unfortunate trend of companies selling headphones with a significantly boosted low-frequency range, and who now believe that all headphones should behave like that. (I sometimes wonder if the same people believe that, if it doesn’t taste like a Big Mac, it’s not a good burger… I also wonder why they don’t know that it’s possible to turn up the bass on most playback devices… But I digress…)

For this week’s posting, I’ll just deal with the first “complaint” – how loud should a pair of headphones be able to play?

Part 1: Sensitivity

One of the characteristics of a pair of headphones, like a passive loudspeaker, is its sensitivity. This is basically a measurement of how efficient the headphones are at converting electrical energy into acoustical output (although you should be careful to not confuse “Sensitivity” with “Efficiency” – sensitivity is a measure of the sound pressure level or SPL output for the voltage at the input whereas efficiency is a measure of the SPL output for the power in milliwatts). The higher the sensitivity of the headphones, the louder they will play for the same input voltage.

So, if you have a pair of headphones that are not very sensitive, and you plug them into your smartphone playing a tune at full volume, it might be relatively quiet. By comparison, a pair of very sensitive headphones plugged into the same smartphone playing the same tune at the same volume might be painfully loud. For example, let’s look at the measured data for three not-very-randomly selected headphones at https://www.stereophile.com/content/innerfidelity-headphone-measurements.

| Brand | Model | Vrms to produce 90 dB SPL | dBV to produce 90 dB SPL |
| --- | --- | --- | --- |
| Sennheiser | HD600 | 0.230 | -12.77 |
| Beoplay | H6 | 0.044 | -27.13 |
| Etymotic | ER4PT | 0.03 | -30.46 |

If we do a little math, this means that, for the same input voltage, the Etymotics will be 3.3 dB louder than the H6s and the Sennheisers will be 14.4 dB quieter than the H6s. This is a very big difference. (Measured as a voltage ratio, the Etymotics are 7.7 times louder than the Sennheisers!)
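The “little math” here is just the conversion from volts to dBV (20 times the base-10 logarithm of the voltage relative to 1 V) and a subtraction. A minimal sketch, using only the three Vrms values from the table above (the function names are my own):

```python
from math import log10

# Vrms needed to produce 90 dB SPL, copied from the table above.
VRMS_FOR_90_DB_SPL = {
    "Sennheiser HD600": 0.230,
    "Beoplay H6": 0.044,
    "Etymotic ER4PT": 0.03,
}


def vrms_to_dbv(vrms):
    """Convert an RMS voltage to dB relative to 1 V RMS."""
    return 20 * log10(vrms)


def louder_by_db(headphone, reference):
    """How many dB louder 'headphone' plays than 'reference' at equal voltage.

    The headphone that needs less voltage for 90 dB SPL is the louder one.
    """
    return vrms_to_dbv(VRMS_FOR_90_DB_SPL[reference]) - vrms_to_dbv(
        VRMS_FOR_90_DB_SPL[headphone]
    )


for name, vrms in VRMS_FOR_90_DB_SPL.items():
    print(f"{name}: {vrms_to_dbv(vrms):.2f} dBV")

print(f"{louder_by_db('Etymotic ER4PT', 'Beoplay H6'):.1f} dB")
print(f"{louder_by_db('Beoplay H6', 'Sennheiser HD600'):.1f} dB")
print(f"{0.230 / 0.03:.1f} times")
```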

So, in other words, different headphones have different sensitivities. Some will be quieter than others – some will be louder.

Side note: If you want to compare different pairs of headphones for output level, you could either look them up at the stereophile.com site I mentioned above, or you could compare their data sheet specifications using the Sensitivity to Efficiency converter on this page.

The moral of this first part of the story is that, when someone says “these headphones are not very loud” – the question is “compared to what?”

Part 2: The Source

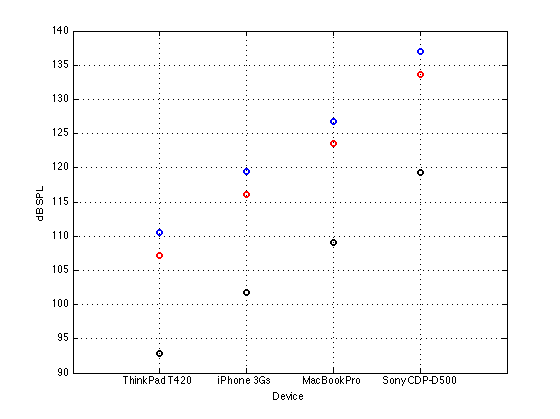

I guess it goes without saying, but if you want more out of your headphones, the easiest solution is to turn up the volume of your source. The question then is: how much output can your source deliver? This answer also varies greatly from product to product. For example, if I take four not-very-randomly selected measurements that I did myself, I can see the following maximum output levels for a 500 Hz, 0 dB FS sine tone at maximum volume sent to a 31 ohm load (a resistor pretending to be a pair of headphones):

| Brand | Model | Vrms | dBV |
| --- | --- | --- | --- |
| Lenovo | ThinkPad T420 | 0.317 | -9.98 |
| Apple | iPhone 3Ds | 0.89 | -1.01 |
| Apple | MacBook Pro | 2.085 | +6.38 |
| Sony | CDP-D500 | 6.69 | +16.51 |

In other words, the Sony is more than 26 dB (or 21 times) louder than the ThinkPad, if we’re just measuring voltage. This is a very big difference.

So, as you can see, turning the volume all the way up to 11 on different products results in very different output levels. This is even true if you compare iPod Nanos of different generations, for example – no two products are the same.

The moral of the story here is: if your headphones aren’t loud enough, it might not be the headphones’ fault.
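Putting Parts 1 and 2 together gives a rough idea of how much the combination matters. The sketch below is a back-of-the-envelope estimate only: it assumes that everything is linear, that the source delivers the same voltage into the real headphones as it did into the 31 ohm resistor, and that the headphones can actually survive the voltage. None of those assumptions is guaranteed, so treat the results as an illustration rather than as a measurement. The function name is hypothetical.

```python
from math import log10


def estimated_max_spl(source_vrms, headphone_vrms_for_90_db):
    """Rough SPL estimate: 90 dB SPL plus the extra voltage gain in dB."""
    return 90.0 + 20 * log10(source_vrms / headphone_vrms_for_90_db)


# Values copied from the two tables above.
thinkpad_vrms = 0.317
sony_vrms = 6.69
hd600_vrms_for_90 = 0.230
h6_vrms_for_90 = 0.044

print(f"ThinkPad + HD600: {estimated_max_spl(thinkpad_vrms, hd600_vrms_for_90):.1f} dB SPL")
print(f"ThinkPad + H6:    {estimated_max_spl(thinkpad_vrms, h6_vrms_for_90):.1f} dB SPL")

# The difference between the two sources, in dB and as a voltage ratio.
print(f"{20 * log10(sony_vrms / thinkpad_vrms):.1f} dB, {sony_vrms / thinkpad_vrms:.0f} times")
```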

Part 3: The Details, French Law, and How to Cheat

So much for the obvious things – now we are going to get a little ugly.

Let’s begin the ugliness with a little re-hashing of a previous posting. As I talked about in this posting, your ears behave differently at different listening levels. More specifically, you don’t hear bass and treble as well when the signal is quiet. The louder it gets, the flatter your “frequency response” becomes. This means that, when acoustical consultants are making measurements of quiet things, they usually have to make the microphone signal as “bad” as your hearing at low levels. For example, when you’re measuring air conditioning noise in an office space, you want to make your microphone less sensitive to low frequencies, otherwise you’ll get a reading of a high noise level when you can’t actually hear anything. In order to do this, we use something called a “weighting filter” which is an attempt to simulate your frequency response. There are many different weighting curves – but the one we’ll talk about in this posting is an “A-weighting” curve. This is a filter that attenuates the low and high frequencies and has a small boost in the mid-band – just like you do at quiet listening levels. The magnitude response of that curve is shown below in Figure 1. At higher levels (like measuring the noise level at the end of a runway while a plane is taking off over your head), you might want to use a different weighting curve like a “C-weighting” filter – or none at all.
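If you would like to plot an A-weighting curve yourself, the magnitude response can be calculated from the commonly published analogue formula, normalised to 0 dB at 1 kHz. The sketch below uses that formula; the function name is my own, and it calculates only the magnitude response, not a filter that you could run audio through.

```python
from math import log10, sqrt


def a_weighting_db(frequency_hz):
    """Magnitude of the A-weighting curve in dB (0 dB at 1 kHz)."""
    f2 = frequency_hz ** 2
    numerator = (12194.0 ** 2) * f2 ** 2
    denominator = (
        (f2 + 20.6 ** 2)
        * sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
        * (f2 + 12194.0 ** 2)
    )
    # The +2.00 dB offset normalises the curve to 0 dB at 1 kHz.
    return 20 * log10(numerator / denominator) + 2.00


for frequency in (31.5, 63, 125, 250, 500, 1000, 2000, 4000, 8000, 16000):
    print(f"{frequency:>7} Hz: {a_weighting_db(frequency):+6.1f} dB")
```

The printed values show the behaviour described above: a large attenuation in the bass, a smaller one in the high treble, and a slight boost in the region of a few kHz.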

So, let’s say that you get enough money on Kickstarter to create the Fly-by-Night Headphone Company and you’re going to make a flagship pair of headphones that will take the world by storm. You do a little research and you start coming across something called “BS EN 50332-1” and “BS EN 50332-2”. Hmmmm… what are these? They’re international standards that define how to measure how loudly a pair of headphones plays. The procedure goes something like this:

- get some pink noise

- filter it to reduce the bass and the treble so that it has a spectrum that is more like music (the actual filter used for this is quite specific)

- reduce its crest factor so your measurement doesn’t jump around so much (this basically just gets rid of the peaks in the signal)

- do a quick check to make sure that, by limiting the crest factor, you haven’t changed the spectrum beyond the acceptable limits of the test procedure

- play the signal through the headphones and measure the sound pressure level using a dummy head

- apply an A-weighting to the measurement

- calculate how loud it is (averaged over time, just to be sure)
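In case the term “crest factor” in the third step is unfamiliar: it is the ratio of the peak level of a signal to its RMS level, usually expressed in dB. The sketch below shows how it can be calculated, and how chopping off the peaks reduces it. The hard clipping used here is only my own illustration of “getting rid of the peaks”; the standard has its own specific requirements for the test signal, which are not reproduced here.

```python
from math import log10, pi, sin, sqrt


def crest_factor_db(samples):
    """Ratio of peak level to RMS level, in dB."""
    peak = max(abs(s) for s in samples)
    rms = sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * log10(peak / rms)


def hard_clip(samples, ceiling):
    """Limit every sample to the range -ceiling..+ceiling (illustration only)."""
    return [max(-ceiling, min(ceiling, s)) for s in samples]


# One full period of a sine wave: crest factor of about 3 dB.
sine = [sin(2 * pi * n / 1000) for n in range(1000)]
clipped = hard_clip(sine, 0.5)

print(f"sine:    {crest_factor_db(sine):.2f} dB")
print(f"clipped: {crest_factor_db(clipped):.2f} dB")
```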

So, now you know how loud your headphones can play using a standard measurement procedure. Then you find out that, according to another international standard called EN 60065 or EN 60950-1 there are maximum limits to what you’re permitted to legally sell… in France… for now… (Okay, okay, these are European standards, but Europe has more than one country in it, so I think that I can safely call them international…)

So, you make your headphones, you make them sound like you want them to sound (I’ll talk about the details of this in a future posting), and then you test them (or have them tested) to see if they’re legal in France. If not (in other words, if they’re too sensitive), then you’ll have to tweak the sensitivity accordingly.

Okay – that’s what you have to do – but let’s look at that procedure a little more carefully.

Step 1 was to get some pink noise. This is nothing special – you can get or make pink noise pretty easily.

Step 2 was to filter the noise so that its spectrum ostensibly better matches the average spectrum of all recorded and transmitted music and speech in the world. The details of this filter are in another international standard called IEC 60268-1. The people who wrote this step mean well – there’s no point in testing your headphones with frequencies that are outside the range of anything you’ll ever hear in them. However, this means that there is probably some track somewhere that includes something that is not represented by the spectral characteristics of the test signal we’re using here. For example, Figure 2, below, shows the spectral curve of the test signal that you are supposed to send to the headphones for the test.

Compare that to Figure 3, which shows an analysis of a popular Lady Gaga tune that I use as part of my collection of tunes to make a woofer unhappy. This is a commercially-available track that has not been modified in any way.

As you can see, there is more energy in the music example than there is in the test signal around the 30 – 60 Hz octave – particularly noticeable due to the relative “hole” in the response that ranges between about 70 and 700 Hz.

Of course, if we took LOTS of tunes and analysed them, and averaged their analyses, we’d find out that the IEC test signal shown in Figure 2 is actually not too bad. However, every tune is different from the average in some way.

So, the test signal used in the EN 50332 test is not going to push headphones as hard as some kinds of music (specifically, music that has a lot of bass content).

We’ll skip Step 3, Step 4, and Step 5.

Step 6 is a curiosity. We’re supposed to take the signal that we recorded coming out of the headphones and apply an A-weighting filter to it. Now, remember from above that an A-weighting filter reduces the low and high frequencies in an effort to simulate your bad hearing characteristics at quiet listening levels. However, what we’re measuring here is how loud the headphones can go. So, there is a bit of a contradiction between the detail of the procedure and what it’s being used for. However, to be fair, many people mis-use A-weighting filters when they’re making noise measurements. In fact, you see A-weighted measurements all the time – regardless of the overall level of the noise that’s being measured. One possible reason for this is that people want to be able to compare the results from the loud measurements to the results from their quiet ones – so they apply the same weighting to both – but that’s just a guess.

Let’s, just for a second, consider the impact of combining Steps 2 and 6. Each of the filters in these two steps reduces the sensitivity of the test to the low and high frequency behaviour of the headphones. If we combine their effects into a single curve, it looks like the one in Figure 4, below.

At this point, you may be asking “so what?” Here’s what.

Let’s take two different pairs of headphones and pretend that we measured them using the procedure I described above. The first pair of headphones (we’ll call it “Headphone A”) has a completely flat frequency response +/- < 0.000001 dB from 20 Hz to 20 kHz. The second pair of headphones has a bass boost such that anything below about 120 Hz has a 20 dB gain applied to it (we’ll call that “Headphone B”). The two unweighted measurements of these two simulated headphones are shown in Figure 5.

After filtering these measurements with the weighting curves from Steps 2 and 6 (above), the way our measurement system “hears” these headphone responses is slightly different – as you can see in Figure 6, below.

So, what happens when we measure the sound pressure level of the pink noise through these headphones?

Well, if we did the measurements without applying the two weighting curves, but just using good ol’ pink noise and no messin’ around, we’d see that Headphone B plays 13.1 dB louder than Headphone A (because of the 20 dB bass boost). However, if we apply the filters from Steps 2 and 6, the measured difference drops to only 0.46 dB.

This is interesting, since the standard measurement “thinks” that a 20 dB boost in the entire low frequency region corresponds to only a 0.46 dB increase in overall level.

Figure 7 shows the relationship between the bass boost applied below 120 Hz and the increase in overall level as measured using the EN 50332 standard.
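The comparison above can be roughly reproduced with a few lines of Python. This is only a sketch under some loud assumptions: the bass boost is an idealised step at exactly 120 Hz (not a real shelving filter), the noise is perfect pink noise from 20 Hz to 20 kHz, and only the A-weighting from Step 6 is modelled. The filter from Step 2 is left out entirely, so the numbers will not match the 13.1 dB and 0.46 dB quoted above. All function names are hypothetical.

```python
import math

def a_weighting_power(f_hz: float) -> float:
    """A-weighting as a linear power gain (standard analogue formula)."""
    f2 = f_hz ** 2
    num = (12194.0 ** 2) * f2 ** 2
    den = ((f2 + 20.6 ** 2)
           * math.sqrt((f2 + 107.7 ** 2) * (f2 + 737.9 ** 2))
           * (f2 + 12194.0 ** 2))
    gain_db = 20.0 * math.log10(num / den) + 2.00
    return 10.0 ** (gain_db / 10.0)

def overall_level_db(boost_db, weighted, corner_hz=120.0,
                     f_lo=20.0, f_hi=20000.0, steps=20000):
    """Overall level of pink noise through an idealised headphone (arbitrary dB reference)."""
    # Pink noise has equal power per unit of log-frequency, so we sum
    # the power gains over a log-spaced grid (midpoint rule).
    d_ln = math.log(f_hi / f_lo) / steps
    boost_power = 10.0 ** (boost_db / 10.0)
    total = 0.0
    for i in range(steps):
        f = f_lo * math.exp((i + 0.5) * d_ln)
        headphone = boost_power if f < corner_hz else 1.0
        weighting = a_weighting_power(f) if weighted else 1.0
        total += headphone * weighting * d_ln
    return 10.0 * math.log10(total)

def level_increase_db(boost_db, weighted):
    """How much louder the boosted headphone measures than the flat one."""
    return (overall_level_db(boost_db, weighted)
            - overall_level_db(0.0, weighted))

# Sweep the bass boost, in the spirit of Figure 7 (A-weighting only).
for boost in (0, 5, 10, 15, 20):
    print(boost,
          round(level_increase_db(boost, weighted=False), 2),
          round(level_increase_db(boost, weighted=True), 2))
```

With these simplifications, the unweighted increase for a 20 dB boost comes out at roughly 14 dB, and the A-weighted increase is a small fraction of that. So the general behaviour is the same as in the figures, even though the exact values depend on the real shape of the shelf and on the filter from Step 2.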

So, let’s go back to you, the CEO of the Fly-by-Night Headphone Company. You want to make your headphones louder, but you also need to obey the law in France. What’s a sneaky way to do this? Boost the bass! As you saw above, you can crank up the bass by 20 dB and the regulators will only see a 0.46 dB change in output level. You can totally get away with that one! Some people might complain that you have too much bass in your headphones, but hey – kids love bass. And plus, your competitors will get complaints about how quiet their headphones are compared to yours. All because people listening to children’s records at high listening levels hear much more bass than the EN 50332 measurement can!

Of course, one other way is to just ignore the law and make the headphones louder by increasing their sensitivity… but no one would do that because it’s illegal. In France.

Appendix 1: Listen to your Mother!

My mother always told me “Turn down that Walkman! You’re going to go deaf!” The question is “Was my mother right?” Of course, the answer is “yes” – if you listen to a loud enough sound for a long enough time, you will damage your hearing – and hearing damage, generally speaking, is permanent. The louder the sound, the less time it takes to cause the damage. The question then is “how loud and how long?” The answer is different for everyone; however, you can find some recommendations for what’s probably safe for you at sites that deal with occupational health and safety. For example, this site lists the Canadian recommendations for maximum exposure time limits to noise in the workplace. This site shows a graph for the US recommendations for the same thing – I’ve used the formula on that site to make the graph in Figure 8, below.
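This text does not say which formula the linked site uses, so the sketch below is an assumption: it implements the common "criterion level plus exchange rate" rule, with the NIOSH recommendation (8 hours at 85 dBA, halving for every 3 dB) as the default and the OSHA permissible limit (8 hours at 90 dBA, halving for every 5 dB) as an alternative. The function and parameter names are hypothetical.

```python
def max_exposure_hours(level_dba: float,
                       criterion_dba: float = 85.0,
                       exchange_rate_db: float = 3.0,
                       criterion_hours: float = 8.0) -> float:
    """Recommended maximum daily exposure time, in hours, at a given A-weighted level."""
    # Every `exchange_rate_db` above the criterion level halves the allowed time.
    return criterion_hours / 2.0 ** ((level_dba - criterion_dba) / exchange_rate_db)

# NIOSH-style defaults: 85 dBA criterion, 3 dB exchange rate.
for level in (85, 88, 94, 100):
    print(level, "dBA:", max_exposure_hours(level), "hours")

# OSHA-style limit: 90 dBA criterion, 5 dB exchange rate.
print(95, "dBA:", max_exposure_hours(95, criterion_dba=90.0, exchange_rate_db=5.0), "hours")
```

Because the allowed time halves every few decibels, it shrinks very quickly: with the NIOSH-style numbers, 100 dBA already brings it down to a quarter of an hour.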

How do these noise levels compare with what comes out of my headphones? Well, let’s go back to the numbers I gave in Part 1 and Part 2. If we take the measured maximum output levels of the 4 devices listed in Part 2, and calculate what the output level in dB SPL would be through the measured sensitivities of the headphones listed in Part 1 (assuming that everything else was linear and nothing distorted or clipped or became unhappy – and ignoring the fact that the headphones do not have the same impedance as the one I used to do the measurements of the 4 devices… and assuming that the measurements of the headphones are unweighted on that website), then the maximum output levels you can get from those devices are as shown in Figure 9.
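Under the "everything is linear" assumption, that calculation is just a level shift. Here is a minimal sketch, assuming the headphone sensitivity is given in dB SPL at some reference voltage. The numbers from Part 1 and Part 2 are not repeated here, so the values in the example are made up, as are the function and parameter names.

```python
import math

def spl_at_voltage(sensitivity_db_spl: float,
                   v_out_rms: float,
                   v_ref_rms: float = 1.0) -> float:
    """Predicted level in dB SPL, assuming a perfectly linear headphone.

    sensitivity_db_spl: level produced at v_ref_rms (hypothetical spec).
    v_out_rms: maximum RMS output voltage of the player.
    """
    # Doubling the voltage adds about 6 dB to the level.
    return sensitivity_db_spl + 20.0 * math.log10(v_out_rms / v_ref_rms)

# Made-up example: a headphone that gives 100 dB SPL at 1 V RMS,
# driven by a player that can deliver 2 V RMS.
print(round(spl_at_voltage(100.0, 2.0), 1), "dB SPL")
```

This ignores impedance mismatches, clipping and distortion, which is exactly the list of things being ignored in the paragraph above.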

So, if you take the calculations shown in Figure 9 and compare them to the recommendations shown in Figure 8, then you might reach the conclusion that, if you set your volume to maximum (and your tune is full-band pink noise mastered to a constant level of 0 dB FS, and we do a small correction for the A-weighting based on the assumption that the 90 dB SPL headphone measurements listed above are unweighted), then the maximum recommended time that you should listen to your music, according to the federal government in the USA, is as shown in Figure 10.

So, if I only listen to full-bandwidth pink noise at 0 dB FS at maximum volume on my MacBook Pro over my BeoPlay H6’s, then the American government thinks that after 0.1486 of a second, I am damaging my hearing.

It seems that my mother was right.

Appendix 2: Why does France care about how loud my headphones are?

This is an interesting question, the answer to which makes sense to some people, and doesn’t make any sense at all to other people – with a likely correlation with your political beliefs and your allegiance to the Destruction of the Tea in Boston. The best answer I’ve read was discussed in this forum where one poster very astutely pointed out that, in France, the state pays for your medical care. So, France has a right to prevent the French from making themselves go deaf by listening to this week’s top hit on Spotify at (literally) deafening levels. If you live in a place where you have to pay for your own medical care, then you have the right to self-harm and induce your own hearing impairment in order to appreciate the subtle details buried within the latest hip-hop remix of Mr. Achy-Breaky Heart’s daughter “singing” Wrecking Ball while you’re riding on a bus. In France, you don’t.

Appendix 3: Additional Reading

Rohde & Schwarz’s PDF manual for running the EN 50332 test on their equipment