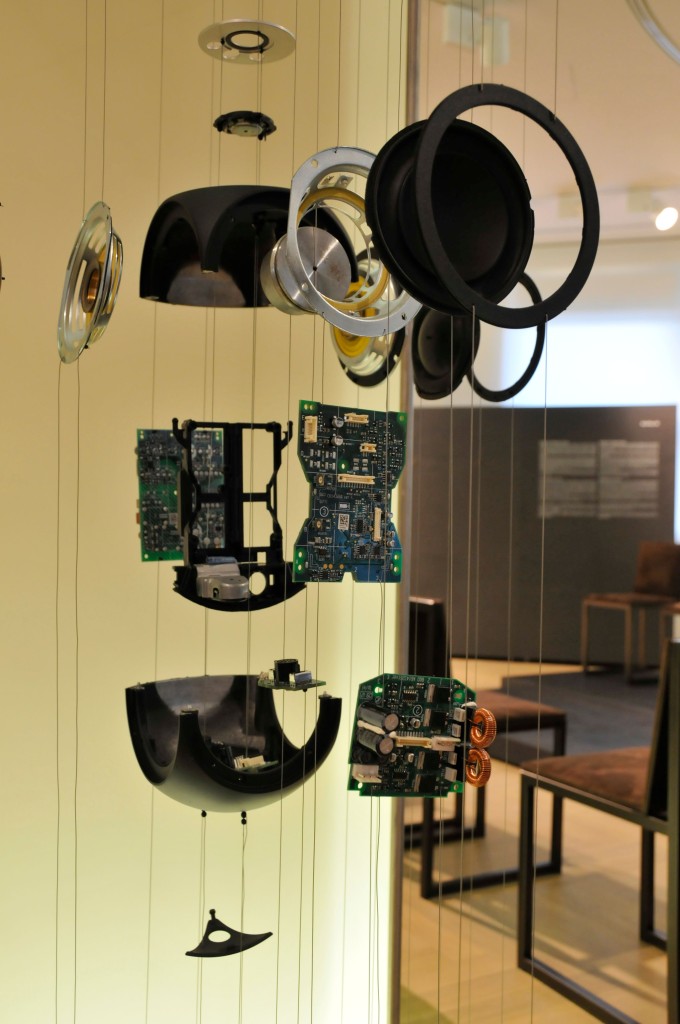

#10 in a series of articles about the technology behind Bang & Olufsen loudspeakers

One question that I am occasionally asked is why the BeoVision 11 is not able to decode audio signals encoded in Dolby TrueHD and DTS-HD Master Audio. I am not able to answer this question. However, I can discuss what the implications are with respect to audio and, more specifically, audio quality in a playback system.

Before we start this discussion, let’s get a couple of terms defined:

- LPCM: Linear Pulse Code Modulation. This is what most people call “uncompressed digital audio”. It’s the way digital music is stored on a CD, for example. It’s also the way digital audio is encoded to be able to send it around a computer or digital signal processor and do things like filter it, mix it, or just change the volume. So, at some point, in any system that has any digital audio signals anywhere in it (even if it’s just to change the volume, for example), it will be sending the signals around as LPCM-encoded audio.

- CODEC: COmpression-DECompression. This is a way of encoding the LPCM audio signal so that it takes up less space (or less bandwidth). This is the audio equivalent of converting something to a “ZIP” file – sort of. Some CODECs are “lossless” – meaning that, if you take a signal, compress it and decompress it, you get back everything you put in – a bit-for-bit match of the original. (If you didn’t, it wouldn’t be “lossless”.) Other CODECs are “lossy” – meaning that, when you compress the signal, some stuff is thrown away (this is why audio professionals call lossy CODECs like MP3 “data reduction” instead of “audio compression”). Hopefully, the stuff that’s thrown away is stuff you can’t hear – but that debate is best left out of this discussion.

- Bitstream: Some Blu-ray players allow you to choose whether to send the audio data that’s on the disc directly out of the digital output, unchanged, OR to decode the audio data to LPCM before sending it out. Typically, this shows up in the menus on the player as a choice between sending “bitstream” or “LPCM”. So, for the purposes of this article, I’ll use these two terms as meaning those two things. However, if we’re going to be accurate, this is an incorrect definition, since an LPCM signal is a stream of bits, and therefore is also a bitstream. But now I’m being purposely punctilious…
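To make the LPCM and CODEC definitions a little more concrete, here is a minimal Python sketch. It makes a short sine wave, stores it as 16-bit LPCM, and then compares a “lossless” round trip with a “lossy” one. Please note the assumptions: zlib (which uses the same DEFLATE compression as most ZIP files) is standing in for a lossless audio CODEC, and simply throwing away the lowest 8 bits of each sample is standing in for a lossy one. Neither is how Dolby TrueHD, DTS-HD Master Audio or MP3 actually work, and the 48 kHz / 16-bit format is chosen only for illustration.

```python
import math
import struct
import zlib

SAMPLE_RATE = 48000  # assumption: 48 kHz sampling rate
BIT_DEPTH = 16       # assumption: 16-bit words, as on a CD


def make_lpcm_sine(freq_hz, num_samples, amplitude=0.5):
    """Sample a sine wave and quantise each sample to a signed 16-bit integer (LPCM)."""
    full_scale = 2 ** (BIT_DEPTH - 1) - 1  # 32767
    return [round(amplitude * full_scale * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE))
            for n in range(num_samples)]


def to_bytes(samples):
    """Pack LPCM samples as little-endian signed 16-bit integers."""
    return struct.pack("<%dh" % len(samples), *samples)


def from_bytes(data):
    """Unpack little-endian signed 16-bit integers back into a list of samples."""
    return list(struct.unpack("<%dh" % (len(data) // 2), data))


def lossless_round_trip(samples):
    """Stand-in for a lossless CODEC: compress with zlib, then decompress."""
    compressed = zlib.compress(to_bytes(samples))
    return from_bytes(zlib.decompress(compressed)), len(compressed)


def lossy_round_trip(samples, keep_bits=8):
    """Stand-in for a lossy CODEC: throw away the least significant bits of each sample."""
    shift = BIT_DEPTH - keep_bits
    return [(s >> shift) << shift for s in samples]


pcm = make_lpcm_sine(1000, 4800)       # 0.1 s of a 1 kHz tone
restored, compressed_size = lossless_round_trip(pcm)
print(restored == pcm)                 # True: a bit-for-bit match
print(lossy_round_trip(pcm) == pcm)    # False: some information was thrown away
```

The point is only the definition: after the lossless round trip, every sample is identical to what went in; after the lossy one, it is not.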

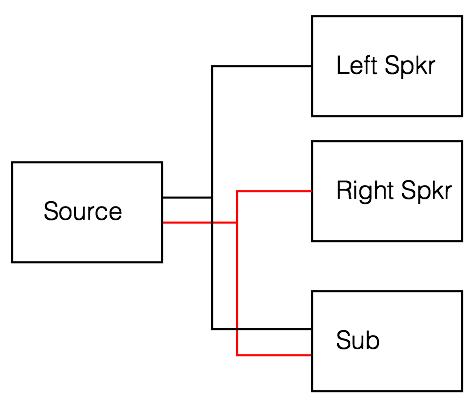

Let’s start this discussion by building a fairly standard home theatre system. We have:

- A Blu-ray player connected to…

- A BeoVision or BeoPlay television or a BeoSystem X OR some other brand’s AVR (Audio Video Receiver) or Surround Processor via an HDMI cable, which is connected to…

- A surround sound setup with 5 or 7 main loudspeakers (some of those might be inside the television) and maybe a subwoofer, all connected to the television using power-amplifier, line-level (or, for B&O customers, PowerLink) connections

Let’s also assume the following:

- The Blu-ray player is connected to the television’s input with an HDMI cable.

- Both the Blu-ray player and the television (or AVR or Surround Processor) have been certified by Dolby and DTS to decode all the encoding formats in which we’re interested for this discussion (note that this is not true of all devices in the real world – but we’ll use the assumption for now).

- The player does what you tell it to do. In other words, if you set its output to bitstream, it sends the stream of bits that are on the disc it’s playing – and nothing else.

The question is:

What format should I use to send the audio signals from the Blu-ray player to the television? Should I set my Blu-ray player to send a Bitstream to the television and decode the audio signals there? Or maybe it’s better to tell the Blu-ray player to decode the signals and send LPCM to the television.

The answer, unfortunately, is potentially complicated… but let’s look at what the implications are for the two options.

Audio Quality

If you do a search on the web, you’ll find all sorts of answers to this question. Some of the answers are correct, some are partly correct, and some are just plain wrong – actually, some aren’t even wrong.

If you go to a reasonably reputable source such as www.hdmi.org, you’ll read that “There is no inherent difference in quality between Dolby TrueHD/DTS-HD being sent over HDMI as decoded PCM vs. encoded bit stream“. This is, in fact, true (although it’s not the full story). It’s true if your audio is encoded in Dolby TrueHD, Dolby Digital Plus, Dolby Digital, DTS-HD Master Audio, DTS-HD High Resolution Audio, or DTS Digital Surround.

The basic reason for this is that Bang & Olufsen, like every other company that manufactures audio/video components that support these formats, must adhere to a very strict set of regulations that are set by Dolby and DTS in order to receive certification for our products. Also, before we can deliver a new product to our dealers, it must be thoroughly tested by both Dolby and DTS to ensure that we meet their exacting standards for their formats. In other words, a Dolby TrueHD decoder is a Dolby TrueHD decoder – regardless of what the box it’s in looks like.

This means that every device that is certified to decode Dolby TrueHD or DTS-HD Master Audio and convert that format to an uncompressed PCM audio signal (footnote: the “standard” format that is used by the internal Digital Signal Processing (DSP) of the device) does that decoding in the same way. This is true whether the device is a Blu-ray player, a DVD player, an AVR, or surround sound processor. Note that this does NOT, however, mean that a loudspeaker connected to any of these devices will give you the same sound quality – there are many, many other links in the audio chain that have an effect on sound. It merely means that the conversion from a compressed (either lossy or lossless) signal to an uncompressed signal will be identical, regardless of where it’s done.

So, whether:

- you decode in the player and send PCM to the television, OR

- you send the bitstream to the television and decode the signal there,

there is no difference in audio quality.

Now, you might tell me “But I went to a user forum and saw a posting from a person who did a test with his Acme Blu-ray player and his Flybynight AVR and he reports that he can EASILY hear the difference in quality in the audio when he changes from LPCM to Bitstream on his player.” This may, in fact, be true. However, the reason that there is an audible difference is not due to one of the devices having a better decoder or some very minor issue like jitter on the HDMI signal. There are at least two very basic and very simple explanations as to why this might happen.

- The first is a simple level difference. If you do an ABX test of a system where A is 1 dB louder than B, but the two are identical in every other aspect, your listening test subjects will have no difficulty identifying what X is. (An ABX test is one where you can listen to 3 things – an “A”, a “B” and an “X”. “X” is guaranteed to be identical to either “A” or “B” (which are guaranteed to be different). Your task is to identify whether “X” matches “A” or “B”.) So, if the Bitstream is just 1 dB (or less!) louder than the LPCM version, then you will hear a difference – although you might not notice that the difference is simply one of loudness. It’s likely that you will perceive the louder one as just being “better”.

- The second is that some AVRs can be set to apply different post-processing to LPCM signals than they do to signals decoded internally. So, it’s possible that there could be something as simple as a bass or treble difference between the two signals – but it could be something as complicated as a lot of spatialisation and reverberation with a big EQ curve – or anything in between. So, in this case, the difference between Bitstream and LPCM coming in from the player is caused by differences in the AVR’s post-processing.
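If you want to run this kind of comparison fairly, the first job is to match the levels. Here is a minimal Python sketch, assuming you have somehow captured the same passage through both paths (bitstream decoded in the AVR, and LPCM from the player) as time-aligned lists of floating-point samples; the captures and the sample values below are hypothetical. It uses a simple RMS measurement, which is a crude stand-in for a proper level-matching procedure. For reference, a 1 dB difference is an amplitude ratio of 10^(1/20), or about 1.122.

```python
import math


def rms(samples):
    """Root-mean-square level of a block of samples (must not be all zeros)."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def level_difference_db(a, b):
    """How many dB louder recording a is than recording b (negative if a is quieter)."""
    return 20 * math.log10(rms(a) / rms(b))


def apply_gain_db(samples, gain_db):
    """Scale samples by a gain given in dB (+1 dB multiplies by about 1.122)."""
    gain = 10 ** (gain_db / 20)
    return [s * gain for s in samples]


# Hypothetical captures of the same passage through the two signal paths
lpcm_path = [0.10, -0.25, 0.40, -0.05]
bitstream_path = apply_gain_db(lpcm_path, 1.0)   # pretend this path is 1 dB louder

diff = level_difference_db(bitstream_path, lpcm_path)
print(round(diff, 3))                            # 1.0
matched = apply_gain_db(bitstream_path, -diff)   # trim the louder path before the ABX test
```

Only once the two paths are matched like this does a remaining audible difference start to say something about the processing rather than the loudness.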

However, as I said, this is not the full story. Let’s look at more pieces of the puzzle to see what the differences are.

Audio during fast-forward / fast-rewind

Different players behave differently when you are fast-forwarding or fast-rewinding through a disc. If you are sending an encoded bitstream from the player, many devices will not deliver any audio when you are moving through your disc at a higher speed. This behaviour is different from brand to brand and model to model. However, in many cases, these same players WILL deliver audio if the output is set to PCM.

Listening Levels

In theory, there should be no difference in audio levels (i.e. how loud the signals are) caused by moving from a decoder inside your player to one inside your television. However, as I mentioned above, this world isn’t perfect – and one of the results of that imperfection is that there may, indeed, be a difference in level caused by switching from a bitstream to a PCM output from the Blu-ray player. If this does happen, then it’s likely because of some extra (or different) post-processing that is happening to the signal(s).

Mixing of Extra Audio Channels

One of the cool features of DVD and Blu-ray (that I, personally, never use) is that you can watch a movie whilst listening to the director (or someone else) talk about the movie – a feature usually called “Commentary” or something like that. This is fun, because there’s nothing that can make Iron Man 3 more interesting than hearing about how someone accidentally spilled a cup of coffee on their computer keyboard while they were rendering a 3D CGI version of an Audi falling into the ocean.

In order for this feature to work, the system has to take the audio signals for the movie and mix in an additional audio channel. However, some players are not able to mix these two sounds together if they’re outputting a bitstream. They can only do it if they’re decoding the movie AND the commentary separately, and then mixing the two LPCM streams. Of course, re-encoding the result just to send out a bitstream would be silly.

Another example of these extra sounds that may not make it through a bitstream output is the “clicks” or cute noises that are assigned to menu items.

So, with some players, if you want to hear these extra sounds, you’ll have to output a decoded LPCM stream.
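Here is a minimal Python sketch of the kind of mixing a player has to do, which only works once both the movie and the commentary have been decoded to LPCM. The channel names, the choice to put the commentary into the centre channel, and the simple clipping are all assumptions for illustration; this is not a description of how any particular player does it.

```python
INT16_MIN, INT16_MAX = -32768, 32767


def clip16(x):
    """Keep a mixed sample inside the signed 16-bit range instead of letting it wrap."""
    return max(INT16_MIN, min(INT16_MAX, x))


def mix_commentary(movie, commentary, target="C", commentary_gain=1.0, movie_gain=1.0):
    """Mix a mono commentary track into one channel of a decoded (LPCM) movie soundtrack.

    movie:      dict mapping a channel name to a list of 16-bit LPCM samples
    commentary: list of 16-bit LPCM samples, time-aligned with the movie
    target:     channel that receives the commentary (hypothetical choice: the centre)
    movie_gain: values below 1.0 turn the movie down under the commentary
    """
    mixed = {}
    for name, samples in movie.items():
        if name == target:
            mixed[name] = [clip16(round(m * movie_gain + c * commentary_gain))
                           for m, c in zip(samples, commentary)]
        else:
            mixed[name] = [clip16(round(m * movie_gain)) for m in samples]
    return mixed


movie = {"L": [100, 200], "C": [1000, 32000], "R": [0, 0]}
print(mix_commentary(movie, [500, 5000]))
# {'L': [100, 200], 'C': [1500, 32767], 'R': [0, 0]}
```

The addition happens on sample values, so a player that passes the encoded data straight through never has samples to add the commentary (or the menu clicks) to.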

Channel Allocation and Routing

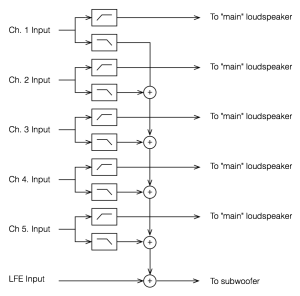

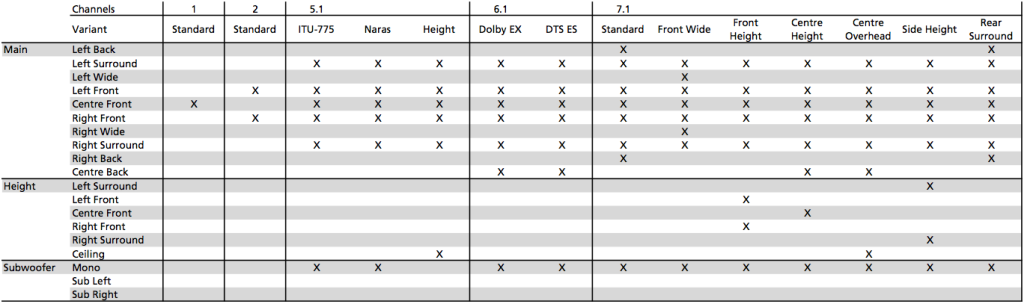

This is where things get a little debatable. Usually, when people say “5.1” they mean the following channel allocations (in no particular order):

- Left Front

- Centre Front

- Right Front

- Left Surround

- Right Surround

- LFE

And, when they say “7.1”, they mean:

- Left Front

- Centre Front

- Right Front

- Left Surround

- Right Surround

- Left Back

- Right Back

- LFE

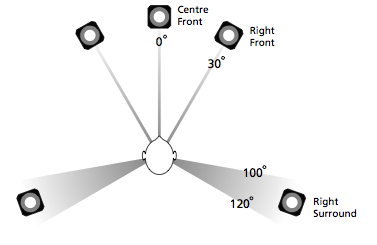

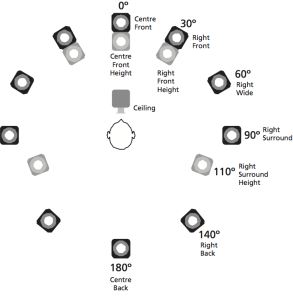

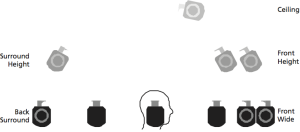

We’ll call these the “standard audio channel allocations” for this article. However, as I talked about in a previous article, “7.1” actually has seven “legal” variants that include non-standard audio channel allocations like front-wide and height channels.

Let’s assume, temporarily, that you have a disc that has some audio channels on it that should be routed to, say, a ceiling loudspeaker. The question is: “IF your surround processor has a ‘ceiling speaker’ output, and IF there is a ‘ceiling’ audio channel on the disc, can the signal get to the correct loudspeaker?” The answer is complicated…

Firstly, the question is “how can the disc ‘know’ that the audio channel is a ‘ceiling’ channel?” Well, both Dolby TrueHD and DTS-HD Master Audio (for example) have the option to include “metadata” (this is a fancy word meaning “data about the data” – or, in other words, “information about the audio signals”) that can tell the decoder something like “audio channel #6 should be sent to a ceiling loudspeaker”. Other CODECs (like Dolby Digital, for example) do not have the possibility to have non-standard audio channel allocations.
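As a rough illustration of what that metadata makes possible, here is a minimal Python sketch of a router that sends each decoded channel to an output based on a label. The channel labels are plain strings invented for this example (the two layouts follow the 5.1 and 7.1 lists above); they are not the actual metadata syntax of Dolby TrueHD or DTS-HD Master Audio, and the `fallback` option is a hypothetical design choice.

```python
# The "standard" allocations listed above (the order here is arbitrary).
LAYOUT_5_1 = ["Left Front", "Centre Front", "Right Front",
              "Left Surround", "Right Surround", "LFE"]
LAYOUT_7_1 = LAYOUT_5_1[:5] + ["Left Back", "Right Back", "LFE"]


def route_channels(channel_labels, available_outputs, fallback=None):
    """Decide which loudspeaker output each decoded channel should go to.

    channel_labels:    per-channel labels, as read from the stream's metadata
    available_outputs: labels of the outputs the surround processor actually has
    fallback:          optional map from an unsupported label to a substitute output
    Returns {channel index: output label, or None if the channel has nowhere to go}.
    """
    fallback = fallback or {}
    routing = {}
    for index, label in enumerate(channel_labels):
        if label in available_outputs:
            routing[index] = label
        elif fallback.get(label) in available_outputs:
            routing[index] = fallback[label]
        else:
            routing[index] = None
    return routing


# A hypothetical disc whose metadata says channel #6 (index 5) is a ceiling channel
disc = LAYOUT_5_1[:5] + ["Ceiling", "LFE"]
print(route_channels(disc, set(LAYOUT_5_1) | {"Ceiling"})[5])   # Ceiling
print(route_channels(disc, set(LAYOUT_5_1))[5])                 # None
```

Without the label, the router would have no way to know that channel #6 is anything other than an extra standard channel, which is exactly the problem for CODECs that cannot carry this kind of metadata.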

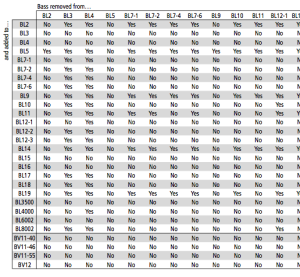

Secondly, the question is “if the player decodes the signal to LPCM, and its decoder knows that the channel should be routed to the ceiling, can it ‘tell’ the surround processor via the HDMI metadata where to route the signal?” The answer to this question depends on the version numbers of the HDMI transmitter in the player and the HDMI receiver in the surround processor. If you are using HDMI 1.3 or earlier, then you cannot have non-standard channel allocations with an LPCM signal. If you have HDMI 1.4 or higher (for both the transmitter and the receiver), you might be able to get the correct metadata across from device to device. (If you look here, you can see that, if your HDMI transmitter or receiver is version 1.2 or earlier, then you cannot send the Dolby or DTS lossless codecs at all – so sending a bitstream will not work for non-standard channel allocations either.)

So, the only way to guarantee that your complete system can support non-standard audio channel allocations (assuming that your surround processor has the ability to output them) is to send a bitstream from the player and decode at the end of the chain.
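The reasoning in the last two paragraphs boils down to a few rules of thumb, which can be written as a small Python function. This is only a sketch of the rules as stated in this article, not an implementation of the HDMI specification; the function name and the `(major, minor)` version tuples are assumptions for illustration, and “yes” still assumes that the surround processor can use the metadata and has the extra outputs.

```python
def nonstandard_allocation_possible(output_mode, tx_version, rx_version):
    """Rules of thumb from the text above (a sketch, not the HDMI specification).

    output_mode: "lpcm" (player decodes) or "bitstream" (player passes the disc's data through)
    tx_version:  HDMI version of the player's transmitter, as a (major, minor) tuple
    rx_version:  HDMI version of the surround processor's receiver, as a (major, minor) tuple
    Returns "yes", "maybe" or "no".
    """
    link = min(tx_version, rx_version)  # the older end of the HDMI link sets the limit
    if output_mode == "lpcm":
        # HDMI 1.3 or earlier: no non-standard allocations with LPCM.
        # HDMI 1.4 or higher at both ends: the metadata *might* get across.
        return "maybe" if link >= (1, 4) else "no"
    if output_mode == "bitstream":
        # HDMI 1.2 or earlier cannot carry the Dolby or DTS lossless codecs at all.
        # Otherwise the metadata travels inside the bitstream to the processor's decoder.
        return "yes" if link >= (1, 3) else "no"
    raise ValueError("output_mode must be 'lpcm' or 'bitstream'")


for mode in ("lpcm", "bitstream"):
    print(mode, nonstandard_allocation_possible(mode, (1, 4), (1, 3)))
# lpcm no
# bitstream yes
```

Taking the minimum of the two versions captures the idea that both ends of the link have to support a feature before it can be used.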

However, the question to ask after you’ve answered all of that is “how many commercially-available recordings include non-standard audio channel allocations?” The answer to this, as far as I’ve been able to figure out, is “none”. (If you know of any examples of this that prove me wrong, please leave a note in the “replies” – I’m looking for materials! But read the rest of this paragraph first…) Of course, there are SACDs where the LFE channel should be directed to a height channel – but SACDs don’t include metadata to tell the player about the routing. Dolby Pro Logic IIz and DTS Neo:X have height channel outputs, but they derive those height signals from the other channels, which is a different system from a CODEC with discrete output channels. There are some other formats like IMAX, Auro-3D, and Dolby Atmos that use height channels, but they’re not available in consumer media.

So, this, at least for now, is a solution without a problem.

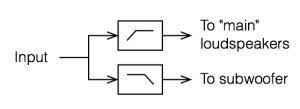

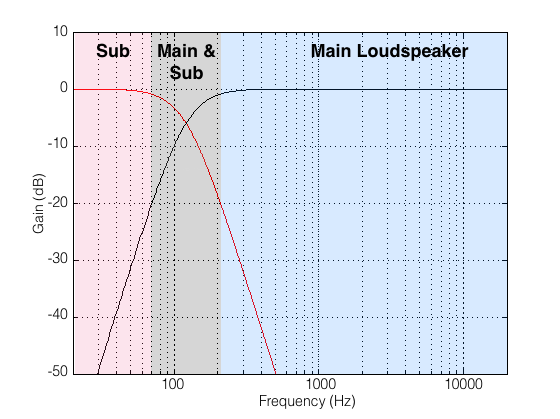

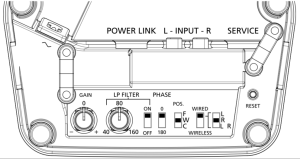

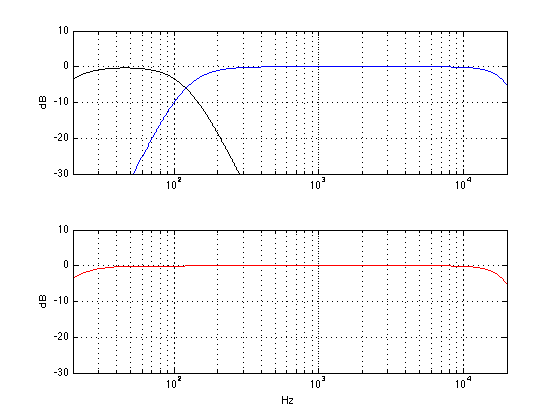

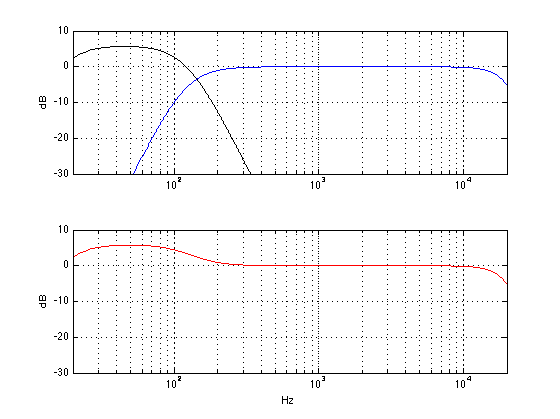

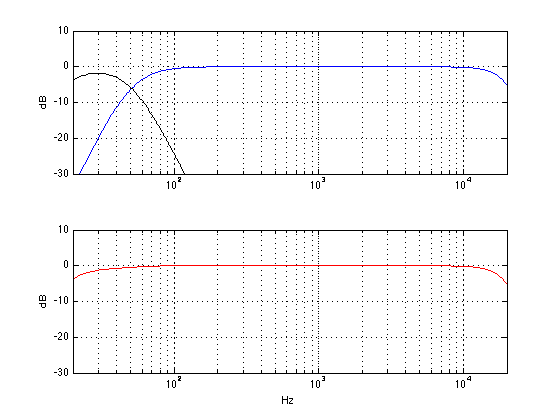

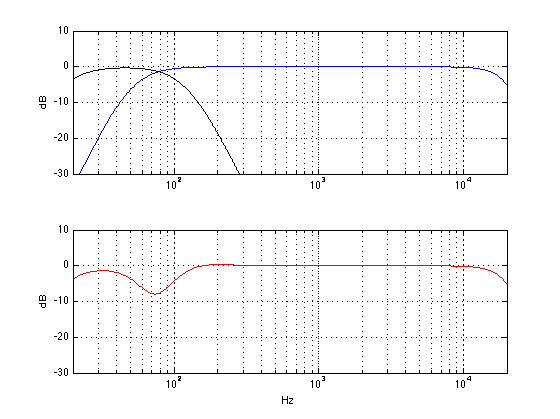

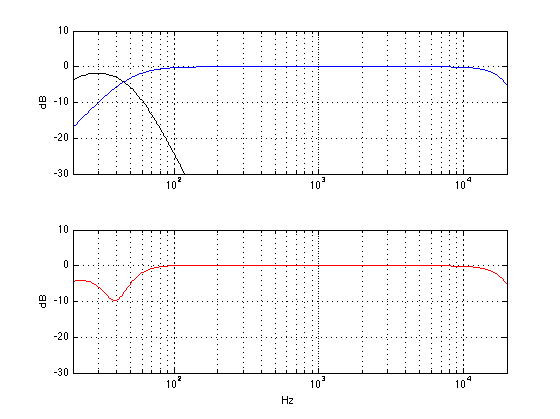

Bass Management

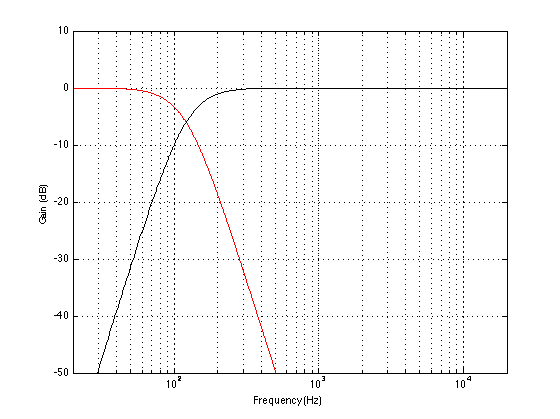

Some Blu-ray players (and even some good ol’ DVD players) include a bass management system that will filter the bass out of the main channels and add it to the LFE output.

IF your player can do this, then:

- it can only do it to a decoded signal – so the bass management in the player will not work with a bitstream output

- you should be sure that it is doing it (if you want it to do so) or that it is not doing it (if you don’t)
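For anyone curious about what “filter the bass out of the main channels and add it to the LFE output” means in practice, here is a deliberately simplified Python sketch. The 80 Hz crossover frequency, the 48 kHz sampling rate and the first-order filters are assumptions for illustration; real bass management systems typically use steeper crossover filters and also handle the playback gain of the LFE channel itself, both of which are left out here. What it does show is that the process works on decoded samples, which is why it cannot happen on a bitstream output.

```python
import math


def one_pole_lowpass(samples, cutoff_hz, sample_rate):
    """First-order (6 dB/octave) low-pass filter."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    y = 0.0
    out = []
    for x in samples:
        y += a * (x - y)
        out.append(y)
    return out


def bass_manage(mains, lfe, cutoff_hz=80.0, sample_rate=48000):
    """Filter the bass out of each main channel and add it to the LFE/subwoofer feed.

    mains: dict mapping a channel name to a list of float samples
    lfe:   list of float samples for the LFE channel, same length as the mains
    Returns (high-passed main channels, subwoofer feed).
    """
    sub = list(lfe)
    highs = {}
    for name, samples in mains.items():
        low = one_pole_lowpass(samples, cutoff_hz, sample_rate)
        # Complementary high-pass: whatever the low-pass did not keep stays in the main channel
        highs[name] = [x - lo for x, lo in zip(samples, low)]
        sub = [s + lo for s, lo in zip(sub, low)]
    return highs, sub


# One second of a constant ("DC") signal: by the end it has all moved to the subwoofer feed
mains = {"L": [1.0] * 48000, "R": [0.5] * 48000}
highs, sub = bass_manage(mains, [0.0] * 48000)
print(round(highs["L"][-1], 6), round(sub[-1], 6))   # 0.0 1.5
```

Because the high-pass is built as “input minus low-pass”, nothing is lost: the main channels plus the subwoofer feed always add back up to the original signals plus the LFE channel.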

Latency

Some forum discussion groups have highlighted an issue with some specific players that exhibit lip-sync problems when they decode the signal to LPCM internally. The few comments about this that I have read in these fora indicate that switching the player’s output to bitstream appears to fix this problem. However, this should be considered a “work-around” for a bug in the player’s software. There should be no difference in synchronisation of sound and picture whether you’re decoding in the player or the surround processor. If there is a lip-sync problem, then the people who made the player haven’t done their jobs properly.

Conclusion

So, to wrap up: the big things to remember here are that,

- in any audio playback system, audio that is stored (and/or transmitted) in a CODEC has to be converted to LPCM somewhere

- there is no difference in decoder quality – in other words, a Dolby (or DTS) decoder in one device won’t be better than a Dolby (or DTS) decoder in another device. If it were, then Dolby (or DTS) would not have approved one of them

- there are some issues not related to audio quality that are affected by the location of the decoder in an audio chain, but these are typically very small (or even non-existent) issues for almost all consumers

Finally, I didn’t talk about jitter – which is a term that a lot of people throw around as being one of those evils in every audio system where you can place all of your blame for everything that is bad about the system. This puts it in a league with things like “society“, “television“, “Canada“, and “The Boogie“. I’ll talk about that sometime in the future.

One last thing

Everything I’ve said above is only true for an HDMI connection. If you have an S/P-DIF or TOSLINK connection, then the discussion will be quite different, since you cannot have more than 2 channels of LPCM-encoded audio on those connections – so the only way to get multichannel audio through them is to use a lossy CODEC. So, if you have a multichannel source and you decode to LPCM before sending it out on S/P-DIF or TOSLINK, you will wind up with a 2.0 channel downmix.
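For the curious, here is a minimal Python sketch of what such a 2.0 downmix of a 5.1 signal can look like. The coefficients (centre and surrounds added at -3 dB, LFE discarded) are of the kind given in ITU-R BS.775, but they are an assumption here: a real decoder may use downmix levels carried in the stream’s metadata instead, and many players also scale or limit the result, which this sketch does not do.

```python
import math

MINUS_3_DB = 1 / math.sqrt(2)  # about 0.707


def downmix_5_1_to_stereo(ch, centre_gain=MINUS_3_DB, surround_gain=MINUS_3_DB):
    """Fold a decoded 5.1 signal down to 2.0, discarding the LFE channel.

    ch: dict with keys "L", "R", "C", "Ls", "Rs" and "LFE", each a list of float samples.
    Returns (left, right). No normalisation is applied, so the result can exceed full scale.
    """
    left = [fl + centre_gain * c + surround_gain * sl
            for fl, c, sl in zip(ch["L"], ch["C"], ch["Ls"])]
    right = [fr + centre_gain * c + surround_gain * sr
             for fr, c, sr in zip(ch["R"], ch["C"], ch["Rs"])]
    return left, right


five_one = {"L": [0.2], "R": [0.0], "C": [0.4], "Ls": [0.0], "Rs": [0.3], "LFE": [0.9]}
left, right = downmix_5_1_to_stereo(five_one)
```

Once the six channels have been folded into two like this, there is no way to pull them apart again at the other end of the cable.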