#17 in a series of articles about the technology behind Bang & Olufsen loudspeakers

This week, instead of talking about what is inside the loudspeakers, let’s talk about what I listen for when sound is coming out of them. Specifically, let’s talk about one spatial aspect of the mix – where instruments and voices are located in two-dimensional space. (This will be a short posting this week, because it includes homework…)

Step 1: Go out and buy a copy of Jennifer Warnes’s album called “Famous Blue Raincoat: The Songs of Leonard Cohen” and play track 2 – “Bird on a Wire”.

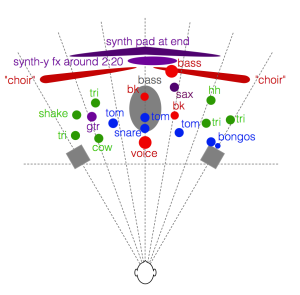

Step 2: Close your eyes and really concentrate on where the various voices and instruments are located in space relative to your loudspeakers. If you hear what I hear, you’ll hear something like what I’ve tried to represent on the map shown in the figure.

I’ve used some colour coding, just to help keep things straight:

- Voices are in red

- Drums are in blue

- Metallic instruments (including cymbals) are in green

- Bass is in gray

- Synth and saxophone are in purple

Note that Jennifer sings her own backup vocals, so the “voice”, and the two “bk” (for backup – not Burger King) positions are all her. It also sounds like she’s singing in the “choir” on the left – but it’s hard for me to hear exactly where she is.

Whenever I’m listening to a pair of loudspeakers (or a car audio system, or the behaviour of an upmix algorithm) to determine the spatial properties, I use this map (which I normally keep in my head – not on paper…) to determine how things are behaving. The two big questions I’m trying to answer when considering a map like this revolve around the loudspeakers’ ability to deliver (1) the accuracy and (2) the precision I’m looking for. (Although many marketing claims use these words interchangeably, they do not mean the same thing.)

The question of accuracy is one of whether the instruments are located in the correct places, not only in terms of left and right, but also in terms of distance. For example, the tune starts with a hit on the centre tom-tom, followed immediately by the bigger tom-tom on the left of the mix. Firstly, if I have to point at that second, deeper-pitched tom-tom – which direction am I pointing in? Is it far enough left-of-centre, but not hard over in the left loudspeaker? (This will be determined by how well the loudspeakers’ signals are matched at the listening position, as well as the location of the listening position.) Secondly, how far away does it sound, relative to other sound sources in the mix? (This will be influenced primarily by the mix itself.) Finally, how far away does it sound from the listening position in the room? (This will be influenced not only by the mix, but by the directivity of the loudspeakers and the strength of sidewall reflections in the listening room. I talked about that in another blog posting once-upon-a-time.)

The question of precision can be thought of as a question of the size of the image. Is it a pin-point in space (both left/right and in distance)? Or is it a cloud – a fuzzy location with indistinct edges? Typically, this characteristic is determined by the mix (for example, whether the panning was done using amplitude or delay differences between the two audio channels), but also by how well the loudspeakers are matched across the frequency range and by their directivity. For example, one of the experiments that we did here at B&O some years ago showed that a difference as small as 3 degrees in the phase response matching of a pair of loudspeakers could cause a centrally-located phantom image to lose precision and start to become fuzzy.
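To make the two panning approaches mentioned above a little more concrete, here is a minimal Python sketch. It is only an illustration, not a description of how this album was mixed: the function names, the constant-power pan law, and the -1 to +1 position scale are all assumptions chosen for the example.

```python
import math

def amplitude_pan(mono, position):
    """Amplitude panning with a constant-power law (an assumed pan law).

    position runs from -1.0 (hard left) to +1.0 (hard right).
    Both channels carry the same signal at the same time; only the gains differ.
    """
    angle = (position + 1.0) * math.pi / 4.0  # maps -1..+1 onto 0..pi/2
    gain_left = math.cos(angle)
    gain_right = math.sin(angle)
    left = [gain_left * sample for sample in mono]
    right = [gain_right * sample for sample in mono]
    return left, right

def delay_pan(mono, delay_samples):
    """Delay panning: both channels have the same level, but one arrives later.

    A positive delay_samples delays the right channel, so the image is
    pulled towards the left (the earlier channel); negative does the opposite.
    """
    pad = [0.0] * abs(delay_samples)
    direct = list(mono) + pad    # undelayed channel, padded to equal length
    delayed = pad + list(mono)   # delayed channel
    if delay_samples >= 0:
        return direct, delayed   # left leads
    return delayed, direct       # right leads
```

With `amplitude_pan(signal, 0.0)` both gains are about 0.707, so the image sits in the centre, while `delay_pan(signal, 1)` leaves the levels equal and shifts the right channel one sample later.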

Some things I’ve left out of this map:

- The locations of the individual voices in the “choir”

- Extra cowbells at around 2:20

- L/R panned cabasa (or shaker?) at about 2:59

- Reverberation

Some additional notes:

- The triangles on the right side happen around 2:12 in the tune. The ones on the left come in much earlier in the track.

- The “synth-y fx around 2:20” might be a guitar with a weird modulation on it. I don’t want to get into an argument about exactly what instrument this is.

- I’ve only identified the location of the bass in the choir. There are other singers, of course…

You might note that I used the term “two-dimensional space” in the beginning of this posting. In my head, the two dimensions are (1) angle to the source and (2) distance to the source. I don’t think in X-Y Cartesian terms, but in polar terms.
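For anyone who does prefer X-Y coordinates, the conversion between the two views is short. The sketch below assumes a convention that is not stated in this posting: the listener sits at the origin, 0 degrees is straight ahead, and positive angles are to the right. The function names are made up for the example.

```python
import math

def polar_to_cartesian(angle_deg, distance):
    """Convert (angle, distance) as seen from the listening position to (x, y).

    Assumed convention: 0 degrees is straight ahead, positive angles are
    to the right, x is left/right and y is front/back.
    """
    theta = math.radians(angle_deg)
    x = distance * math.sin(theta)  # positive x is to the right
    y = distance * math.cos(theta)  # positive y is in front of the listener
    return x, y

def cartesian_to_polar(x, y):
    """Convert (x, y) back to (angle in degrees, distance)."""
    distance = math.hypot(x, y)
    angle_deg = math.degrees(math.atan2(x, y))  # atan2(x, y) so 0 is straight ahead
    return angle_deg, distance
```

As a usage example, a loudspeaker at +30 degrees and a (hypothetical) distance of 2 m lands at roughly x = 1.0 m and y = 1.73 m, and its partner at -30 degrees lands at x = -1.0 m with the same y.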

An important thing to mention before I wrap up is that this aspect of a loudspeaker’s performance (accuracy and precision of phantom imaging) is only one quality of many. Of course, if you’re not sitting in the sweet spot, none of this can be heard, so it doesn’t matter. Also, if your loudspeakers are not positioned “correctly” (at ±30 degrees from centre and equidistant from the listening position) then none of this can be heard, so it doesn’t matter. And so on and so on. The point I’m trying to make here is that phantom image representation is only one of the many things to listen for, not only in a recording but also when evaluating loudspeakers.