#74 in a series of articles about the technology behind Bang & Olufsen loudspeakers

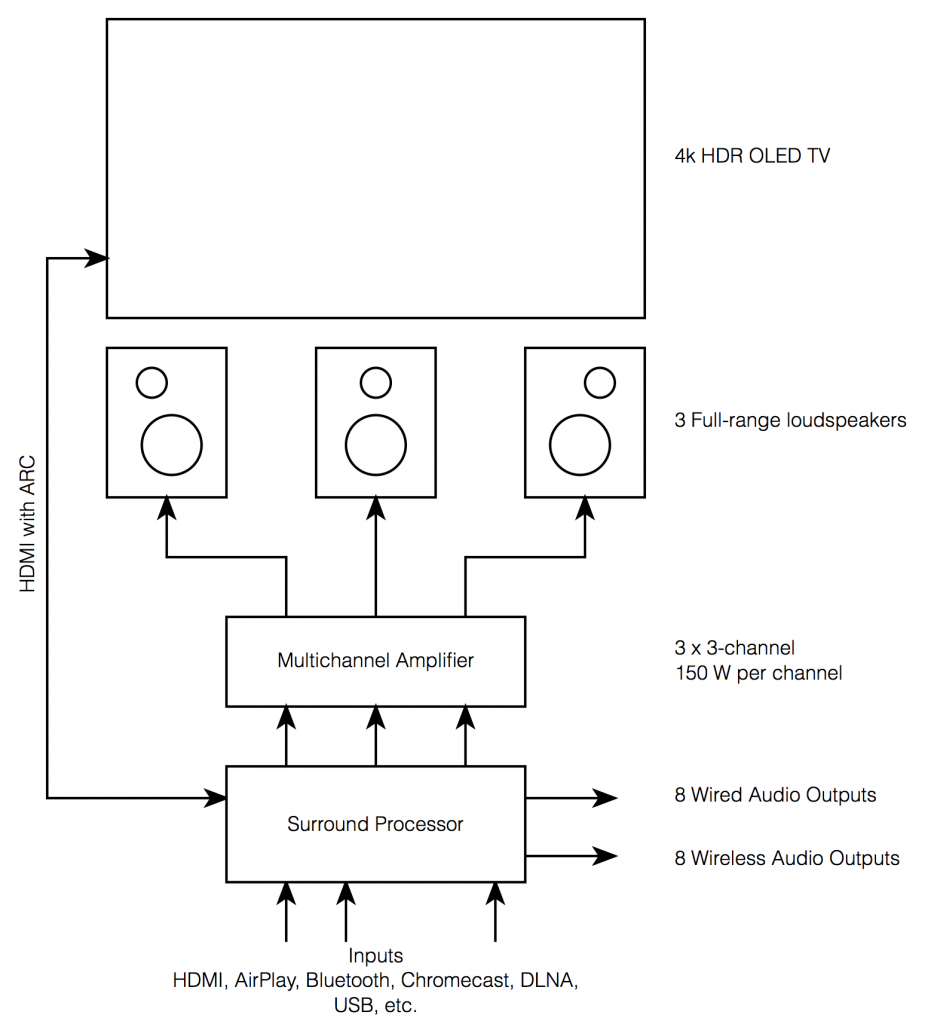

Bang & Olufsen recently released its latest television, the BeoVision Eclipse. If you look around the web for comments and reviews, one of the things you’ll come across is that many people are calling it a “soundbar”. That is only partly true, which is why B&O calls it a SoundCenter instead.

In order to explain the difference, let’s start by looking at what basic components you would need to buy in order to have the equivalent capabilities of the Eclipse.

- 4K HDR OLED screen

- Multichannel audio

- Surround processor + Three-channel amplifier with 150 watts per channel OR

- Audio-Video Receiver (AVR) with 150 watts per channel

- 19 discrete audio output channels

- 1- to 16.5- up/down mixing, dynamic with signal

- User-configurable dynamic output routing

- Intelligent Bass Management

- Three full-range loudspeakers

- DLNA, Streaming, and multiroom compatible

This is shown in the block diagram in Figure 1 – and it’s important to note that this is just an overview of the capabilities – not a thorough list.

I’m from the acoustics department, so I’m not going to talk about the video portion of the Eclipse – it’s best to stick with what I know…

Built-in loudspeakers

From the outside, the Eclipse obviously has 3 woofers, each driven by its own 100 W amplifier, as well as 2 full range drivers and a tweeter, each of which is individually powered by its own 50 W amplifier. Each of those 6 amplifiers is fed by its own Digital to Analogue Converter (or DAC).

The total result of this is a discrete 3-channel loudspeaker array (which some might label a “soundbar”) that is fully active, with all processing (such as crossovers, filtering, and ABL, as described in this posting) performed in the Digital Signal Processor (or DSP).

When it leaves the factory, those three channels are preset to act as the Left Front (Lf), Centre Front (Cf), and Right Front (Rf) audio channels. However, these can be changed by the user, as I’ll describe below.

External loudspeakers

The BeoVision Eclipse, like all other current BeoVision televisions, includes both wired and wireless outputs for connection to external loudspeakers, for customers who either want to have a larger multichannel system or wish to have the option to upgrade to one in the future.

The Eclipse has 8 wired outputs (on 4 Power Link connections – each of which has 2 discrete audio channels) and 8 wireless outputs (using Wireless Power Link).

This means that, in total, you can have up to 19 loudspeakers delivering signals in a large multichannel surround system (8 wired + 8 wireless + 3 internal). However, even if you have all of those loudspeakers connected, you don’t have to use all of them all of the time…
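The arithmetic behind that total is simple enough to write out. The short Python sketch below only restates the counts given above; the constant names are made up for illustration and do not come from any B&O software.

```python
# Counts taken from the text; the names are illustrative only.
POWER_LINK_CONNECTIONS = 4
CHANNELS_PER_POWER_LINK = 2
WIRELESS_OUTPUTS = 8
INTERNAL_CHANNELS = 3

# Each Power Link connection carries 2 discrete audio channels.
wired_outputs = POWER_LINK_CONNECTIONS * CHANNELS_PER_POWER_LINK
total_outputs = wired_outputs + WIRELESS_OUTPUTS + INTERNAL_CHANNELS

print(wired_outputs, total_outputs)  # 8 19
```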

Audio signal processing

There are many Surround Processors and Audio-Video Receivers (or AVRs) in the world. These have the primary job of receiving a signal (say, from an HDMI input) and decoding it, splitting it up into the video and audio outputs. The audio channels in the signal are then sent to the appropriate output. However, with almost all Surround Processors and AVRs, the output channel routing is fixed. In other words, the left surround output of the AVR always goes to the same loudspeaker, in the left surround position.

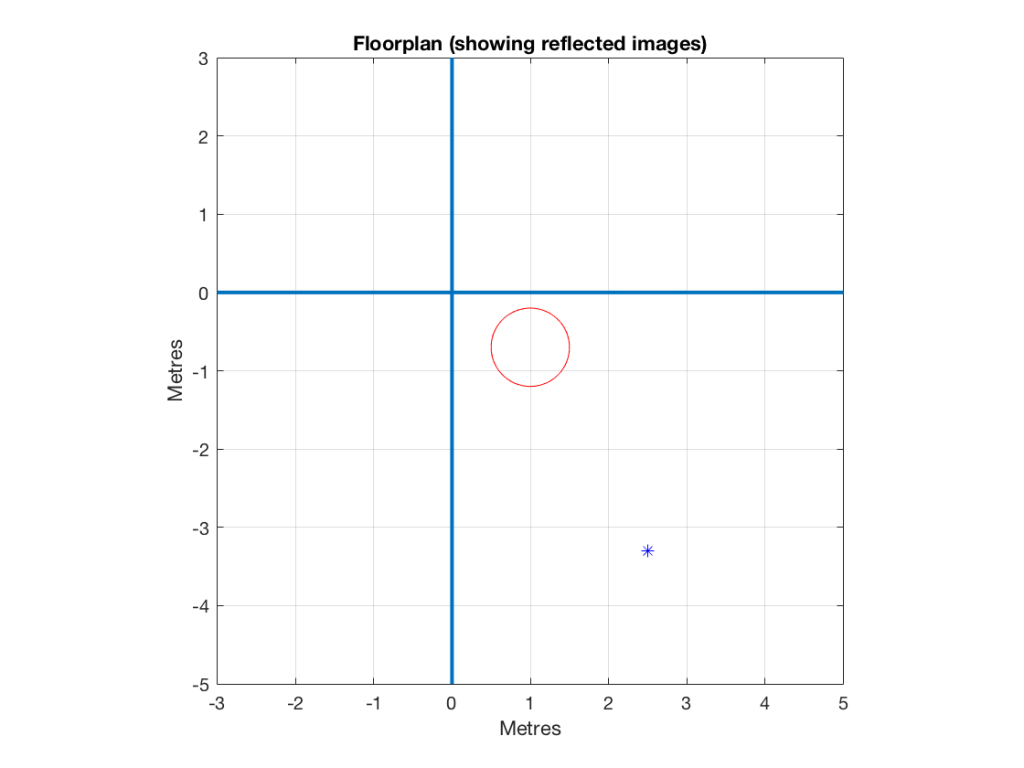

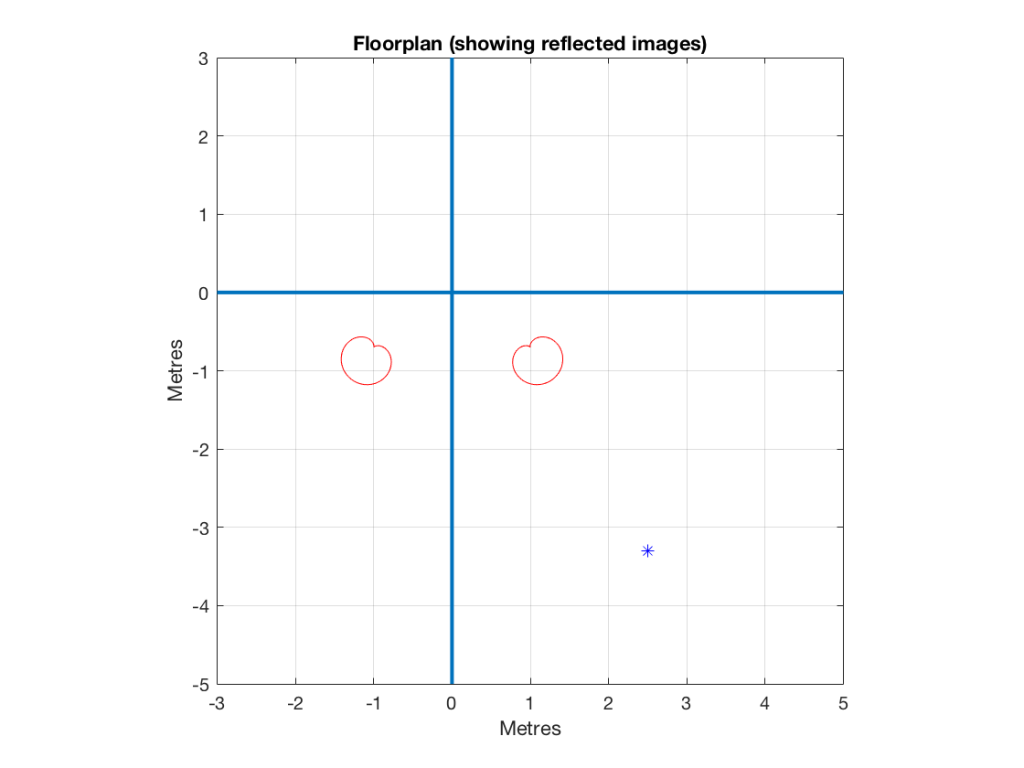

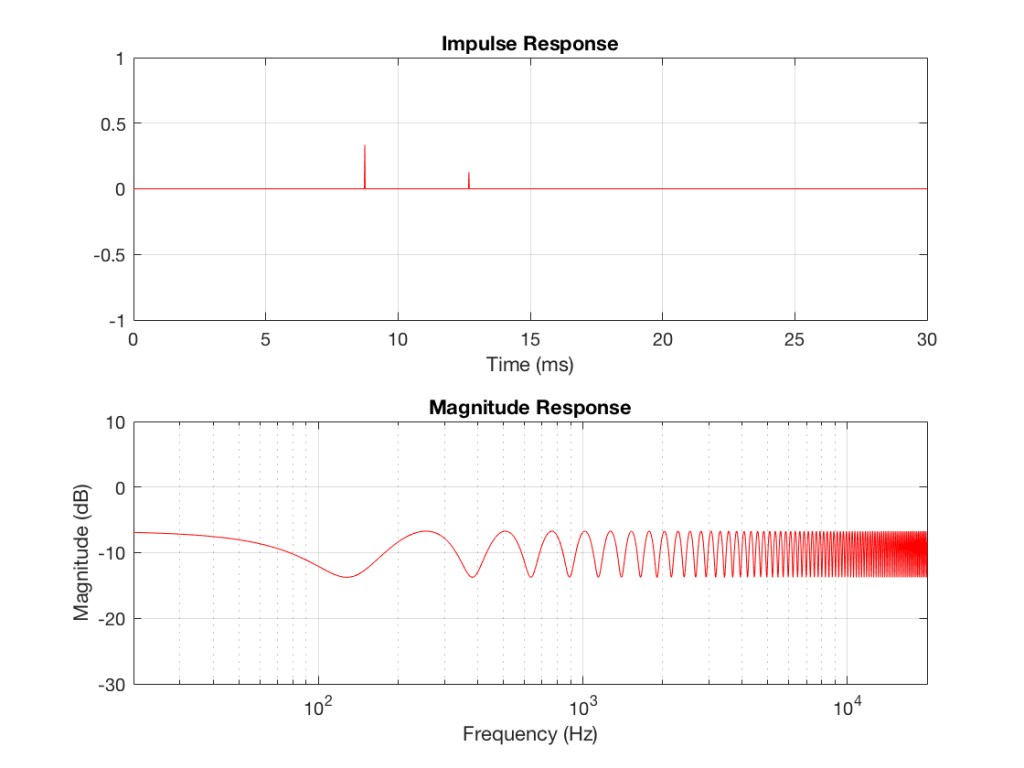

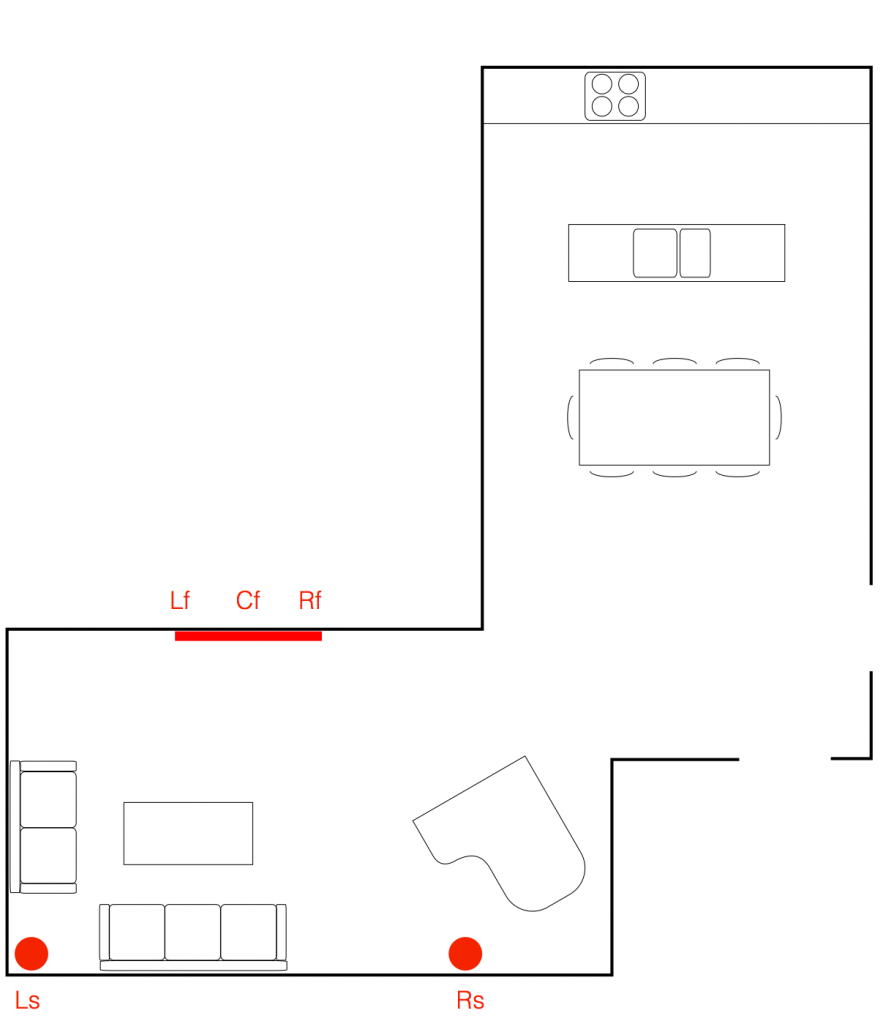

In a Bang & Olufsen television like the BeoVision Eclipse, this routing is not fixed. So, for example, if you connect two extra external loudspeakers, you might choose to use them as the Left Surround (Ls) and Right Surround (Rs) outputs, with the three internal loudspeakers providing the Lf, Cf, and Rf channels. This is shown in Figure 2.

This configuration would be saved as a “Speaker Group” and labelled as you wish (for example, “surround sound”). It can even be set as the default configuration for whichever inputs you choose (the Blu-ray player, for example).

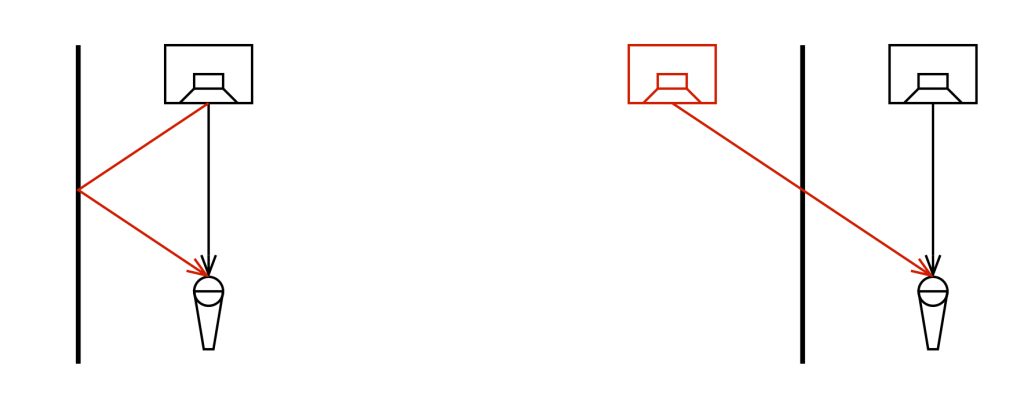

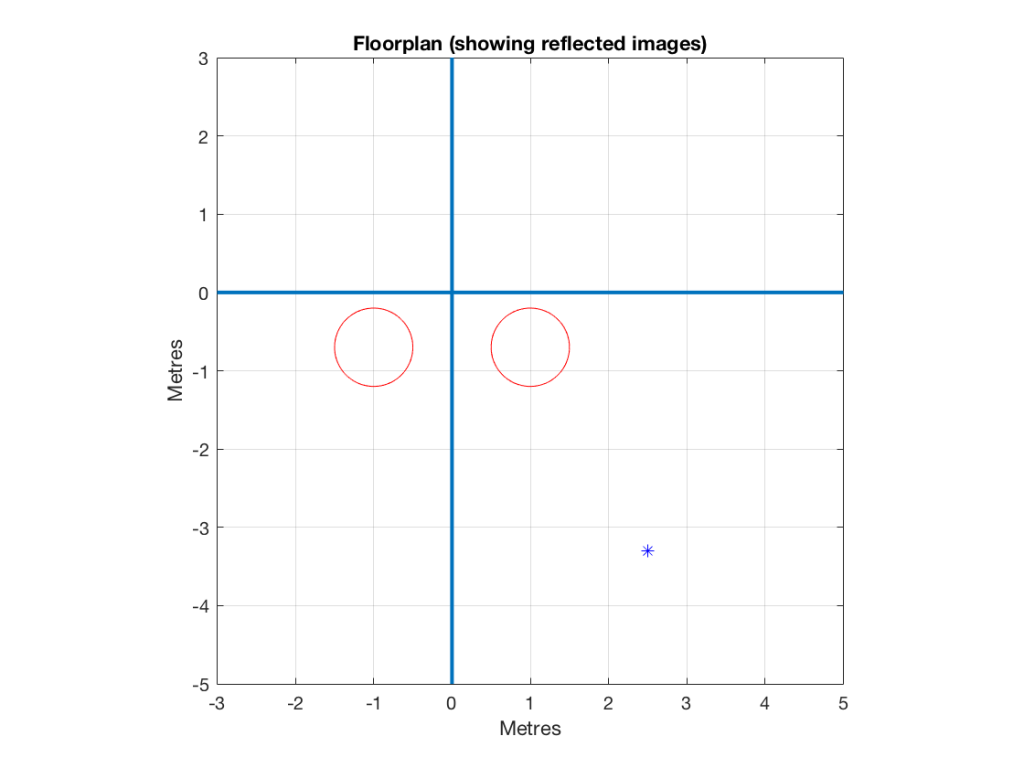

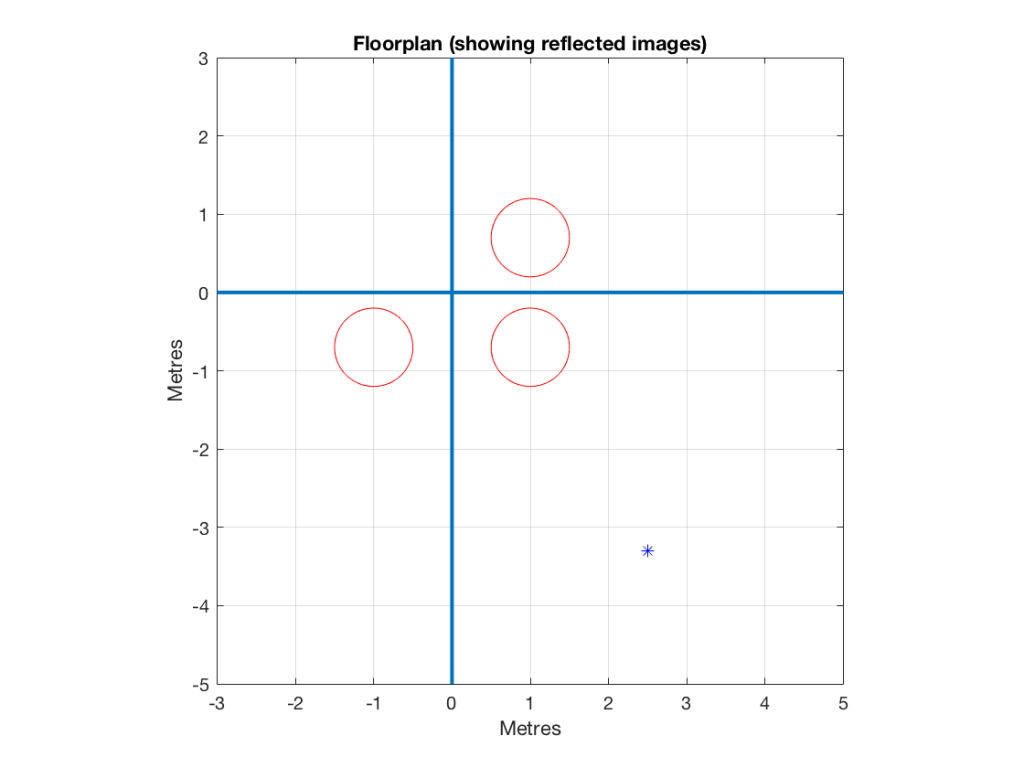

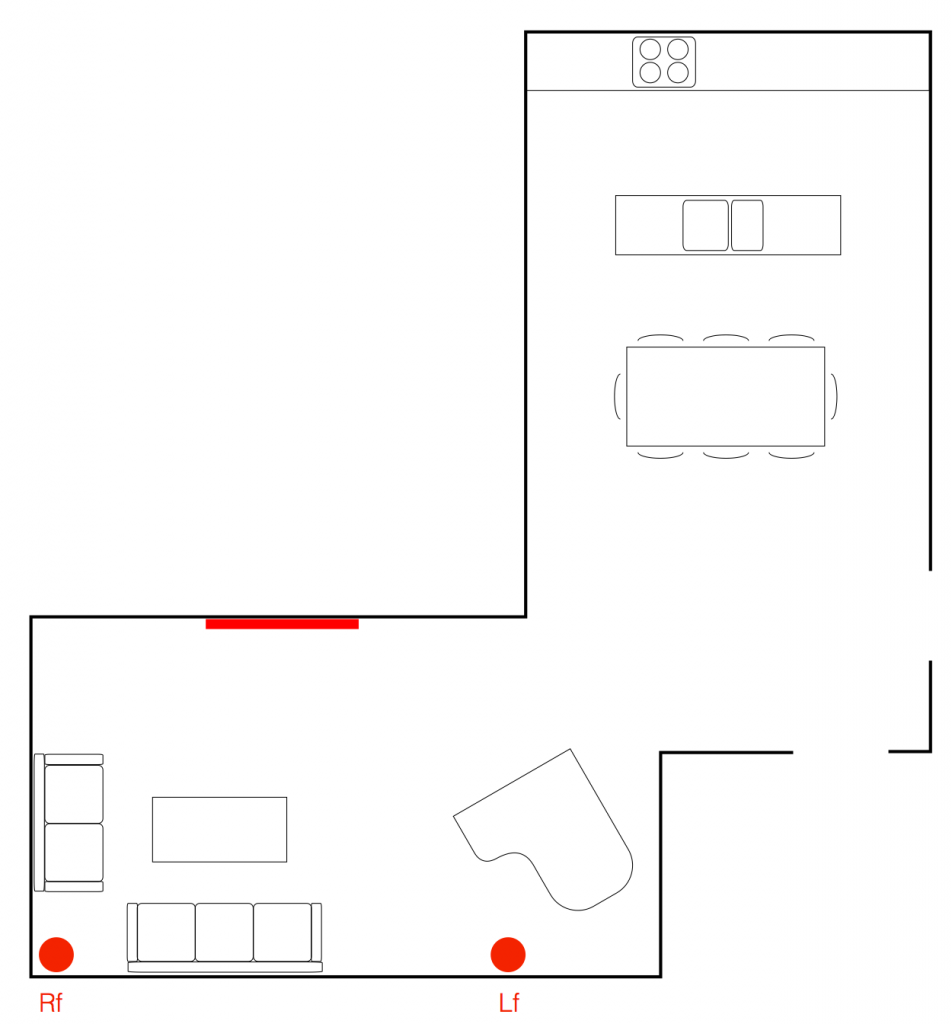

However, you aren’t stuck with this setup. Let’s say, for example, that, when you have dinner, you would like to use the external loudspeakers ONLY as a stereo pair, as is shown below in Figure 3.

Now, the external loudspeakers have changed their Speaker Roles. They were Left Surround and Right Surround in Figure 2 – but now they’re Left Front and Right Front. This configuration can be saved as another Speaker Group, and labelled something like “Dinner Music” for example.

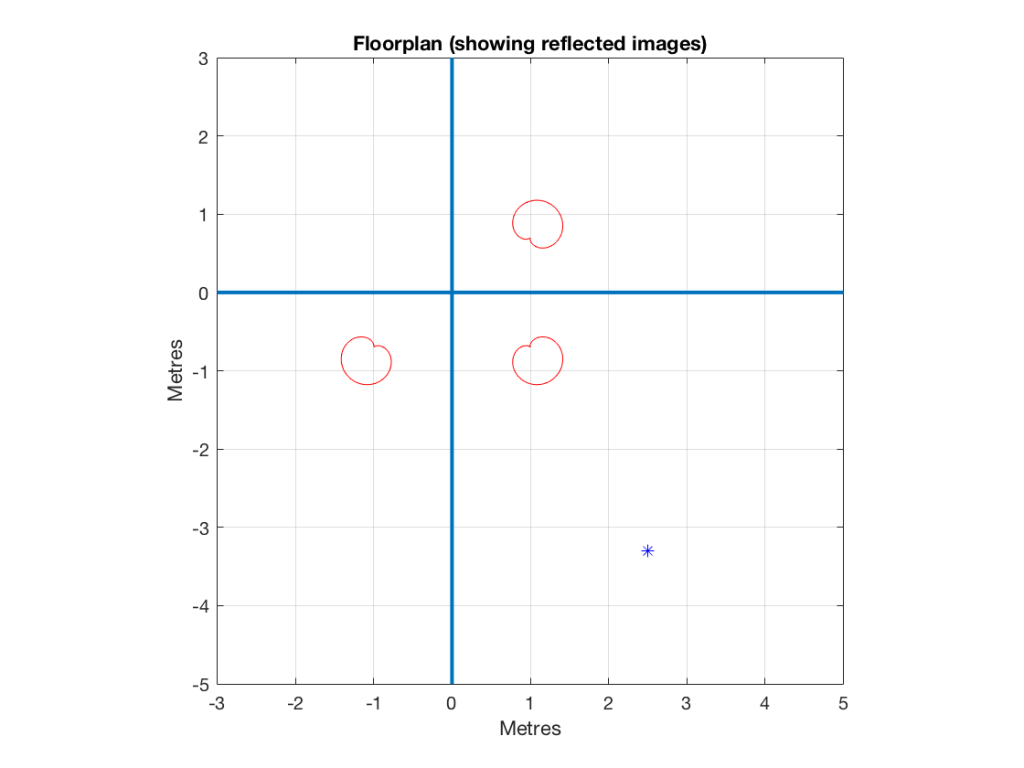

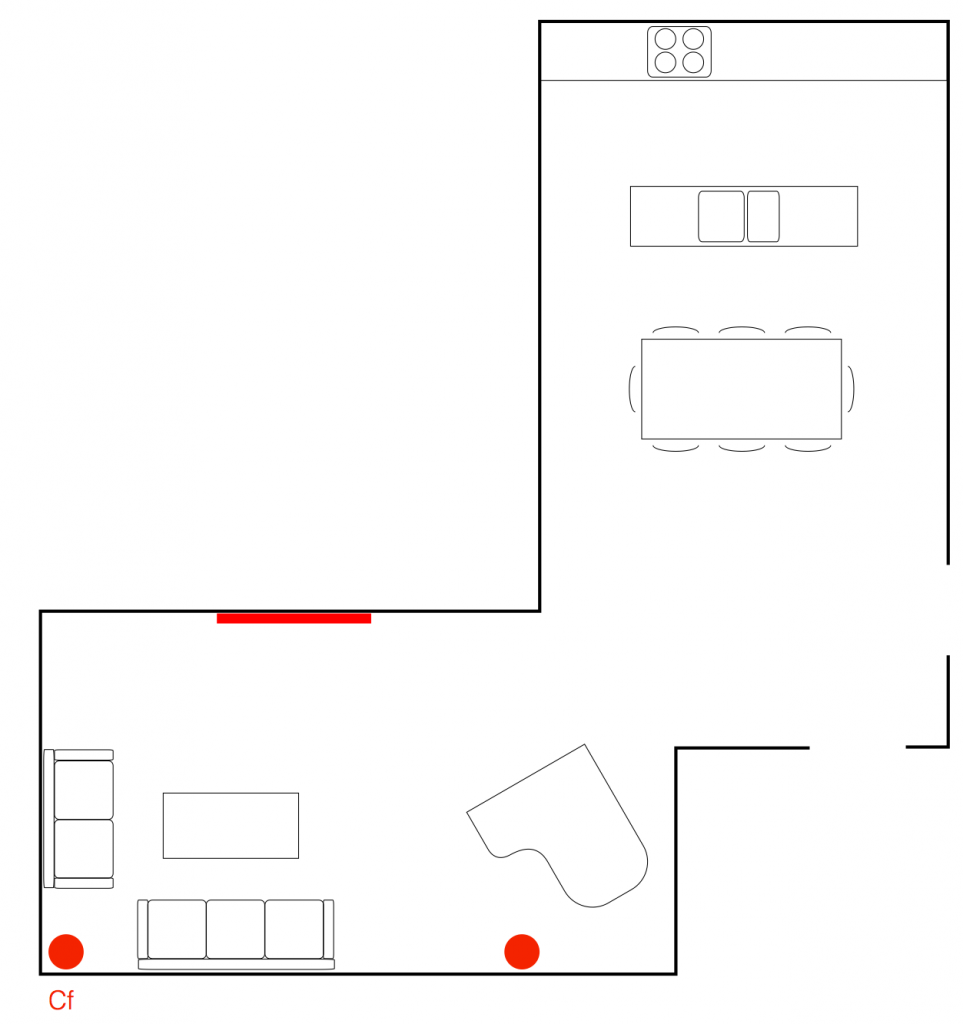

You could also do something completely non-intuitive – for example, a configuration for watching the evening news, where you only need to hear the dialogue, but everyone else in the house is either asleep or not interested in current affairs. Then you can route the Centre Front channel to the closest loudspeaker only, as shown below in Figure 4.

This can be saved as another Speaker Group, called “Speech – Night Listening” for example.

It should also be noted that there are no rules applied to the distribution of Speaker Roles in a Speaker Group. So, for example, if you wanted to have 19 loudspeakers, all playing the Left Surround channel, the TV will let you do this. I’m not suggesting that this is a good idea – I’m merely saying that the TV will not stop you from doing this…

Of course, when you create a Speaker Group, you not only define the various roles of the loudspeakers, you also set their Speaker Levels and Speaker Distances to ensure that the levels and times of arrival are all aligned as you require for your configuration.
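To make the idea of a Speaker Group a little more concrete, here is a minimal Python sketch of one as a list of loudspeakers, each with a role, a level and a distance. It also shows one common way to turn distances into time alignment: delay the nearer loudspeakers so that their sound arrives together with the farthest one. The class, the field names, the distances and the speed-of-sound value are all assumptions for illustration; the text does not describe how the TV does this internally.

```python
from dataclasses import dataclass

SPEED_OF_SOUND_M_PER_S = 343.0  # assumption: air at roughly 20 degrees C


@dataclass
class Speaker:
    name: str          # hypothetical label for the output
    role: str          # Speaker Role, e.g. "Lf", "Cf", "Rf", "Ls", "Rs" or "NONE"
    level_db: float    # Speaker Level trim in dB
    distance_m: float  # Speaker Distance to the listening position in metres


def alignment_delays_ms(group):
    """Return the delay per active speaker that makes all arrivals coincide.

    The farthest active speaker gets no delay; nearer ones wait for it.
    Speakers with the role "NONE" are ignored.
    """
    active = [s for s in group if s.role != "NONE"]
    if not active:
        return {}
    farthest = max(s.distance_m for s in active)
    return {
        s.name: (farthest - s.distance_m) / SPEED_OF_SOUND_M_PER_S * 1000.0
        for s in active
    }


# A made-up "surround sound" group: internal speakers in front, externals behind.
surround_group = [
    Speaker("internal left", "Lf", 0.0, 3.0),
    Speaker("internal centre", "Cf", 0.0, 3.0),
    Speaker("internal right", "Rf", 0.0, 3.0),
    Speaker("external left", "Ls", -2.0, 1.5),
    Speaker("external right", "Rs", -2.0, 1.5),
]

delays = alignment_delays_ms(surround_group)
for name, delay in delays.items():
    print(f"{name}: {delay:.2f} ms")
```

In this example the surround loudspeakers are 1.5 m closer than the front ones, so they are delayed by a little over 4 ms, while the front loudspeakers are not delayed at all.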

Update: I just made a new Speaker Group on a system with a BeoVision Eclipse and a pair of BeoLab 90s that I thought might make an interesting addition to this section. The Eclipse Speaker Group was created such that all connected loudspeakers (internal and external) were set to have a Speaker Role of NONE. This basically means that the TV uses no loudspeakers. You may wonder why this is a useful Speaker Group. The reason is that I was using the Eclipse as an external monitor for a computer, but I wanted to listen to music on the BeoLab 90s from another device (which is connected to their S/P-DIF Coaxial input). So, the Eclipse turns off the BeoLab 90s, which “frees them up” to automatically switch to the S/P-DIF input.

Up-/down-mixing capabilities

Internally, the Eclipse, like the BeoVision 11, Avant, Horizon, and 14, can create up to a 16-channel upmix of all signals that come into it, using the True Image algorithm. However, if your input channel mapping matches your output, then the upmixer does nothing. This decision (whether to upmix, downmix, or do nothing) is continually made on-the-fly. So, for example, let’s say that you have a 5.1-channel loudspeaker configuration with 5 main loudspeakers and one subwoofer. You start by playing 2-channel stereo music from a USB stick, and the True Image algorithm will upmix the 2 input channels to your 5 output channels and also bass manage the low frequency content to the subwoofer. You then switch to watch a DVD with a 5.1-channel signal, and True Image will connect the 6 input channels to the 6 loudspeakers directly without doing any interim spatial processing. Then, you change to a Blu-ray disc with 7.1-channel audio content, and True Image will downmix the 8 incoming channels to your 6 loudspeakers.

All of this happens automatically, and is also true if you switch Speaker Groups. So, if you start watching the 5.1-channel DVD with a 5.1-channel Speaker Group, then True Image will pass the signals through. If you then switch to the 2-channel Speaker Group, True Image will automatically start downmixing for you (rather than just not playing the “missing” output channels).
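The decision described above can be sketched in a few lines of Python. This is only a simplification that compares channel counts; the real True Image algorithm looks at the channel mapping and does far more than this, and the function and constant names here are hypothetical.

```python
def mixing_mode(input_channels: int, output_channels: int) -> str:
    """Pick upmix, downmix or pass-through by comparing channel counts only."""
    if input_channels < output_channels:
        return "upmix"
    if input_channels > output_channels:
        return "downmix"
    return "pass through"


# Speaker Groups from the example: 5 main loudspeakers + 1 subwoofer, or stereo.
SPEAKER_GROUP_5_1 = 6
SPEAKER_GROUP_STEREO = 2

for source, channels in [("USB stereo", 2), ("DVD 5.1", 6), ("Blu-ray 7.1", 8)]:
    print(source, "->", mixing_mode(channels, SPEAKER_GROUP_5_1))

# Switching the Speaker Group re-runs the same decision for the same source.
print("DVD 5.1, stereo group ->", mixing_mode(6, SPEAKER_GROUP_STEREO))
```

Running this prints upmix, pass through and downmix for the three sources on the 5.1 group, and downmix for the DVD once the stereo group is selected, which matches the behaviour described in the text.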

Of course, if you’re a purist, then the True Image algorithm can be disabled, and the incoming audio channels can be just routed to their respective outputs directly. However, this means that if your input format does not match your output format, then either you’ll not hear some audio channels (if you have more input channels than output channels) OR some loudspeakers will not play audio (if you have fewer input channels than output channels).
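The consequence of direct routing can be illustrated with simple set operations. In the hedged Python sketch below, each input channel is sent only to a loudspeaker with the same role; anything left over on either side is reported. The function name and the layouts are assumptions for illustration, and the subwoofer channel is left out to keep the example short.

```python
def direct_routing(input_channels, speaker_roles):
    """Route channels to same-named roles with no up- or down-mixing.

    Returns three sorted lists: channels that are routed, input channels
    that nobody plays, and loudspeaker roles that receive no signal.
    """
    inputs = set(input_channels)
    outputs = set(speaker_roles)
    routed = sorted(inputs & outputs)
    unheard = sorted(inputs - outputs)
    silent = sorted(outputs - inputs)
    return routed, unheard, silent


STEREO = ["Lf", "Rf"]
FIVE_MAIN = ["Lf", "Cf", "Rf", "Ls", "Rs"]

# More input channels than loudspeakers: some channels are not heard.
print(direct_routing(FIVE_MAIN, STEREO))
# Fewer input channels than loudspeakers: some loudspeakers stay silent.
print(direct_routing(STEREO, FIVE_MAIN))
```

In the first case the Cf, Ls and Rs channels are simply lost; in the second, the loudspeakers with those roles play nothing.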

Intelligent bass management

If all of the external loudspeakers that you’ve connected to the BeoVision Eclipse are Bang & Olufsen products, then you simply tell the television which loudspeaker models you have (if they’re connected wirelessly, then this happens automatically) and the TV will automatically decide whether each loudspeaker should be bass-managed or not. This is because the TV is programmed with the bass capabilities of all Bang & Olufsen loudspeakers in the current portfolio – and many legacy products. This means that the TV “knows” which speakers can play the loudest bass – so it will automatically configure itself for each Speaker Group, ensuring that your bass is re-routed to the most capable loudspeakers.

Of course, this can be overridden in the user menus. So, if you wish to disable Bass Management, you can do so. However, you can also create extreme cases where you send the bass managed signal to all loudspeakers. This is not necessarily a good idea – nor will it necessarily give you the most bass (due to possible phase differences between the loudspeakers, for example) – however, you can do it if you wish.

If the external loudspeakers are not Bang & Olufsen products, then you simply choose “Other” as your Speaker Connection (or speaker type) in the menus, and the TV will know that it cannot make automatic decisions about the bass management – so you’ll have to configure this yourself.
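As a rough illustration of the automatic choice, the Python sketch below picks the loudspeakers with the highest bass capability in a group and declines to decide when an “Other” loudspeaker is present. Everything in it is an assumption: the speaker type names and capability scores are invented and are not real product data, and the actual decision logic in the TV is not described in the text.

```python
# Hypothetical capability table. The names and scores are invented for
# illustration only and do NOT describe real loudspeakers.
BASS_CAPABILITY = {
    "large floorstander": 10,
    "compact satellite": 3,
    "TV internal": 5,
}


def bass_targets(speaker_types):
    """Return the speaker types that should receive the redirected bass.

    Returns None when the group is empty or contains a speaker of type
    "Other", since such setups have to be configured by hand.
    """
    if not speaker_types or "Other" in speaker_types:
        return None
    best = max(BASS_CAPABILITY[t] for t in speaker_types)
    return [t for t in speaker_types if BASS_CAPABILITY[t] == best]


print(bass_targets(["TV internal", "compact satellite"]))
print(bass_targets(["TV internal", "large floorstander", "compact satellite"]))
print(bass_targets(["TV internal", "Other"]))
```

Because the choice is recomputed from the list of loudspeakers, each Speaker Group can end up with a different bass target, which is the behaviour described above.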

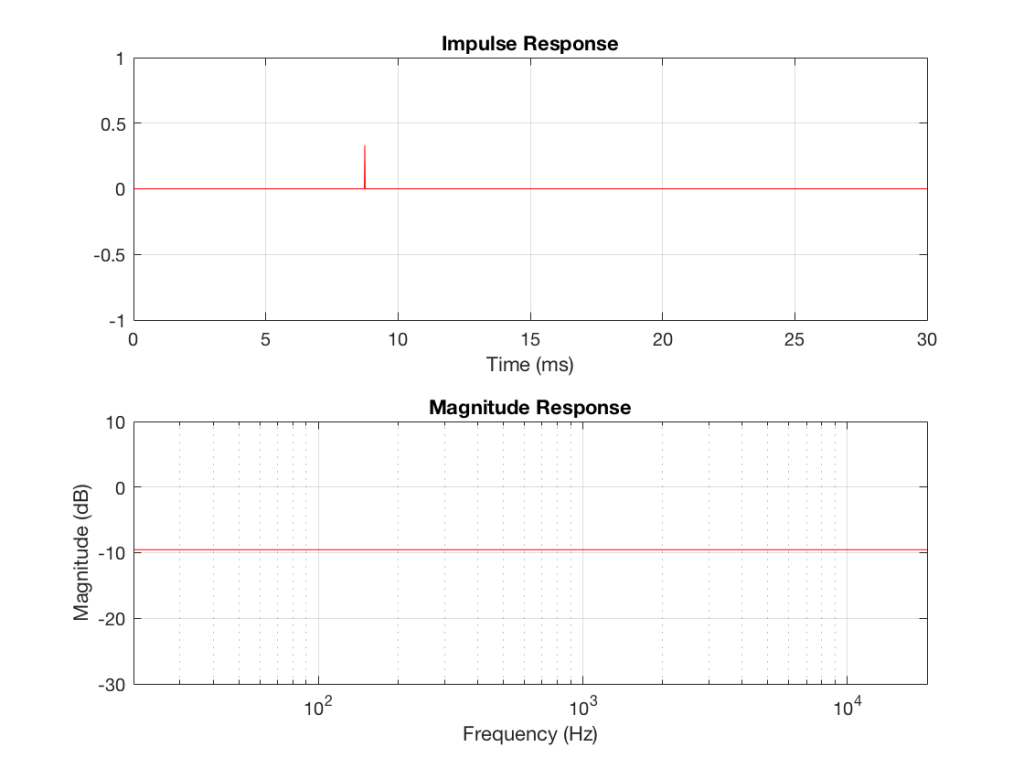

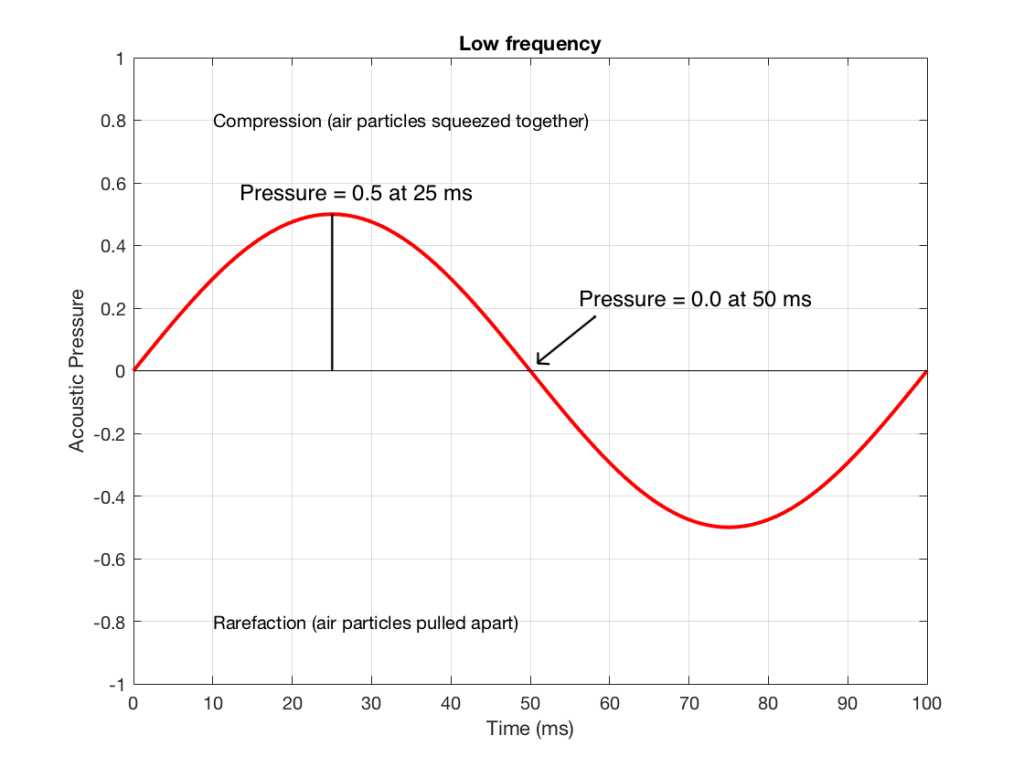

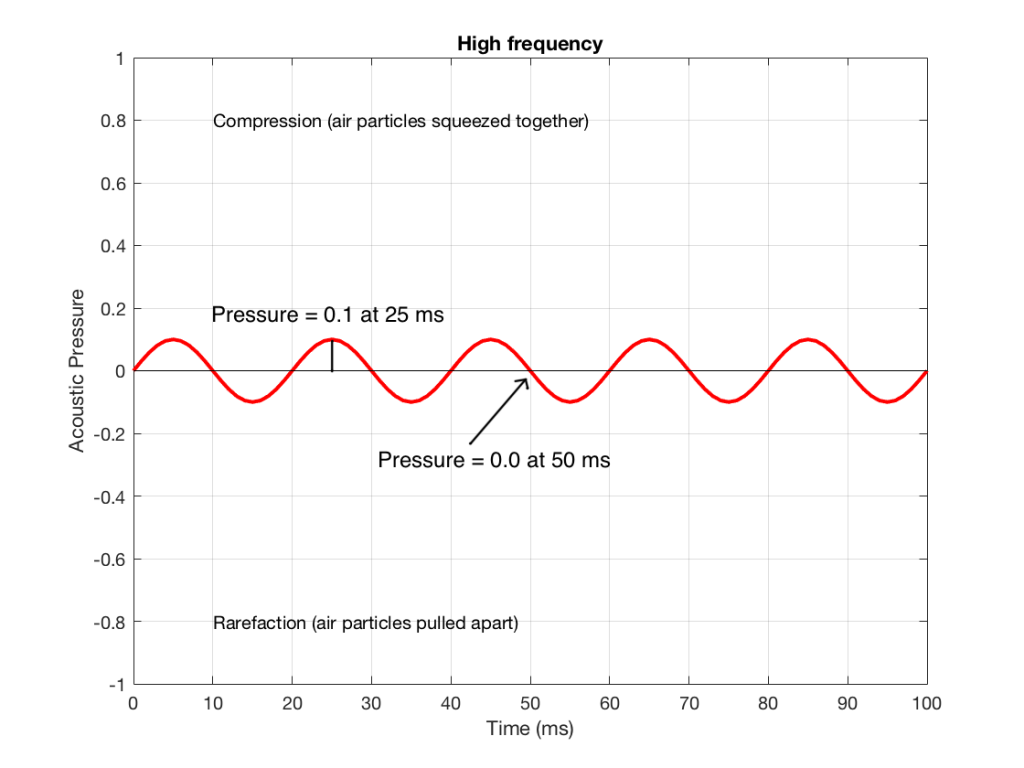

Automatic Latency Management

Different Bang & Olufsen loudspeakers have different “latencies”. (The latency of a loudspeaker is the time it takes for the signal to go through it – from the electrical input to the acoustical output.) For some older products (like the BeoLab 3, for example), the latency is 0 ms, because it is an analogue loudspeaker. For some others, it is between 2.5 and 5 ms (depending on the particular loudspeaker). The BeoLab 50 and BeoLab 90 each have two latency modes: either 25 ms or 100 ms, depending on how they are configured.

In order to ensure that all of these different loudspeakers can “live together” in a single surround system (and also in a multiroom configuration with other products in your house), the TV must also “know” the latencies of the various loudspeakers that are connected to it.

In addition, the BeoVision Eclipse can “tell” the BeoLab 50 and 90 to change latency settings on-the-fly to optimise the configuration to ensure lip sync. (Note that, in order for this to happen, the BeoLab 50 and 90 must be set to “Auto” latency mode, allowing them to be switched by the TV.)
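One plausible way to make loudspeakers with different latencies “live together” is to delay the faster ones until they match the slowest. The Python sketch below shows that calculation using the latency figures quoted above; the function name and the unnamed 5 ms loudspeaker are hypothetical, and this is not a description of the TV’s actual implementation.

```python
def compensation_delays_ms(latencies_ms):
    """Return the extra delay each speaker needs to match the slowest one."""
    if not latencies_ms:
        return {}
    slowest = max(latencies_ms.values())
    return {name: slowest - latency for name, latency in latencies_ms.items()}


# Latencies in ms, using the values from the text.
fast_mode = {
    "BeoLab 3 (analogue)": 0.0,
    "other loudspeaker (hypothetical)": 5.0,
    "BeoLab 90 (25 ms mode)": 25.0,
}
slow_mode = dict(fast_mode)
del slow_mode["BeoLab 90 (25 ms mode)"]
slow_mode["BeoLab 90 (100 ms mode)"] = 100.0

print(compensation_delays_ms(fast_mode))
print(compensation_delays_ms(slow_mode))
```

With the BeoLab 90 in its 25 ms mode, the whole system runs 25 ms late; in the 100 ms mode, everything has to wait 100 ms. That difference is why being able to switch the mode from the TV matters for lip sync.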

Other Features

As I said at the top, I’m concentrating on the audio and acoustic features of the BeoVision Eclipse. There are many aspects of the LG screen that I won’t discuss here. In addition, there are a multitude of video and audio input options and built-in sources (like Netflix, Amazon, Google Chromecast, Apple AirPlay, and so on…) which I also won’t go through.

Finally, of course, it goes without saying that in order to control all of this you only need to have one remote control sitting on your coffee table…

For more information

What’s So Great About Active Loudspeakers?