Author: geoff

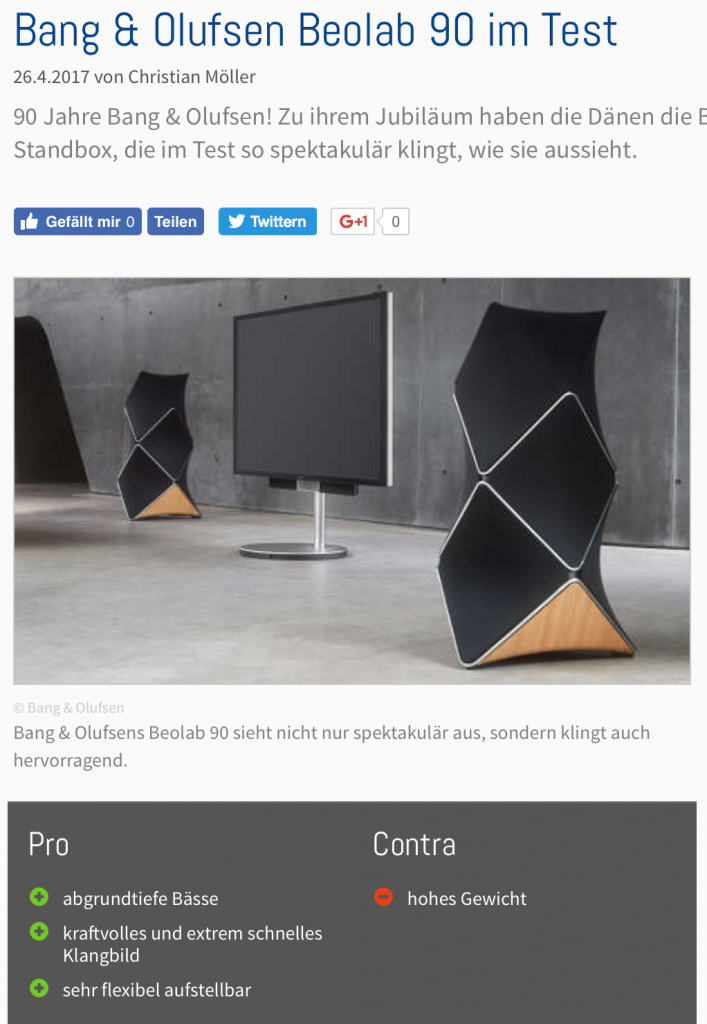

BeoLab 90 review in Germany

One-dimensional answers to a multidimensional question: Part 2

I once read a discussion about microphone placement in a Usenet forum (it was a long time ago). Someone asked “where is the best place to position the microphones to record a French horn?” Lots of people had opinions, but the answer that I liked most was “that’s like asking ‘where is the best place to stand to take a photo of a mountain?’” Of course, that answer might have been too facetious for the person asking the question, but, in my opinion, it was a good analogy. The correct answer, as always, is “it depends” – in this case, on perspective.

Example #1

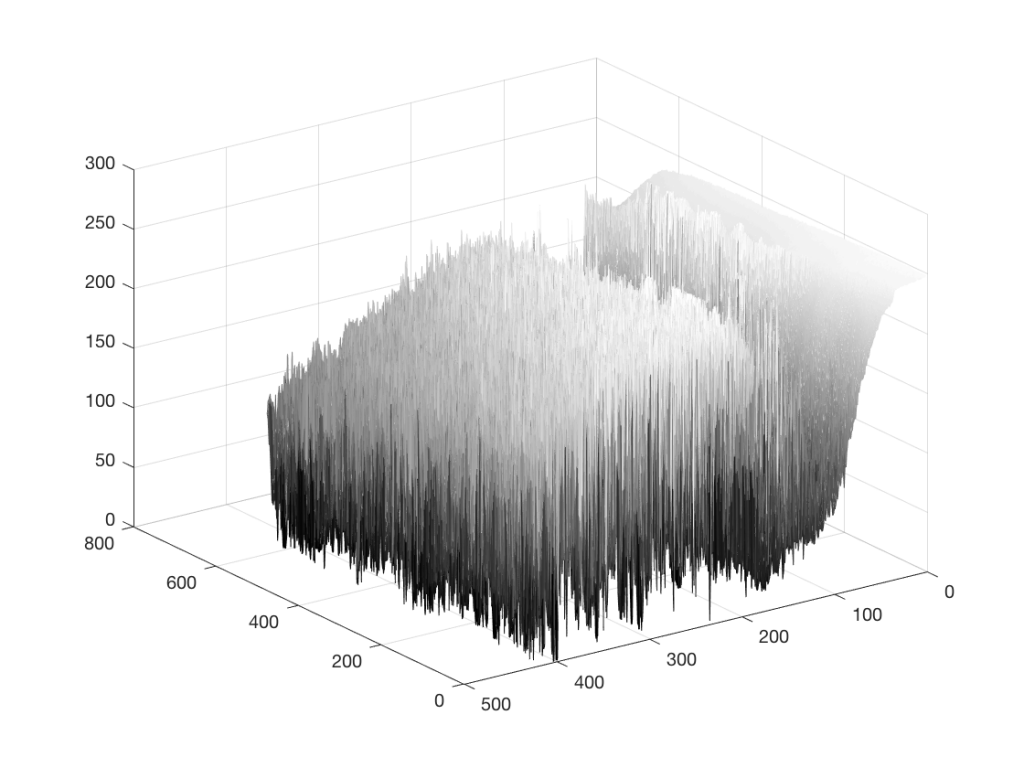

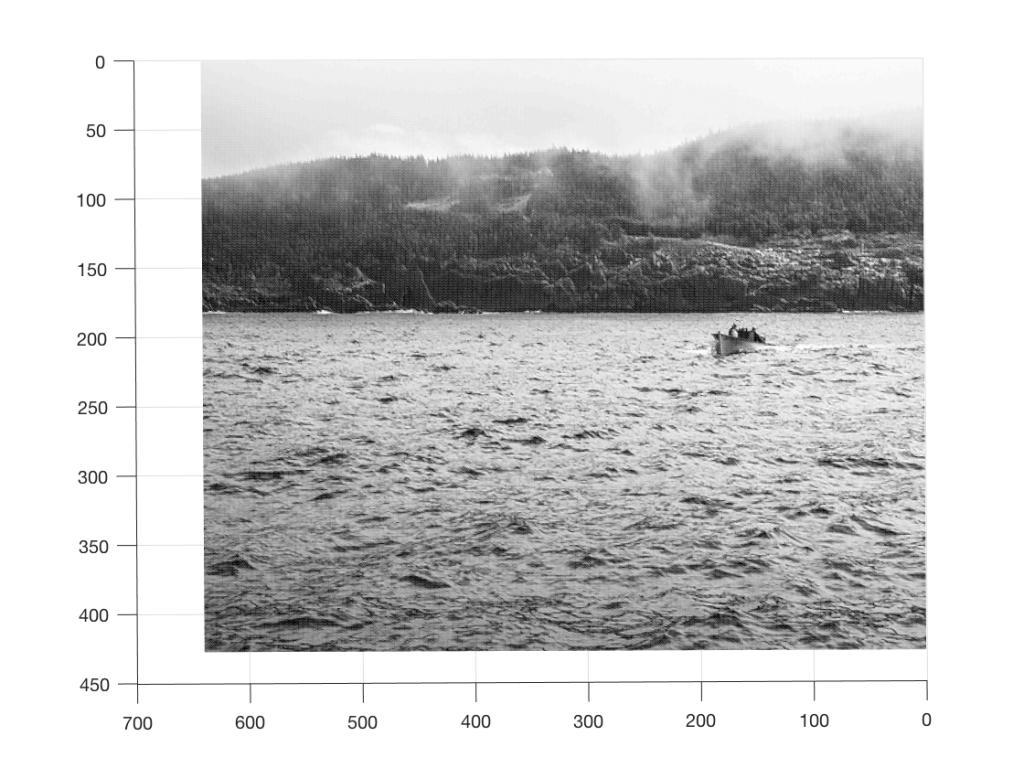

Take a look at the image below.

As you can read in the caption, this is a 640 x 480 black and white photo of a fishing boat off the coast of Newfoundland, near where I grew up, on a foggy day. Of course, if I didn’t tell you that, then it would be impossible to know it – but that’s because you’re looking at the “data” (the information in the pixels in the photo) from the wrong place… I’ll rotate the image a little and we’ll try again.

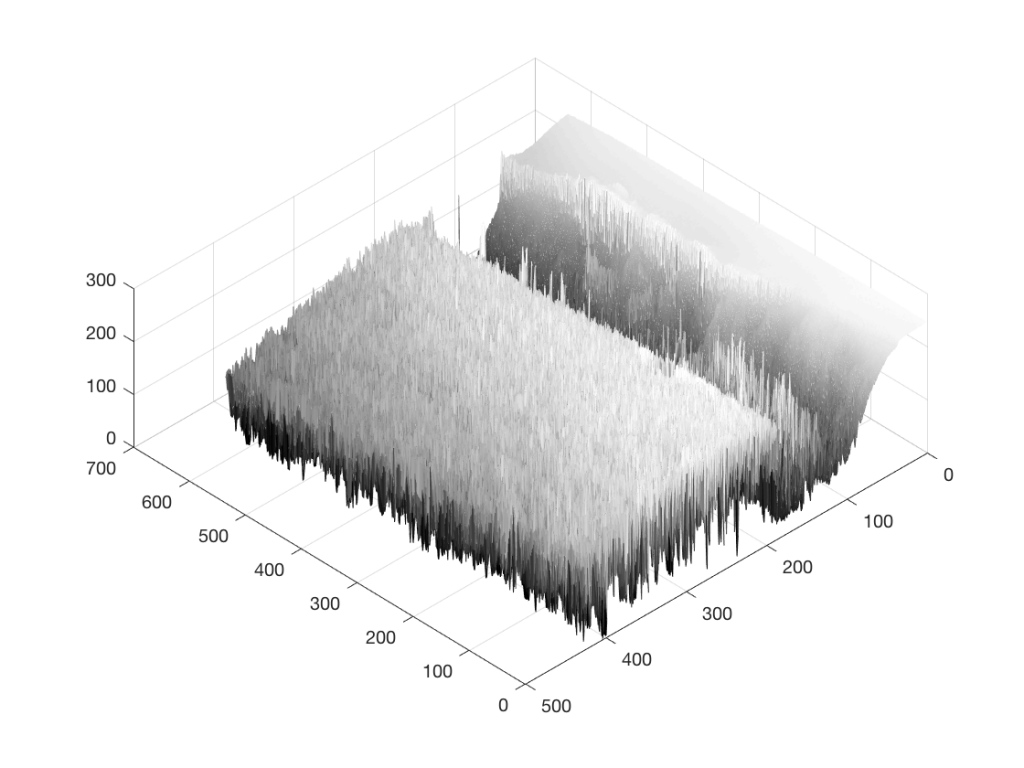

Figure 2 is just a rotation of Figure 1 – we’re still looking at the same photo, but from another direction. It still doesn’t look like a boat… Let’s rotate some more…

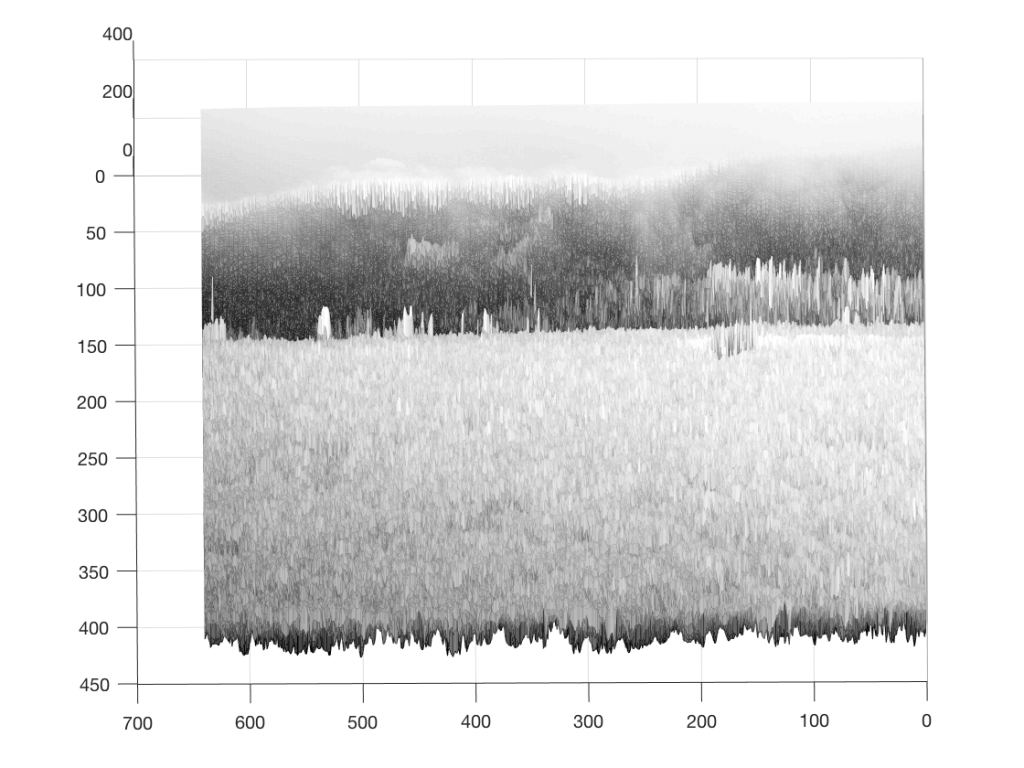

Figure 3 is looking more like something – but there’s still no boat in sight… If you come back to Figure 3 after you look at Figure 4, you’ll recognise the trees on the land, the sky, and the water – you’ll also be able to see where the boat is. But this view of the photo is just off-position enough to scramble the data into being almost unrecognisable. So, let’s rotate the view of the data one last time…

So, what was the point of this somewhat obscure analogy? It was to try to show that, by looking at the data from only one viewpoint, or one dimension (say, Figure 1, for example), you might arrive at an incorrect interpretation of the data.

Example #2

Watch this video.

In this video, Penn and Teller do the same trick twice. Both times, the trick is impressive, but for two different reasons. This is because your perspective changes. The first time, it’s just a good magic trick – or at least an old one. The second time, you’re impressed because of their skill in executing it. Two different perceptions resulting from two different perspectives.

Example #3

Once-upon-a-time, I taught a course in electroacoustic measurements at McGill University. I remember one class, early in the year, where I started one day by saying “What is a ‘frequency response’?” and one of the students, with a smile on his face, replied “The only thing that matters…”

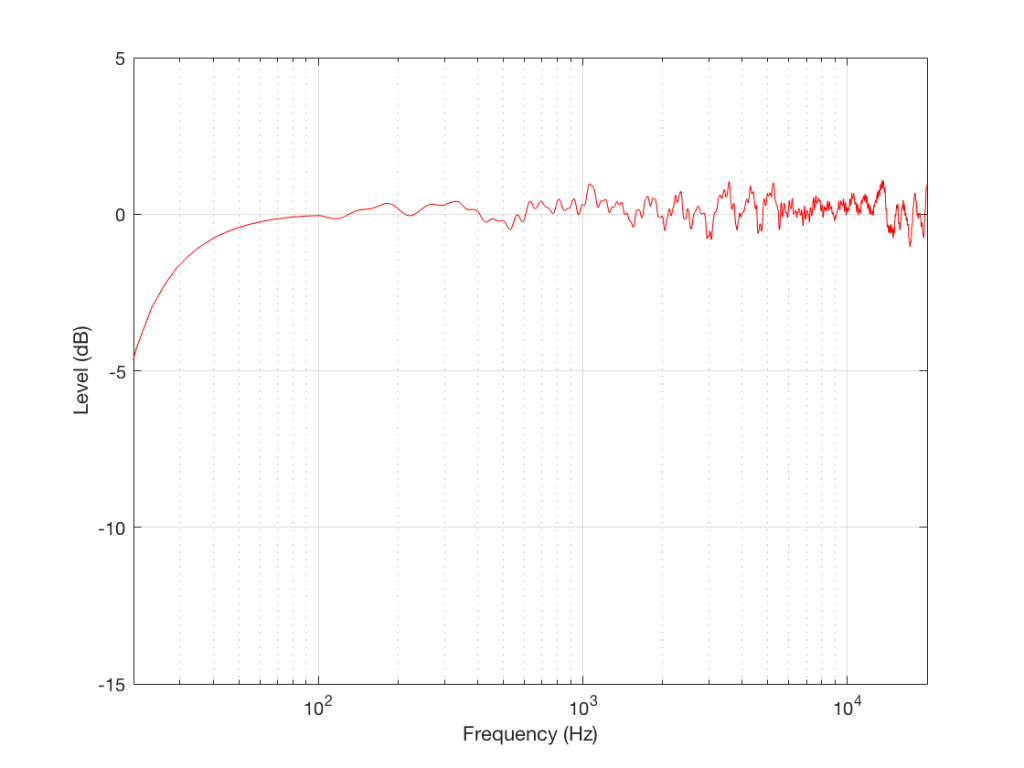

I went through some old data and found a measurement of a loudspeaker. Figure 5, below, shows the magnitude response of a three-way loudspeaker, measured in free-field (therefore, no reflections or influence of the room) at a distance of three meters from the loudspeaker, on-axis to the tweeter.

This is just the kind of measurement that you’d see in a magazine… It’s also the kind of measurement that you’d use to make a “frequency response” for a data sheet. This one would read something like “<40 Hz – >20 kHz ±1 dB”, give or take.

However, let’s think about what this really is and whether it actually tells you anything at all… It’s a measurement of the relationship between input voltage and output pressure, in one place in space, at one listening level, with one type of signal (maybe a swept sine wave or an MLS signal, or something else…), at one temperature of the drivers’ voice coils, at one relative humidity level of the air (okay, okay… now I’m getting into excruciating minutiæ…)

However, does this tell us anything about how the loudspeaker will sound? Well, yes. If you use it outdoors in a large field and you stand 3 m in front of it and listen to the same signal that was used to do the measurement. If, however, you stand closer, or not directly in front of it, or if you listen to music over time, or if you bring it indoors, this is just one piece of information – perhaps useful, but certainly inadequate…

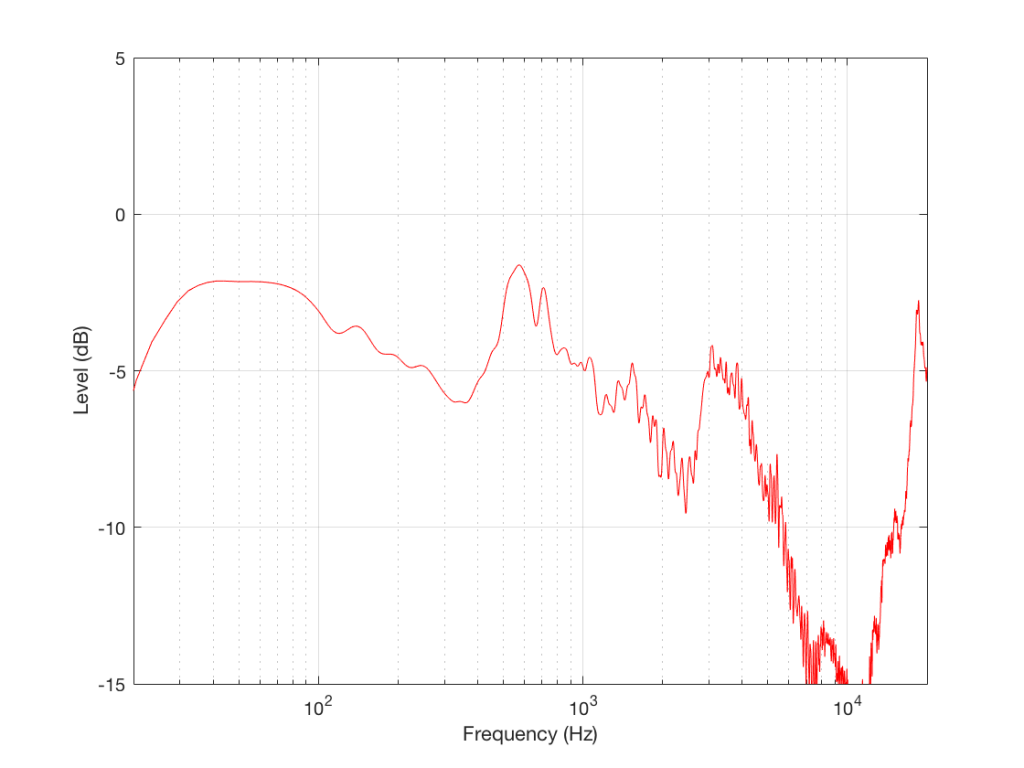

Let’s look at another measurement of the same loudspeaker…

As you can see, this loudspeaker’s magnitude response looks “pretty bad” – or at least “not very flat” off-axis (which implies that I just equated “flat” with “good” – which might not necessarily be correct…).

This is the magnitude response of the signal that this loudspeaker will send out the side while you’re listening to that “nice” flat direct sound. Something like this will hit the side wall and reflect back, different frequencies reflecting with different intensities according to the absorptive properties of the wall, the total distance travelled by the reflection, and the relative humidity (okay, okay …I’ll stop with the humidity references…)

As is obvious in Figure 6, this “sound” is almost completely unlike the “sound” in Figure 5 (assuming that a free-field magnitude response can be translated to “sound” – which is a stretch…)

So, just like in Example 1 and Example 2, by “looking” at the data from another direction, we get some more information that should be used to influence our opinion. The more data from the more perspectives, the better…

So, we have one measurement that shows that this loudspeaker is “flat” and therefore “good”, in some persons’ opinions. However, we have a bunch of other measurements that prove that this is not enough information. And, if we measure the same loudspeaker at a different listening level, or at a different temperature, or with a different stimulus, we’d probably get a different answer. How different the measurement is depends on how different the measurement conditions are.

The “punch line” is that you cannot make any assumptions about how that loudspeaker will sound based on that one measurement in Figure 5 or the “frequency response” information in its datasheet. In fact, it could just be that having that graph in your hand will be worse than having no graphs in your hand, because your eyes might tell you that this speaker should sound good, and they get into a debate with your ears, who might disagree…

So, without more information, that one plot in Figure 5 is just a plot of one parameter – or one dimension – of many. And you can’t draw any conclusions based on that.

Or put another way:

An astronomer, a physicist and a mathematician are on a train in Scotland. The astronomer looks out of the window, sees a black sheep standing in a field, and remarks, “How odd. All the sheep in Scotland are black!” “No, no, no!” says the physicist. “Only some Scottish sheep are black.” The mathematician rolls his eyes at his companions’ muddled thinking and says, “In Scotland, there is at least one sheep, at least one side of which appears to be black from here some of the time.” Link

Recording Studio Time Machine

Probability Density Functions, Part 3

In the last posting, I talked about the effects of a bandpass filter on the probability density function (PDF) of an audio signal. This left the open issue of other filter types. So, below is the continuation of the discussion…

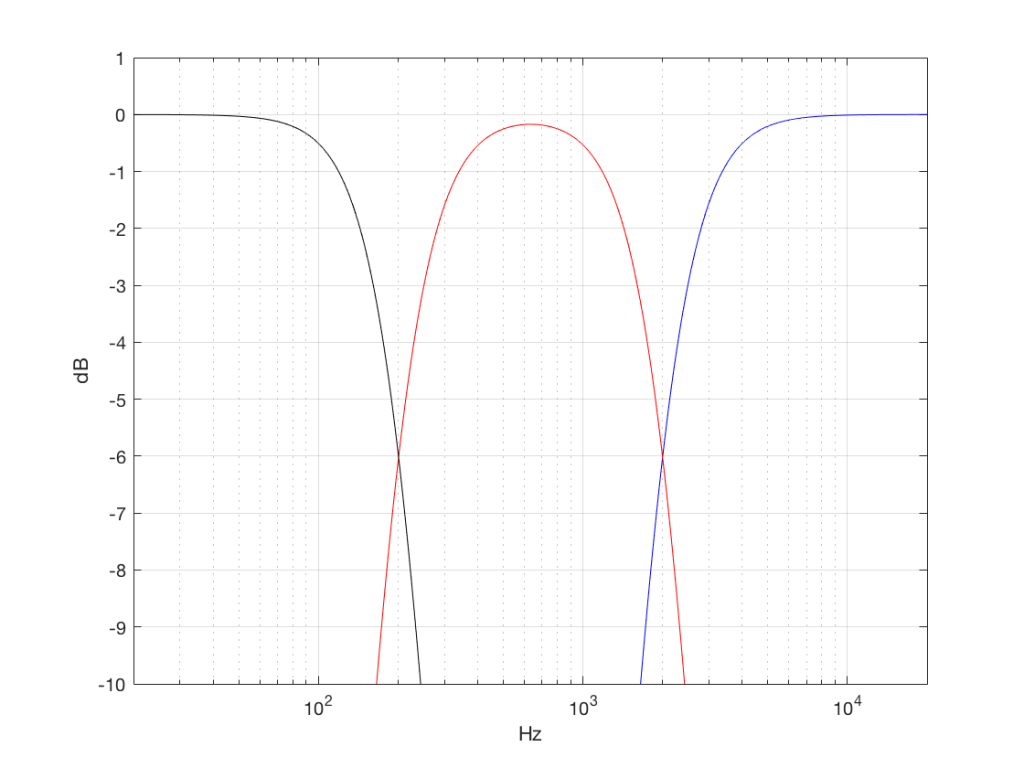

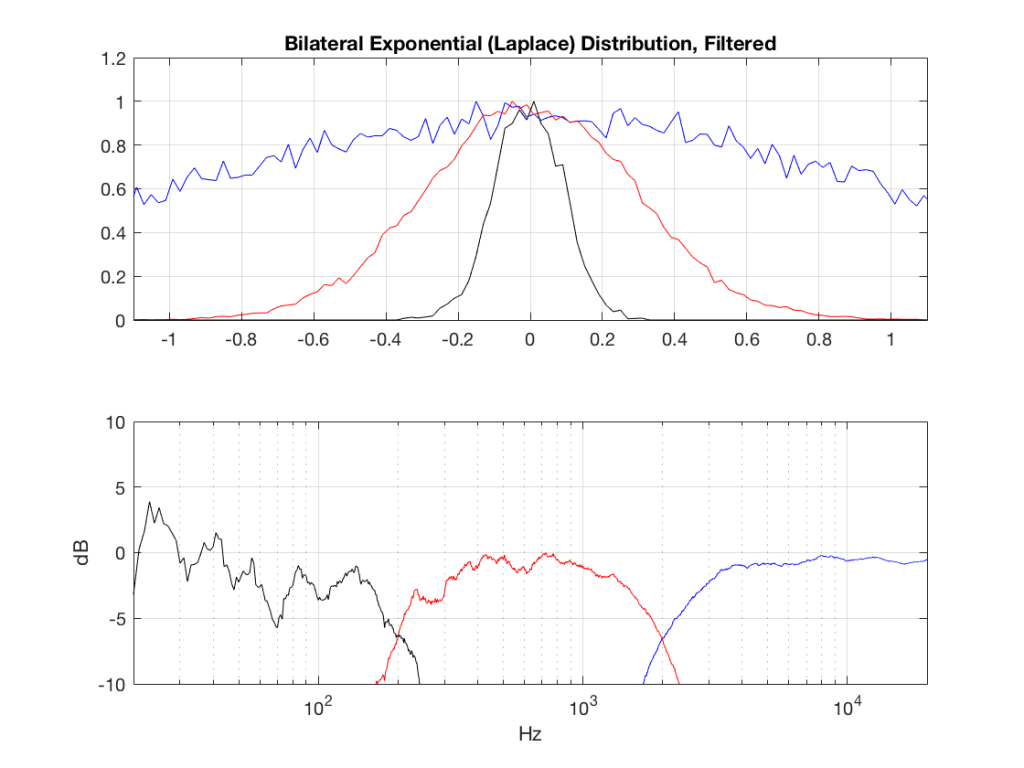

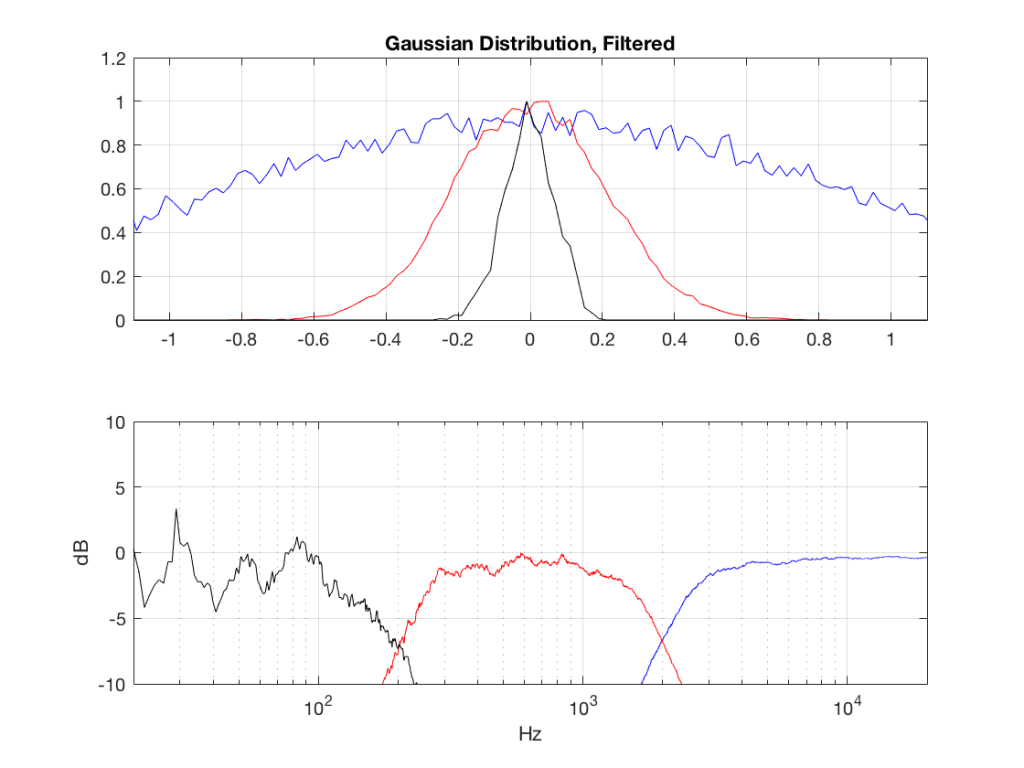

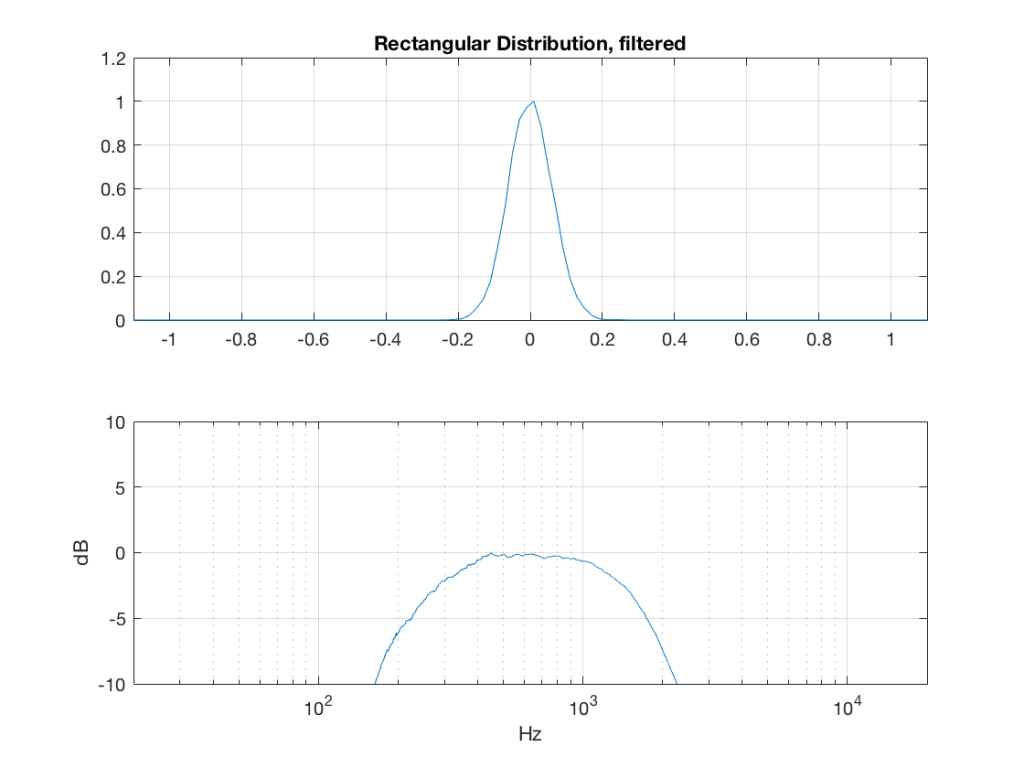

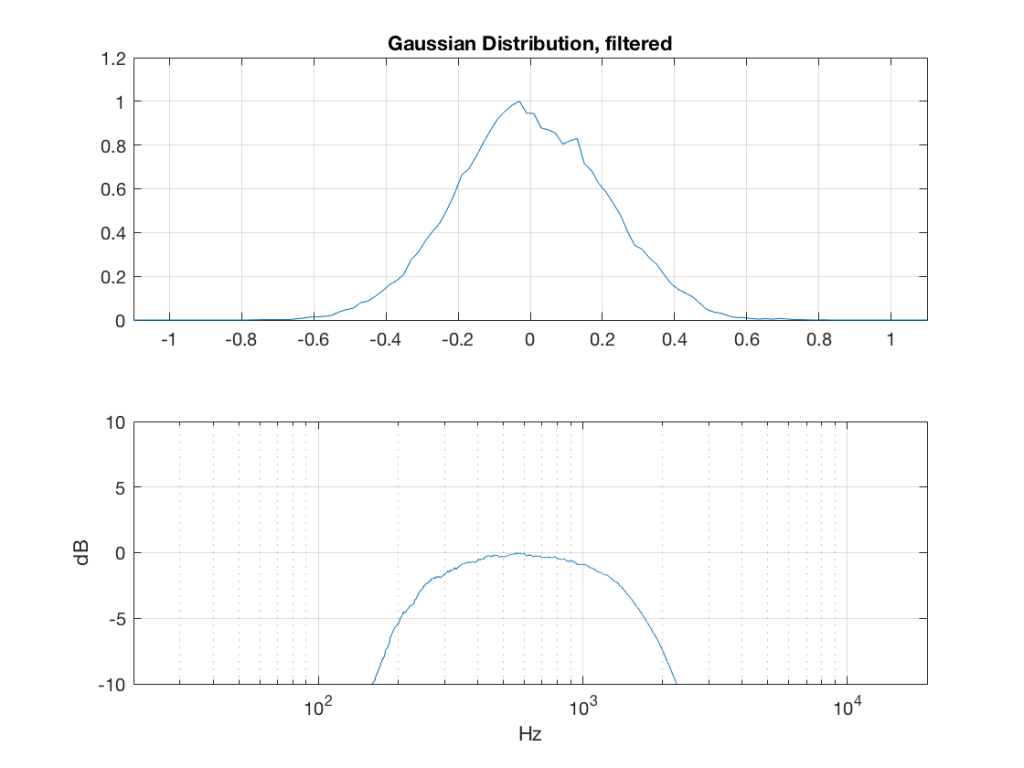

I made noise signals (length 2^16 samples, fs=2^16) with different PDFs, and filtered them as if I were building a three-way loudspeaker with a 4th order Linkwitz-Riley crossover (without including the compensation for the natural responses of the drivers). The crossover frequencies were 200 Hz and 2 kHz (which are just representative, arbitrary values).

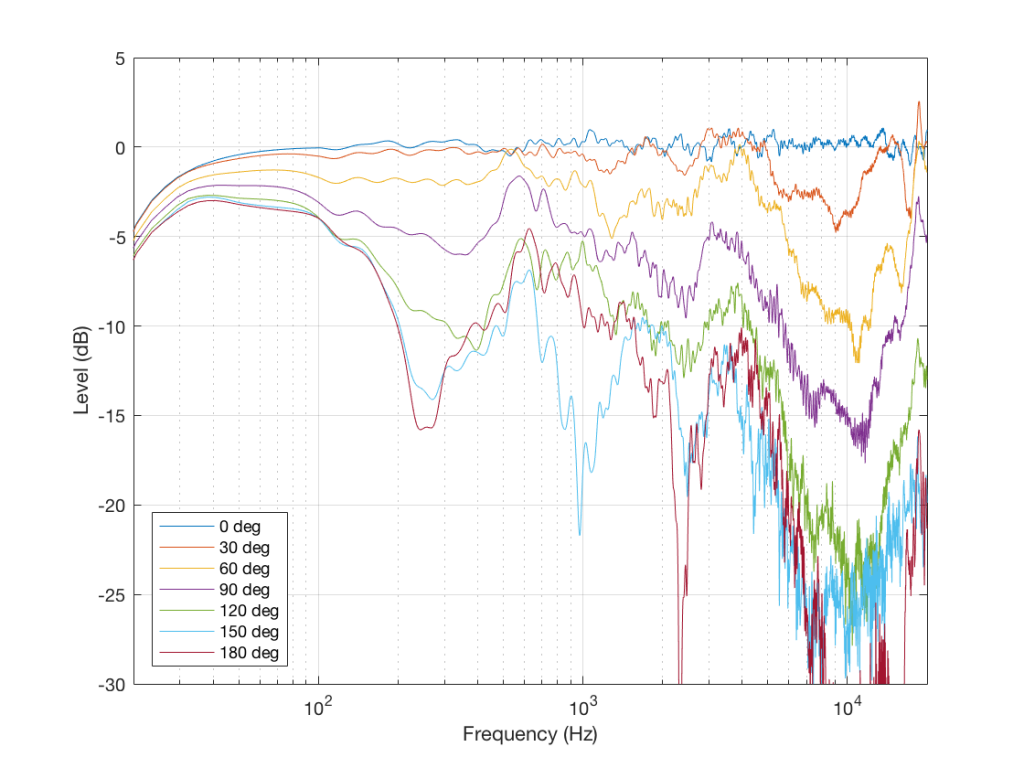

So, the filter magnitude responses looked like Figure 1.
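For anyone who wants to reproduce curves of this kind, here is a minimal sketch. It assumes each 4th-order Linkwitz-Riley slope is built from two identical 2nd-order Butterworth biquads, designed with the bilinear transform in the common "cookbook" form. The variable names and the analysis frequency grid are my own choices, not taken from the original measurement code.

```matlab
% Sketch: magnitude responses of a three-way, 4th-order Linkwitz-Riley
% crossover built from cascaded 2nd-order Butterworth sections.
% Assumption: bilinear-transform ("cookbook") biquads, no driver compensation.
fs   = 2^16;               % sampling rate in Hz, as in the post
f_lo = 200;                % lower crossover frequency in Hz
f_hi = 2000;               % upper crossover frequency in Hz
Q    = 1/sqrt(2);          % Q of a 2nd-order Butterworth section

% Analysis frequencies (the two crossover frequencies are included exactly)
f  = unique([logspace(log10(20), log10(20000), 500)'; f_lo; f_hi]);
z1 = exp(-1j * 2*pi*f/fs); % z^-1 evaluated on the unit circle

% Frequency response of one biquad with numerator b and denominator a
H = @(b, a) (b(1) + b(2)*z1 + b(3)*z1.^2) ./ (a(1) + a(2)*z1 + a(3)*z1.^2);

% Coefficients for a cutoff frequency fc
den    = @(fc) [1 + sin(2*pi*fc/fs)/(2*Q), -2*cos(2*pi*fc/fs), 1 - sin(2*pi*fc/fs)/(2*Q)];
lp_num = @(fc) (1 - cos(2*pi*fc/fs)) * [0.5,  1, 0.5];
hp_num = @(fc) (1 + cos(2*pi*fc/fs)) * [0.5, -1, 0.5];

% Two identical sections in series give the Linkwitz-Riley slope
LP = @(fc) H(lp_num(fc), den(fc)).^2;
HP = @(fc) H(hp_num(fc), den(fc)).^2;

woofer   = LP(f_lo);
midrange = HP(f_lo) .* LP(f_hi);
tweeter  = HP(f_hi);

mag_dB = 20*log10(abs([woofer, midrange, tweeter]));   % one column per band
% semilogx(f, mag_dB); ylim([-60 6]);                  % uncomment to plot
```

With this construction each band should sit about 6 dB down at its crossover frequency, which is a quick way to check that the coefficients are right.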

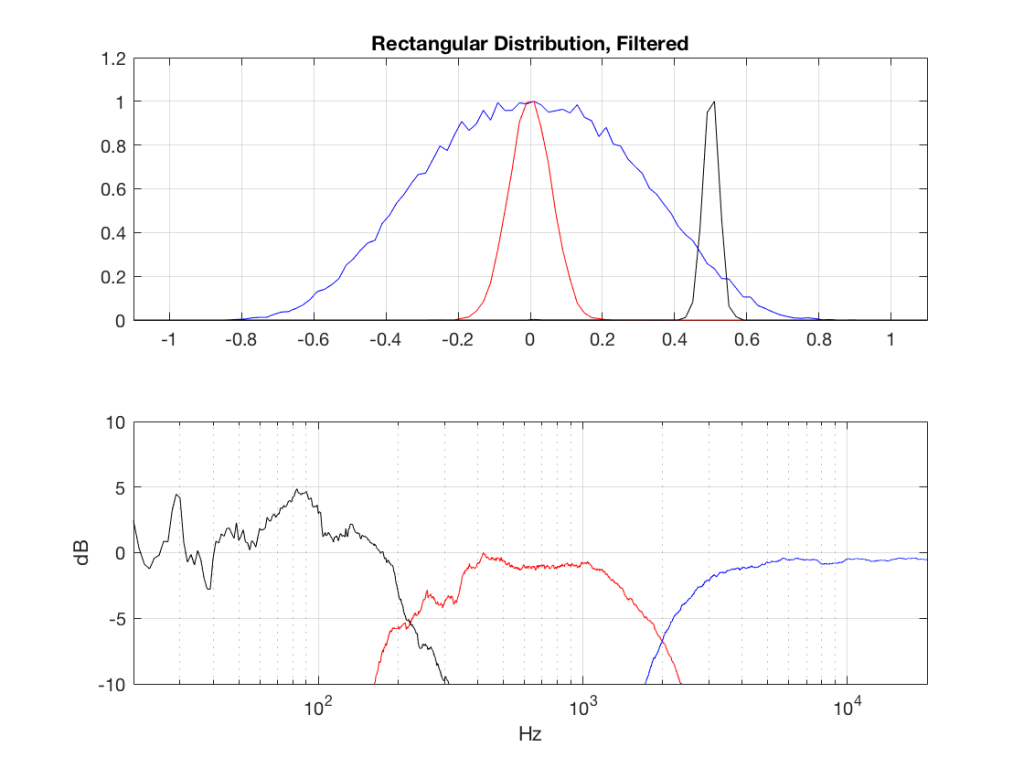

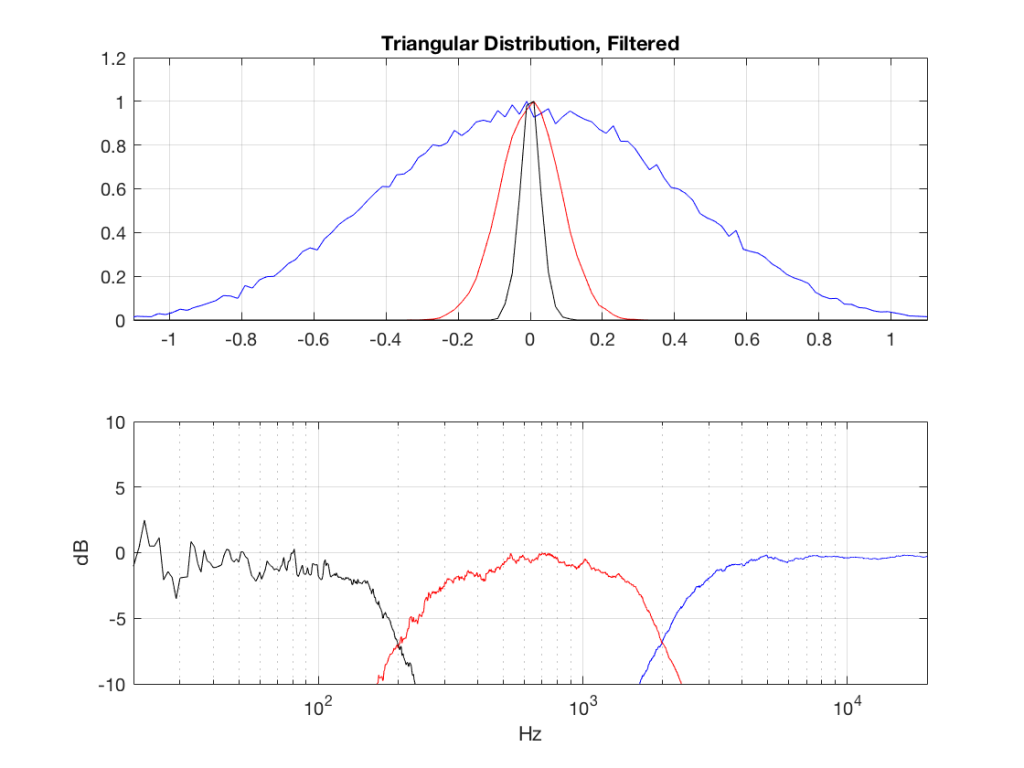

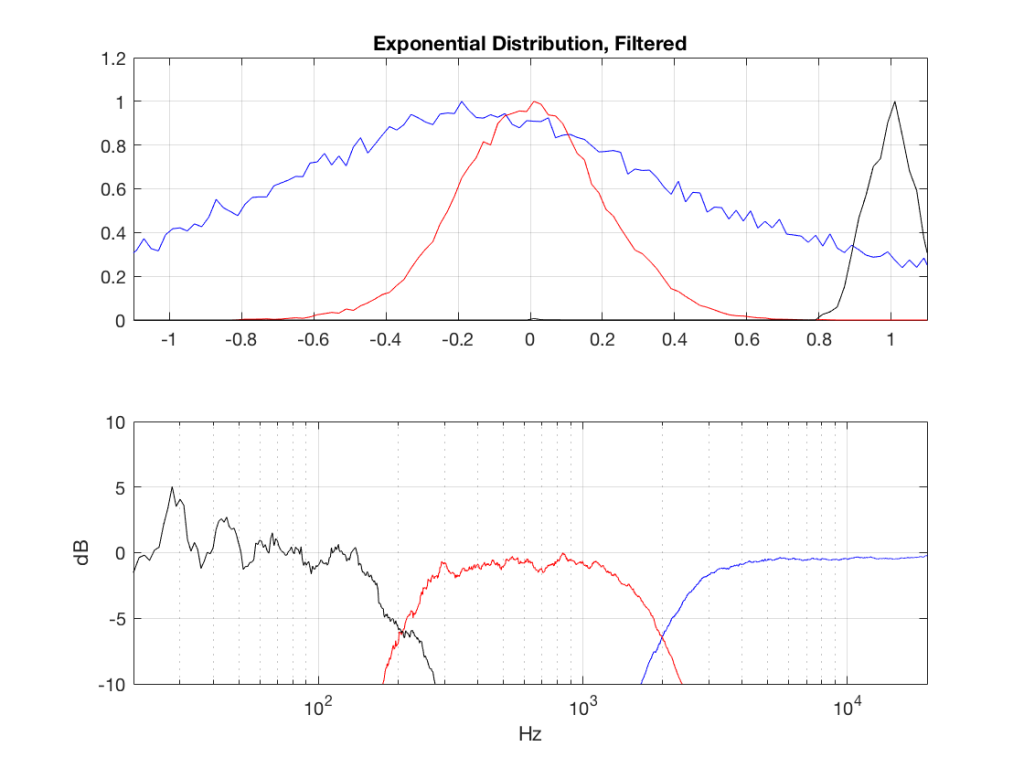

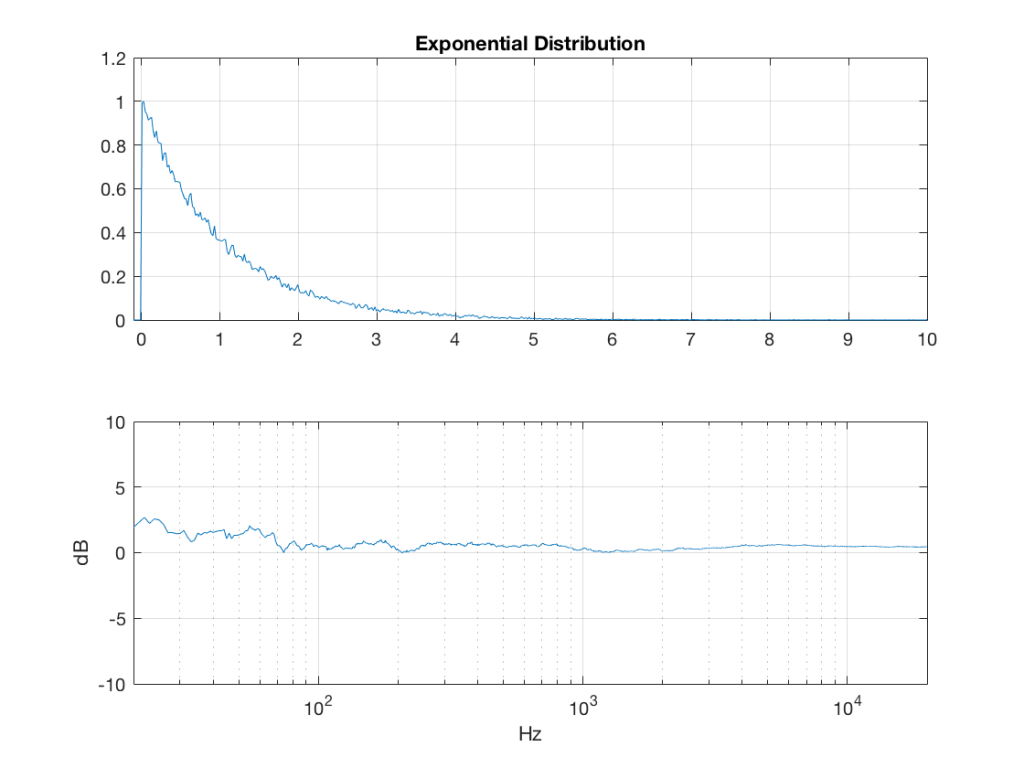

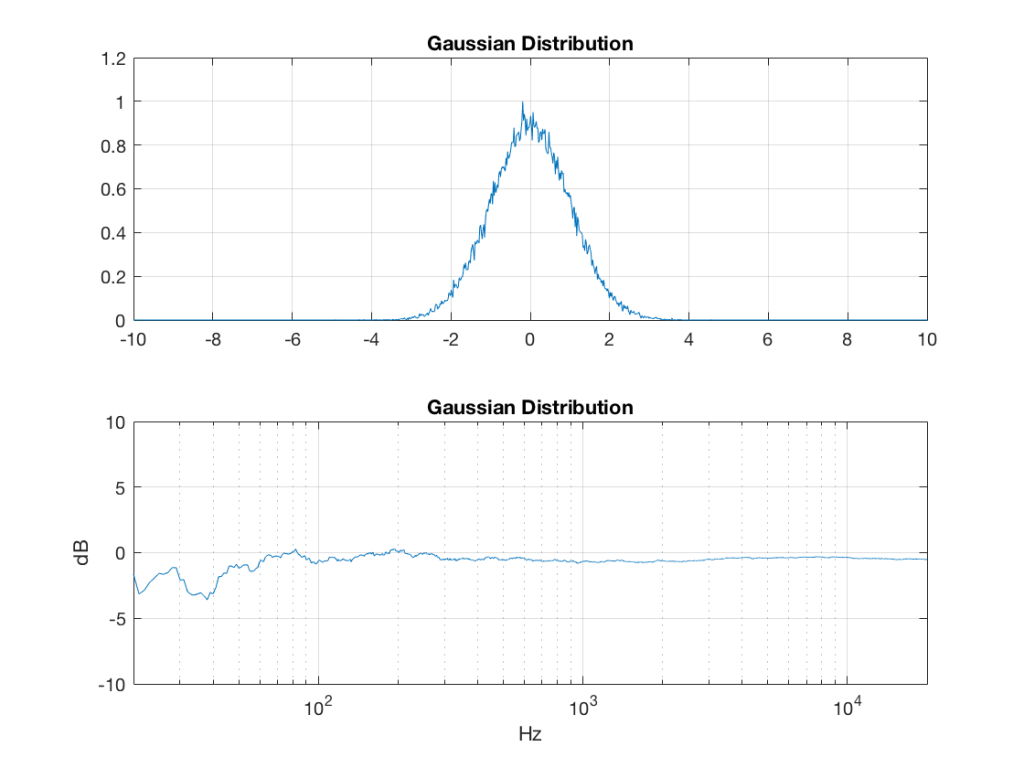

The resulting effects on the probability density functions are shown below. (Check the last posting for plots of the PDFs of the full-band signals – however, note that I made new noise signals, so the magnitude responses won’t match directly.)

The magnitude responses shown in the plots below have been 1/3-octave smoothed – otherwise they look really noisy.

Post-script

This posting has a Part 1 that you’ll find here and a Part 2 that you’ll find here.

Probability Density Functions, Part 2

In a previous posting, I showed some plots that displayed the probability density functions (or PDF) of a number of commercial audio recordings. (If you are new to the concept of a probability density function, then you might want to at least have a look at that posting before reading further…)

I’ve been doing a little more work on this subject, with some possible implications for how to interpret those plots. Or, perhaps more specifically, with some possible implications for the conclusions to be drawn from those plots.

Full-band examples

To start, let’s create some noise with a desired PDF, without imposing any frequency limitations on the signal.

To do this, I’ve ported equations from “Computer Music: Synthesis, Composition, and Performance” by Charles Dodge and Thomas A. Jerse, Schirmer Books, New York (1985) to Matlab. That code is shown below, in case you might want to use it. (No promises are made regarding the code quality… However, I will say that I’ve written the code to be easily understandable, rather than efficient – so don’t make fun of me.) I’ve made the length of the noise samples 2^16 because I like that number. (Actually, it’s for other reasons involving plotting the results of an FFT, and my own laziness regarding frequency scaling – but that’s my business.)

Uniform (aka Rectangular) Distribution

uniform = rand(2^16, 1);

Of course, as you can see in the plots in Figure 1, the signal is not “perfectly” rectangular, nor is it “perfectly” flat. This is because it’s noise. If I ran exactly the same code again, the result would be different, but also neither perfectly rectangular nor flat. Of course, if I ran the code repeatedly, and averaged the results, the average would become “better” and “better”.
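One way to estimate a PDF from a noise vector is a histogram scaled so that its area is 1. The sketch below is only an illustration: the bin count of 50 is an arbitrary assumption, and this is not necessarily how the plots in this posting were made.

```matlab
% Sketch: estimate the PDF of uniform noise with a normalised histogram.
x        = rand(2^16, 1);          % uniform noise, as in the post
nbins    = 50;                     % assumed number of bins (arbitrary)
binwidth = 1 / nbins;              % rand() spans the range 0 to 1

% Bin index (1..nbins) for every sample; min() guards the upper edge
idx    = min(floor(x * nbins) + 1, nbins);
counts = accumarray(idx, 1, [nbins, 1]);

% Scale the counts so that the area under the histogram equals 1
pdf_est = counts / (numel(x) * binwidth);
centres = ((1:nbins)' - 0.5) * binwidth;
% bar(centres, pdf_est);           % uncomment to plot
```

For a uniform distribution on 0 to 1, the estimate should hover around 1 in every bin, with the bin-to-bin wobble shrinking as more runs are averaged.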

Linear Distribution

linear_temp_1 = rand(2^16, 1);

linear_temp_2 = rand(2^16, 1);

temp_indices = find(linear_temp_1 < linear_temp_2);

linear = linear_temp_2;

linear(temp_indices) = linear_temp_1(temp_indices);
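The find-based code above keeps the smaller of the two random values at each position, so it can also be written with min(). The sketch below checks that the two versions agree and compares the sample mean with 1/3, which is the theoretical mean of the smaller of two independent uniform values. The names u1, u2, linear_min and linear_find are just for this illustration.

```matlab
% Sketch: the "linear" distribution as the element-wise minimum of two
% uniform noise vectors, compared with the find-based version.
u1 = rand(2^16, 1);
u2 = rand(2^16, 1);

linear_min = min(u1, u2);          % compact equivalent

idx         = find(u1 < u2);       % find-based version, as in the post
linear_find = u2;
linear_find(idx) = u1(idx);

same        = isequal(linear_min, linear_find);   % should be true
sample_mean = mean(linear_min);                   % theory: 1/3
```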

Triangular Distribution

triangular = rand(2^16, 1) - rand(2^16, 1);

Exponential Distribution

lambda = 1; % lambda must be greater than 0

exponential_temp = rand(2^16, 1);

if any(exponential_temp == 0) % ensure that no values of exponential_temp are 0

error('Please try again...')

end

exponential = -log(exponential_temp) / lambda; % divide after the log so that lambda scales the result
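A quick sanity check for a generator like this is to compare the sample mean with 1/lambda, which is the mean of an exponential distribution. The sketch below assumes the usual inverse-transform form, -log(u)/lambda, and picks lambda = 2 only so that the scaling is visible.

```matlab
% Sketch: check the mean of exponentially distributed noise.
% Assumption: inverse-transform form, x = -log(u) / lambda.
lambda = 2;                        % arbitrary test value, must be > 0
u      = rand(2^16, 1);            % uniform noise
expo   = -log(u) / lambda;

sample_mean = mean(expo);          % theory: 1/lambda
theory_mean = 1 / lambda;
```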

Bilateral Exponential Distribution (aka Laplacian)

lambda = 1; % must be greater than 0

bilex_temp = 2 * rand(2^16, 1);

% check that no values of bilex_temp are 0 or 2

if any(bilex_temp == 0 | bilex_temp == 2)

error('Please try again...')

end

bilex_lessthan1 = find(bilex_temp <= 1);

bilex(bilex_lessthan1, 1) = log(bilex_temp(bilex_lessthan1)) / lambda;

bilex_greaterthan1 = find(bilex_temp > 1);

bilex_temp(bilex_greaterthan1) = 2 - bilex_temp(bilex_greaterthan1);

bilex(bilex_greaterthan1, 1) = -log(bilex_temp(bilex_greaterthan1)) / lambda;
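The same kind of noise can be sketched without the two find() branches by using the standard inverse-CDF form of the Laplacian distribution. This is an alternative formulation, not the code from the book. The variance check relies on the fact that a Laplacian with rate lambda has a variance of 2/lambda^2.

```matlab
% Sketch: bilateral exponential (Laplacian) noise in one vectorised step.
% Assumption: standard inverse-CDF form applied to u uniform on (-0.5, 0.5).
lambda = 1;                            % must be greater than 0
u      = rand(2^16, 1) - 0.5;          % uniform, centred on zero
bilex_alt = -sign(u) .* log(1 - 2*abs(u)) / lambda;

sample_mean = mean(bilex_alt);         % theory: 0
sample_var  = var(bilex_alt);          % theory: 2 / lambda^2
```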

Gaussian

sigma = 1;

xmu = 0; % offset

n = 100; % number of random number vectors used to create final vector (more is better)

xnover = n/2;

sc = 1/sqrt(n/12);

total = sum(rand(2^16, n), 2);

gaussian = sigma * sc * (total - xnover) + xmu;

Of course, if you are using Matlab, there is an easier way to get a noise signal with a Gaussian PDF, and that is to use the randn() function.
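One difference from randn() is worth a small sketch: a sum of n uniform values can never exceed n, so the scaled result is confined to plus or minus sigma*sqrt(3*n) around the offset, whereas a true Gaussian has no such limit. The variable names g and limit are only for this illustration.

```matlab
% Sketch: the sum-of-uniforms "Gaussian" has a hard amplitude limit.
n     = 100;                       % number of uniform vectors summed
sigma = 1;
sc    = 1 / sqrt(n/12);            % scales the summed variance (n/12) to 1

total = sum(rand(2^16, n), 2);     % each row is a sum of n uniform values
g     = sigma * sc * (total - n/2);

limit      = sigma * sqrt(3*n);    % largest magnitude this method can reach
sample_std = std(g);               % should be close to sigma
```

With n = 100 the limit is a little over 17 standard deviations, so in practice the truncation is invisible. It only becomes noticeable when n is small.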

The effects of band-passing the signals

What happens to the probability distribution of the signals if we band-limit them? For example, let’s take the signals that were plotted above, and put them through two sets of two second-order Butterworth filters in series, one set producing a high-pass filter at 200 Hz and the other resulting in a low-pass filter at 2 kHz. (This is the same as if we were making a mid-range signal in a 4th-order Linkwitz-Riley crossover, assuming that our midrange drivers had flat magnitude responses far beyond our crossover frequencies, and therefore required no correction in the crossover…)

What happens to our PDFs as a result of the band limiting? Let’s see…

So, what we can see in Figures 7 through 12 (inclusive) is that, regardless of the original PDF of the signal, if you band-limit it, the result has a Gaussian distribution.
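A rough way to put a number on this is kurtosis, which is 1.8 for a uniform distribution and 3 for a Gaussian. The sketch below band-passes uniform noise with the filter chain described above and compares the kurtosis before and after. Two things are my own assumptions rather than the post's method: the biquad coefficients use the bilinear-transform "cookbook" form, and the first 2^12 output samples are discarded to skip the filter's start-up transient.

```matlab
% Sketch: band-pass uniform noise and compare kurtosis before and after.
% Assumptions: cookbook biquads; start-up transient discarded.
fs = 2^16;                          % sampling rate in Hz, as in the post
x  = rand(2^16, 1);                 % uniform noise, clearly not Gaussian
Q  = 1/sqrt(2);                     % 2nd-order Butterworth

w0 = 2*pi*200/fs;   alpha = sin(w0)/(2*Q);      % high-pass at 200 Hz
b_hp = (1 + cos(w0)) * [0.5, -1, 0.5];
a_hp = [1 + alpha, -2*cos(w0), 1 - alpha];

w0 = 2*pi*2000/fs;  alpha = sin(w0)/(2*Q);      % low-pass at 2 kHz
b_lp = (1 - cos(w0)) * [0.5, 1, 0.5];
a_lp = [1 + alpha, -2*cos(w0), 1 - alpha];

y = x;
for k = 1:2                         % two sections of each type in series
    y = filter(b_hp, a_hp, y);
    y = filter(b_lp, a_lp, y);
end
y = y(2^12 + 1:end);                % drop the start-up transient

kurt  = @(v) mean((v - mean(v)).^4) / mean((v - mean(v)).^2)^2;
k_in  = kurt(x);                    % near 1.8 for uniform noise
k_out = kurt(y);                    % expected to move towards 3
```

Kurtosis is only one summary of the shape, so a value near 3 is consistent with a Gaussian-looking PDF but does not prove it. The histograms are still worth looking at.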

And yes, I tried other bandwidths and filter slopes. The result, generally speaking, is the same.

One part of this effect is a little obvious. The high-pass filter (in this case, at 200 Hz) removes the DC component, which makes all of the PDFs symmetrical around the 0 line.

However, the “punch line” is that, regardless of the distribution of the signal coming into your system (and that can be quite different from song to song as I showed in this posting) the PDF of the signal after band-limiting (say, being sent to your loudspeaker drivers) will be Gaussian-ish.

And, before you ask, “what if you had only put in a high-pass or a low-pass filter?” – that answer is coming in a later posting…

Post-script

This posting has a Part 1 that you’ll find here, and a Part 3 that you’ll find here.

One-dimensional answers to a multidimensional question: Part 1

Often, people want simple answers to what are usually complicated questions. “What is the best restaurant in the world?” “Is this new movie worth seeing?” “Is it better for ‘the environment’ to buy cloth or disposable diapers?” “Is this Metallica album any good?”

My rule of thumb to any question like this is that, if the answer is “it depends….” then I immediately trust the person doing the answering. Once upon a time, the answer to the first question was “Noma in Copenhagen” if you believed one rating, but if you don’t like eating yogurt with ants, perhaps you might disagree… Some people screamed with delight when they saw the latest Star Wars trailer – others aren’t into science fiction so much, so they might not be the first in line to buy tickets. It turns out that the cloth vs. disposable question is, in part, dependent on geography and natural resources.

However, it’s easy to go to many websites and learn that Death Magnetic is an affront to humanity due to a heavy-handed use of dynamic range compression, and the engineers involved should be on a “most wanted” poster with bounties on their heads…

Personally, I disagree. True, Death Magnetic isn’t the best album to use to show what a wide-dynamic-range recording sounds like – but that’s just one aspect of everything about that recording. Similarly, it would be misguided to say that “Casablanca” is a bad movie because of its obvious lack of colours – there are other aspects of that movie that might make it worth watching…

Now, before we go any further, don’t send me emails and comments about this. If you stick with me, you’ll see that my point is not to argue the merits of Death Magnetic. If you don’t like it, I respect your opinion, and I won’t make you listen to it. (And, just for the record, while I type this, I’m listening to an old recording of Schoenberg String Quartets on my headphones – hopefully proof that I’m open to listening to other albums as well…)

My problem with the people I’m trying to make fun of is that they use a single measure – a one-dimensional analysis – to judge the quality of something that has more dimensions. For example, no one ever complains about the overall frequency range of Death Magnetic. Maybe that’s really good (whatever that means).

We can even go further in our mission to pick nits: How, exactly, does one measure or rate the “dynamic range” of a recording? Spoiler alert: this question will not be answered by the end of this blog posting. I just hope to show that answering this question is difficult – and that you shouldn’t trust someone else’s rating…

To start, let’s get back to basics. The dynamic range of something is a measure of the ratio of its loudest level to its quietest. So, we can talk about the dynamic range of an audio device – comparing the level at which it can go no louder to the level of its noise floor (both of which are dependent on the frequency to which you want to pay attention). Or, we could talk about the dynamic range of a conversation over dinner – from the loudest moment in the evening when the drunk, belligerent person was shouting down the table at someone he disagreed with, to that quiet moment early in the evening at 8:20 p.m. when no one at the table could think of something to say.

Let’s take a different recording as an example, since it will make the show-and-tell a little easier.

If I take Jennifer Warnes’s 1986 recording of “Bird on a Wire” from the album “Famous Blue Raincoat” and plot the sample values of the left channel on a linear scale ranging from -1 to 1, it will look like Figure 1.

This shows us some stuff. For example, we can see that there are no samples louder than 0.5 of maximum. We can also see that it’s a bit “spiky” – probably drum beats, if we don’t know the tune. There’s also a part of the tune centred around the 150 second mark that’s probably a bit louder, and a section afterwards that is a bit quieter. However, what is the “dynamic range” of this song?

Attempt #1

One way to answer the question is to compare the loudest peak in the song to the average level (also known as the “crest factor” of the signal). Let’s try that…

Using Matlab, I found out that the loudest peak is a single sample with a value of 0.4973 that occurs 259.4884 seconds into the tune (sample #11,443,439, if you’re wondering). Then I calculated the overall RMS value of the entire track (the square Root of the Mean of the sample values individually Squared) and found out that it’s 0.0530.

Therefore, our “dynamic range” of this recording is 20*log10(0.4973 / 0.0530) = 19.45 dB
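The same calculation can be sketched for any signal vector. Since the recording itself can't be included here, the example uses a 1 kHz sine wave as a stand-in (an assumption for illustration only), and then repeats the arithmetic with the two values quoted above.

```matlab
% Sketch: crest factor (peak relative to overall RMS) in dB.
crest_dB = @(x) 20*log10(max(abs(x)) / sqrt(mean(x.^2)));

fs   = 44100;                       % assumed sampling rate in Hz
t    = (0:fs-1)' / fs;              % one second of time values
tone = sin(2*pi*1000*t);            % stand-in signal: a 1 kHz sine wave

cf_tone = crest_dB(tone);           % about 3 dB for a sine wave
cf_song = 20*log10(0.4973 / 0.0530);   % values quoted in the post: about 19.45 dB
```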

That’s an answer that was easy to calculate. However, to borrow a quote from Pauli: “That is not only not right; it is not even wrong.”

This is because the value of a single sample in a song cannot be considered to be the perceived peak in the music – even if it is the biggest one. In addition, the total average power of all of the samples in the song means nothing, since you can’t listen to the entire song at once. It’s like saying “what is the elevation of the Alps?” You can do the average, and come up with a number, but it won’t matter much for planning your hike over Mont Blanc…

Attempt #2

One of the problems with Figure 1 is that it shows the sample values on a linear scale, which has little to do with the way we hear. For example, we hear a drop from a linear value of 1 down to a linear value of 0.5 the same as going from 0.5 down to 0.25 – and 0.25 down to 0.125… (I was halving the signal in each of those steps). So, let’s re-plot the values on a logarithmic scale, which is a better visual representation of how we hear the signal. This is shown in Figure 2.

Things look a little less different now – although, to be fair, I have a range of 100 dB on the Y-axis – which is big. Note that the samples with a value of 0 are not plotted on this graph, since they will have a value of -∞ dB, which would require a bigger computer screen. So, if you could zoom in on this plot, you’d see holes where those sample values occur.
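The conversion behind a plot like this is 20*log10 of the absolute sample value. A minimal sketch with made-up values shows the equal 6 dB steps for each halving, and one way (an assumption about the plotting, not necessarily what was done here) to keep the zero-valued samples off the graph.

```matlab
% Sketch: convert linear sample values to dB relative to full scale.
levels    = [1; 0.5; 0.25; 0.125];      % each value is half of the previous one
levels_dB = 20*log10(abs(levels));      % 0, then steps of about -6.02 dB
steps_dB  = diff(levels_dB);            % every halving is the same step in dB

zero_dB = 20*log10(abs(0));             % -Inf: a zero sample has no finite level

% For plotting, replace the -Inf values with NaN so that plot() leaves a gap
x    = [0.5; 0; -0.25];                 % made-up sample values
x_dB = 20*log10(abs(x));
x_dB(x == 0) = NaN;
```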

Let’s zoom in a little on the vertical axis so that things are a little more friendly.

Okay, so we still see that there’s a quiet section around the 200-second mark, but the loud section just before it doesn’t seem as much louder as it did in the linear plot.

There is a quick lesson to be learned here: linear plotting of audio tends to exaggerate the louder stuff. This is because, in a linear world, the area of 0.5 up to 1.0 is half of the plot (if we only talk about the positive world), but in the log world (which our ears live in) it’s only the top 6 dB. (Similarly, if you’re plotting in linear frequency, the top octave on a piano is more important than all of the others combined… which is not true.)

Another way to see this exaggeration is to look at the dip in level at about the 220 second mark. This is much more evident as a “trough” in Figure 3 than in Figure 1, because the low-level samples get equal attention in the log-scale plot.

Why have I mentioned this? It’s because if you go to YouTube and watch videos of people ranting about the “loudness wars” they often do demonstrations where they use compression to reduce the dynamic range of old recordings and play “before and after” results. As they do this, the video shows what looks like a real-time plot of a linear representation of the results. Apart from the fact that it’s likely that you’re actually looking at a scaling of a plot in a graphics editor, and not in an audio editor, the linear representation is not a fair one to show what a compressor does to an audio signal. Note that I’m not making any comment about the content of those videos – I’m just warning you about jumping to conclusions based on the visual representation of the data.

Okay, back to our story… How can we find the dynamic range of this recording?

Well, our second possibility is to look for a loud section in Figure 3 and compare it to a quiet section in Figure 3. The problem is, how do we find those sections? We’ve already established that looking at the loudest single sample in the entire song is a silly idea. So is finding the RMS of the entire track. Let’s try something else. Let’s do a running RMS of the song. This is shown in Figure 4.

Now, one of the problems with an RMS calculation is that part of the calculation is to take the mean (or average) of a bunch of values. However, you have to decide how many values to average – in other words, “how long a slice of time are we taking?” In the plot above, the RMS value (shown in red) is calculated using a 10-second window (which is why it starts at the 5-second mark). For each point on that red line, I took all of the audio samples from 5 seconds before to 5 seconds afterwards, squared them, found the mean value, and then took the square root of that. This can give me some indication of the level of the music in those 10 seconds – but we don’t hear all 10 seconds at once… so now, it’s like asking the average height of Mont Blanc (instead of the average height of the Alps). So, this information is still not really useful.
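A running RMS of this kind can be sketched with a cumulative sum of the squared samples. The example below is only an illustration: it uses a short 100 Hz test tone, a low sampling rate and a 1-second window (all assumptions chosen to keep it small) rather than the recording and the 10-second window described above.

```matlab
% Sketch: running RMS with a sliding window, using a cumulative sum.
fs      = 8000;                       % assumed sampling rate in Hz
t       = (0:3*fs - 1)' / fs;         % three seconds of time values
x       = sin(2*pi*100*t);            % stand-in signal: a 100 Hz sine wave
win_sec = 1;                          % window length in seconds (assumed)
win     = round(win_sec * fs);        % window length in samples

c       = [0; cumsum(x.^2)];          % running total of squared samples
mean_sq = (c(win + 1:end) - c(1:end - win)) / win;   % mean square per window
running_rms = sqrt(max(mean_sq, 0));  % max() guards against rounding below 0

% Time at the centre of each window, which is where the value is plotted
t_centre = ((0:numel(mean_sq) - 1)' + (win - 1)/2) / fs;
% plot(t_centre, 20*log10(running_rms));   % uncomment to plot in dB
```

Changing win_sec to 10, 1 or 0.03 gives window lengths like the ones discussed in this posting. The first output lands half a window into the signal, which is why the curve starts late.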

One thing is interesting, though… Look at where the red line says the quietest moment in the song is. It’s not centred around the deep trough at 220 seconds. It’s a little later than that. This is because a narrow, deep notch in the blue signal gets averaged out of existence in the RMS calculation – making it less significant.

Another interesting thing is to note how low the RMS value is relative to the instantaneous values. (In other words, the red line is a lot lower than the top of the blue curve.) This is because the peaks in the signal are short – but that’s not visible because I’m plotting the whole tune. If I zoom in to only one second of music (in Figure 5) this becomes more intuitive…

So, let’s reduce our RMS time constant to see what happens…

Now we’re starting to see a little more variation in the RMS signal, which might be a little closer to the way we hear things. But it’s still not a good representation because the RMS time window is 1 second – and we don’t hear 1 second of music simultaneously either…

So, how much time is “now” when we’re listening to music? Some people say 30 milliseconds is a good number, since anything outside that window can be heard as an echo… This is a mis-interpretation of a mis-interpretation, but at least it gives us a number to put into the math, so let’s try that.

Now, if you squint just right, the red curve basically looks like a lower copy of the blue curve, so it’s probably not useful at this magnification. Let’s zoom in to see if it makes more sense…

Enough… Hopefully you can see where I’m headed with this: nowhere… The simple problem is that, in order to find the dynamic range of a piece of music, we need to compare the loudest moment to the quietest moment. Unfortunately, none of what I’ve shown you so far is a measurement of either of these two values.

We can do other things. We can band-limit the signal (since neither 20 kHz nor 20 Hz contributes much to how loud something sounds). We can play with time constants (maybe using a short one to invent a number for the peaks, and a longer one for the quiet moments). We can do other, more complicated stuff, including applying filters (simple ones like the ones used in the ITU-R BS.1770 standard, or complicated ones like the ones suggested by companies who make gear).

We also have to consider whether our perception of how loud something is is related to the average level or the peak level (JJ points out in his lecture at about 20:38 that different persons will disagree on this…).

Ultimately, we get to a point where we have to say that a single number to represent the dynamic range of a song is a nice number to have, but probably not related to how you perceive it. And if it is, it might not be related to how I perceive it…

And, in the end, the REAL message is that it doesn’t matter. Deciding that a recording is “good” because it has been rated to have a wide dynamic range by someone’s calculation is like saying that “Casablanca” isn’t a good movie because it doesn’t have any colours in it – or choosing a restaurant based on a single rating from one critic. No matter what the rating is, if you don’t want to eat live ants crawling on yogurt, you should probably go somewhere else…

Addendum: Before you comment on this posting, please go and watch the two lectures by JJ here and here. If the content of your comment indicates that you have not watched these two lectures, I will probably delete it from my website. You’ve been warned… I will also delete your comment if you mention Death Magnetic – if you want to talk about that, make your own website. You, too, have been warned.

B&O Tech: BeoLab 90 – How to set up Active Room Calibration

#64 in a series of articles about the technology behind Bang & Olufsen products